Report

Date: Dec 15, 2013

Project Repo: https://github.com/seekshreyas/obidroid

| Team Members | Contact |
|---|---|
| Luis Aguilar | [email protected] |
| Morgan Wallace | [email protected] |
| Shreyas | [email protected] |
| Kristine A Yoshihara (from Info 219 Computer Security, contributed research) | [email protected] |

As outlined in the Project Proposal, our original project goals were:

"Our main aim is develop a filter system for flagging unfair apps, via customer reviews and app descriptions."

"We do not aim to predict if an app is unfair or not using the reviews/description. Instead we aim to help scale down the problem of policing every app periodically on the app store by building a good indicator/flagging system."

So although we built a classifier for predicting whether an app is fair or unfair, we were more interested in finding the most informative features for such a classifier, since those could help scale down the problem of policing the app store.

Please refer to our initial premises of Acceptable Ground Rules, Assessment Scenarios and Deliverables as outlined in the Project Proposal.

We were able to develop/build:

| Module/Utility | Type | Description |
|---|---|---|
| crawler.py | Script[^note-1] | Given a saved HTML page[^note-2] of a Google Play Store app listing (e.g. free-biz.html), it compiles a list of all the apps listed on that page and exports it to a txt file in the inputs/ directory. |
| scraper.py | Script[^note-1] | Scrapes all the desired features of each app URL from the crawler's exported txt file and compiles them into their respective json files in the exports/ directory. |
| Labeling Machinery Scripts | Module | Enables a human to quickly label a large number of apps manually as fair/unfair |
| Sentiment Analysis Scripts [^note-3] | Module | Aggregates a sentiment score over all the reviews of a particular app |
| Application Data CRUD Operations/Classifier/Analysis Scripts | Module | Scripts for data storage and retrieval, and for supervised and unsupervised learning on the dataset |
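As a rough illustration of what the crawler step involves, the sketch below pulls app IDs out of a saved listing page. It is not the code in crawler.py: the class and function names are hypothetical, and it assumes that app links on the saved page use the Play Store's `/store/apps/details?id=...` URL form.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse, parse_qs


class AppLinkParser(HTMLParser):
    """Collects app IDs from anchors that point at app detail pages (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.app_ids = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        parsed = urlparse(href)
        # Assumption: detail pages look like /store/apps/details?id=<appId>
        if parsed.path.endswith("/store/apps/details"):
            ids = parse_qs(parsed.query).get("id", [])
            if ids and ids[0] not in self.app_ids:
                self.app_ids.append(ids[0])  # keep first occurrence only


def extract_app_ids(html_text):
    """Return the unique app IDs found in a saved listing page, in page order."""
    parser = AppLinkParser()
    parser.feed(html_text)
    return parser.app_ids


# Tiny stand-in for a saved listing page such as free-biz.html
sample = """
<a href="/store/apps/details?id=com.example.one">One</a>
<a href="https://play.google.com/store/apps/details?id=com.example.two&amp;hl=en">Two</a>
<a href="/store/apps/details?id=com.example.one">One again</a>
<a href="/store/music">Not an app</a>
"""
print(extract_app_ids(sample))
```

Working from a saved page keeps this step independent of how the live listing loads its content.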

One of the challenges we faced during the project was finding good ground truth for labeling the apps. Initially we were hopeful that we would be able to obtain such a list from the FTC, but when that did not come to fruition, we decided to label the apps manually based on a set of Evaluation Criteria.

So we developed scripts for our Labeling Machinery. These scripts concatenate the given json files and export them to a csv file that can be loaded into software like Excel, so that a human can review the desired features of each app and label it.

A human reviewer (Morgan Wallace) went through 300+ apps with 1800+ reviews, applying the Evaluation Criteria to the user reviews to weed out the unfair apps. This gave a list of 42 apps.

To get the latest features[^note-4], we then tried scraping those apps again, but found that 18 of them had been taken down[^note-5]. In a way, this seems to support our intuition that unfair apps can be labeled using user reviews alone.

| Scripts | Script Description |
|---|---|
| scripts/concat.py | concatenate the given json files |
| scripts/json_extract_to_csv.py | export the overall json files to csv to enable loading in Excel for labeling |
| scripts/extract_unfair.py | extract apps labeled unfair and append them to docs/malapps.txt |
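The concatenate-then-export flow described above could look roughly like the following. This is a minimal sketch, not the contents of scripts/concat.py or scripts/json_extract_to_csv.py; the field names (`appId`, `name`, `reviews`) and the layout of the JSON files (a list of app records per file) are assumptions made for illustration.

```python
import csv
import io
import json


def concat_apps(json_texts):
    """Merge several JSON documents, each assumed to be a list of app records."""
    apps = []
    for text in json_texts:
        apps.extend(json.loads(text))
    return apps


def apps_to_csv(apps, fields):
    """Return CSV text with the chosen fields plus an empty 'label' column."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields + ["label"])
    writer.writeheader()
    for app in apps:
        row = {}
        for name in fields:
            value = app.get(name, "")
            # Flatten lists (e.g. reviews) so they fit in one spreadsheet cell
            row[name] = " | ".join(value) if isinstance(value, list) else value
        row["label"] = ""  # to be filled in by the human reviewer: fair / unfair
        writer.writerow(row)
    return buffer.getvalue()


# Hypothetical inputs standing in for two scraped json files
file_a = '[{"appId": "com.example.one", "name": "One", "reviews": ["Nice.", "Works."]}]'
file_b = '[{"appId": "com.example.two", "name": "Two", "reviews": ["SCAM!"]}]'

csv_text = apps_to_csv(concat_apps([file_a, file_b]), ["appId", "name", "reviews"])
print(csv_text)
```

Keeping the label as an empty trailing column lets the reviewer fill it in directly in the spreadsheet before the labeled rows are read back.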

Using our Sentence Sentiment Classifier[^note-3], we assigned each sentence a score of -1, 0 or 1 and then summed these scores over every sentence in each review. We chose not to normalize the aggregate scores by review length, as we felt that the length of a review was itself a useful signal for this feature.

| Scripts | Script Description |
|---|---|
| parser.py, extractor.py | for extracting NLP features from each sentence |
| mySentClassifer.pickle | sentence sentiment classifier |
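The aggregation described above can be sketched as follows. The word-list classifier here is only a stand-in for the pickled sentence classifier, and the sentence splitter is deliberately naive; both are assumptions for illustration, not the project's code.

```python
import re

# Hypothetical word lists; the real classifier was trained on labeled sentences
POSITIVE = {"great", "love", "useful"}
NEGATIVE = {"scam", "spam", "steals", "crashes"}


def toy_classify(sentence):
    """Stand-in sentence classifier returning -1, 0 or 1."""
    words = set(re.findall(r"[a-z']+", sentence.lower()))
    if words & NEGATIVE:
        return -1
    if words & POSITIVE:
        return 1
    return 0


def split_sentences(review):
    """Naive sentence splitter on ., ! and ? (an assumption, not the project's parser)."""
    return [s.strip() for s in re.split(r"[.!?]+", review) if s.strip()]


def aggregate_sentiment(reviews, classify_sentence):
    """Sum the per-sentence scores over all reviews of one app, with no length normalization."""
    total = 0
    for review in reviews:
        for sentence in split_sentences(review):
            total += classify_sentence(sentence)
    return total


reviews = [
    "Great app. Love it!",
    "This is a scam. It steals contacts. It crashes. I installed it yesterday.",
]
print(aggregate_sentiment(reviews, toy_classify))  # 2 + (-3) = -1
```

Because the scores are summed rather than averaged, a long negative review outweighs a short positive one, which is the length effect the report chose to keep.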

After storing, retrieving and examining the scraped attributes of the apps, we extracted app features and fed them to a Naive Bayes classifier, using 4-fold cross-validation to split the data into training and test sets.

| Features Extracted | Feature Description | Feature Intuition | Feature Histogram |
|---|---|---|---|
| price | price of an app | Free apps might be more malware ridden | - |
| revLength | total sentences in all user reviews | the longer the review, the more coherent the user's feeling about the app | |
| avgRating | average rating of the app | higher rated apps might be more reliable | - |
| hasPrivacy | whether the app has a privacy policy or not | FTC inspired | - |
| revSent | aggregate review sentiment | NLP inspired | |
| hasDeveloperEmail | app has an associated developer email | FTC inspired | - |
| hasDeveloperWebsite | app has an associated developer website | FTC inspired | - |
| countMultipleApps | app has multiple apps associated with it | self | - |
| installs | average installs of each app | self | - |
| exclamationCount | count of exclamation marks, for extreme reviews | NLP inspired | - |
| countCapital | count of capitalized words in a review | NLP inspired | - |
| adjectiveCount | count of adjectives | NLP inspired | - |
| positiveWordCount | count of positive words from a curated list | NLP inspired | - |
| negativeWordCount | count of negative words from a curated list | NLP inspired | - |
| unigrams like has(word) | presence of curated malindicator words | NLP inspired | - |
| bigrams | top 20 bigrams via likelihood ratio measure | NLP inspired | - |
| trigrams | top trigrams based on raw frequency | NLP inspired | - |

- Although scraping gave us separate counts for each rating, (1 star, count), (2 star, count) ..., which we intuitively felt would give us a more granular feature for predicting malware, the classifier gave its best output with an overall average rating.

- Adding `unigrams`, `bigrams` and `trigrams` made our classifier a lot worse (from ~90% -> ~10%). We eventually turned them off. Please review our rationalizations in Results.
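To make a few of the review-based features concrete, here is a minimal sketch of how `exclamationCount`, `countCapital` and `revLength` might be computed. It is not taken from classifier.py: the function name is hypothetical, "capitalized" is assumed to mean fully upper-case words of more than one letter, and sentences are split naively on punctuation.

```python
import re


def review_features(reviews):
    """Compute three illustrative features over all reviews of one app."""
    text = " ".join(reviews)
    words = re.findall(r"[A-Za-z']+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return {
        # number of exclamation marks across all reviews
        "exclamationCount": text.count("!"),
        # assumption: count words written entirely in capitals, ignoring "I" and "A"
        "countCapital": sum(1 for w in words if len(w) > 1 and w.isupper()),
        # total number of sentences in all reviews
        "revLength": len(sentences),
    }


features = review_features(["DO NOT INSTALL!!! This app is a SCAM.", "Works fine."])
print(features)
```

A dictionary of named features like this is the usual input shape for a Naive Bayes classifier that works on feature sets, though the exact representation the project used is not shown here.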

We did some unsupervised learning with the extracted features by exporting the apps and their features to a CSV file and using R scripts to do the analysis.

KMeans Clustering

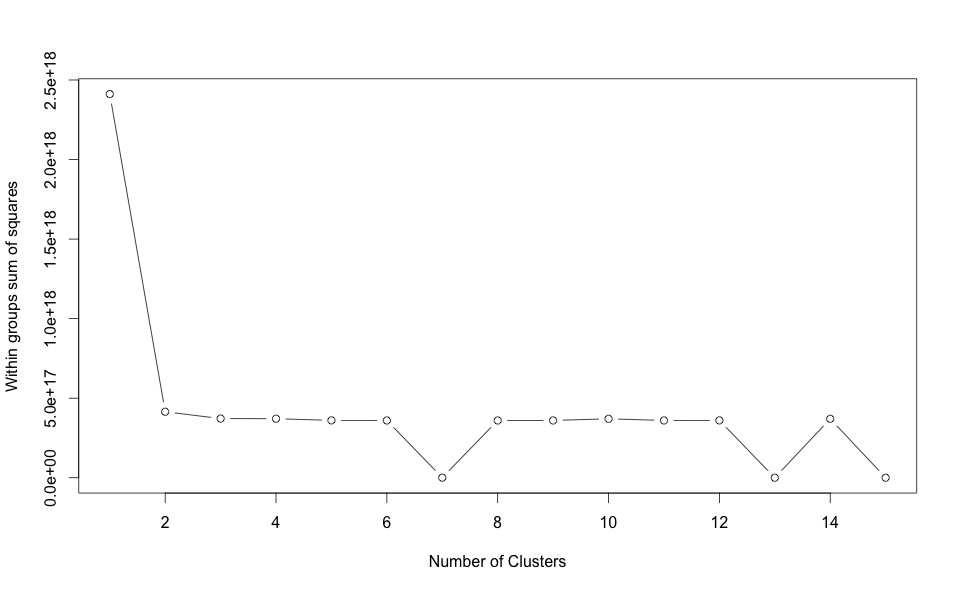

Ideal Number of Clusters (Optimal K)

We observed that the best K for K-means clustering was K=7. The optimal K is found by looking for a bend (the "elbow") in the above curve, as per Quick-R Cluster Analysis.
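The project's clustering was done in R; purely to illustrate how the curve behind the elbow heuristic is produced, the Python sketch below runs a basic Lloyd's k-means on toy 2-D points and reports the within-cluster sum of squares for each K. The data, the function names and the choice to seed centroids with the first K points are all assumptions.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans_wss(points, k, iterations=20):
    """Run Lloyd's algorithm seeded with the first k points; return the within-cluster sum of squares."""
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: squared_distance(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its members; keep it in place if it has none
        centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return sum(min(squared_distance(p, c) for c in centroids) for p in points)


# Toy data: three well-separated pairs of points (hypothetical, not app features)
points = [(0, 0), (10, 10), (20, 0), (0, 1), (10, 11), (20, 1)]
wss_by_k = {k: kmeans_wss(points, k) for k in range(1, len(points) + 1)}
for k, wss in wss_by_k.items():
    print(k, round(wss, 2))
```

On this toy data the value drops sharply up to K=3 and changes very little afterwards, which is the kind of bend the heuristic looks for.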

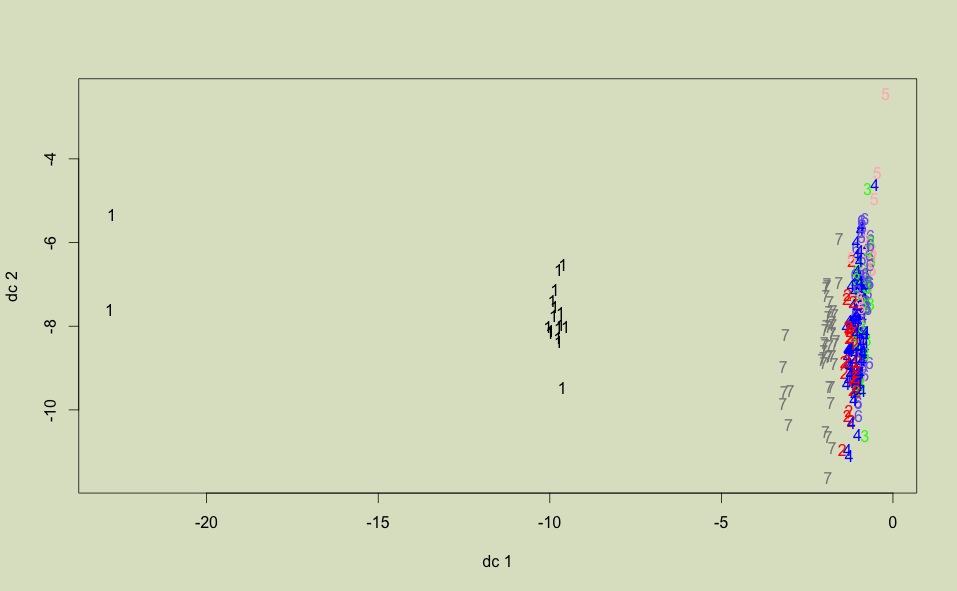

Plotting K=7 clusters

The plot suggests that apps in cluster 1 are a lot further apart from all the other apps. Cluster 7 is also markedly different from the others, while the remaining clusters are quite close together.

| Scripts | Script Description |
|---|---|
| classifier.py | extract features from apps and classify them |
| db/models/app.py | SQLAlchemy class that provides communication to the app table |
| db/models/review.py | SQLAlchemy class that provides communication to the review table |
| db/putAppsReviews.py | loads db with json files of application attributes and reviews |
| db/getAppsReviews.py | gets from db application attributes and reviews by category or appid |
| dataExport.py | export the app features to CSV which can be loaded in R for some analysis |
| ranalytics.r [^note-6] | unsupervised learning on the dataset |
| ranalytics_all.r [^note-6] | full R Worksheet |

Taking our best results for the classifier, which was cross-validated over 4 folds:

- Average Prediction Accuracy: 86.71875%
- Predictions in each fold: [0.8125, 0.90625, 0.84375, 0.90625]
- Overall Most Informative Features:
  - `installs`
    - for `installs = 3000.0` the `unfair : fair` ratio was ~9 : 1
    - for `installs = 30000.0` the `unfair : fair` ratio was ~6 : 1
    - for `installs = 3000000.0` the `fair : unfair` ratio was ~2 : 1
  - `revSent`
    - for `revSent = -17` the `unfair : fair` ratio was ~8 : 1
    - for `revSent = -10` the `unfair : fair` ratio was ~2 : 1
  - `countCapital`
    - for `countCapital = 9` the `unfair : fair` ratio was ~3 : 1
  - `revLength`
    - for `revLength = 800+` the `unfair : fair` ratio was ~2 : 1
  - `avgRating`
    - ambiguous
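As a quick check on the figures above, the sketch below shows one simple way to split a dataset into 4 contiguous folds and confirms that the reported average follows from the per-fold accuracies. The splitting helper is hypothetical (the project's K-fold script is not reproduced here), and the dataset size of 10 is arbitrary.

```python
def kfold_indices(n_items, k):
    """Yield (train, test) index lists for k contiguous folds; the last fold takes any remainder."""
    fold_size = n_items // k
    for fold in range(k):
        start = fold * fold_size
        stop = start + fold_size if fold < k - 1 else n_items
        test = list(range(start, stop))
        train = list(range(0, start)) + list(range(stop, n_items))
        yield train, test


# Each item is used for testing exactly once across the folds
folds = list(kfold_indices(10, 4))
for train, test in folds:
    print("train:", train, "test:", test)

# Per-fold accuracies as reported above
fold_accuracies = [0.8125, 0.90625, 0.84375, 0.90625]
average = sum(fold_accuracies) / len(fold_accuracies)
print(f"{average:.5%}")  # 86.71875%
```

In practice the data would usually be shuffled before splitting so that each fold contains a mix of fair and unfair apps.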

We got an accuracy of ~86% for fair/unfair app prediction. However, we are cautious about this figure, as we had few training examples for malapps.

What we can say is that we were able to come up with a list of the most informative features that could be indicators of malapps, which scales down the problem of evaluating each and every feature of the apps and their reviews.

We would also like to point out that our sentiment classifier performed fairly well in predicting malapps, showing up among the most informative features in every fold. In contrast, the usual NLP features such as unigrams, top bigrams and top trigrams performed poorly and brought down the classifier accuracy. We believe this is related to the ambiguity between bad reviews and unfair reviews, which is hard to pin down in exact words or phrases but easier to gauge from the overall sentiment about the app. Crude NLP features like countCapital, a count of capital words in a review, were better indicators than bigrams/trigrams.

We must obtain more labeled data from additional sources in order to improve our feature selection and classifier.

We would add features that take more processing time, such as verifying that privacy policy links and developer URLs are active and not blacklisted, lightweight parsing of privacy policies for certain keywords, and email domain lookups.

We would set up a server to automate this classification over a broader set of Google Play apps. The automated process would allow newer applications and application version updates to be reviewed and the results to be made available. Furthermore, the automation needs to take into account that comments are continually added to the application profiles over time, so the sentiment of each app will need to be reanalyzed on an iterative basis.

Data was scraped from the web page specific to each app on the Google Play store. We collected data from 60 applications for each of the following categories:

- Business (free)

- Comics (free)

- Communications (free)

- Lifestyle (free)

- Social (free)

We looked mostly at free apps because a higher proportion of them have high install counts, and because free apps might be more enticing and more malware ridden.

| Attributes | Description |
|---|---|
| Name | The official name of the application (e.g. Gmail) |
| Company | The name of the company (e.g. Google) |
| AppCategory | Category of app (e.g. Communication) |
| AppId | The ID used by Google Play to uniquely identify each app (e.g. com.google.android.gm) |
| AppVer | Version number of the app's current release (e.g. 2.1) |
| Price | How much the app costs (US Dollars) |
| Rating | Number of 1, 2, 3, 4, and 5 star ratings given by users |
| Total Reviewers | Total number of users that reviewed the app for all versions |
| CountOfScreenShots | Number of screenshots shown by developer |
| Installs | How many devices have this app installed |
| ContentRating | Maturity rating of the app's content (e.g. Low Maturity) |
| SimilarApps | A list of other AppIDs for apps considered similar by Google Play |
| Description | Developer written description of the app |
| MoreAppsFromDev | A list of AppIDs for other apps that the developer has also made |
| User Reviews | A list of the 6 reviews shown on the page |
| Developer Website URL | Web site link to the developer's own page |
| Developer Email | Email address where users can contact the developer |
| Privacy Policy URL | URL of the privacy policy that explains how the developer uses the users' information |

- Sentiment Analysis Scripts [^note-3]: developed with support from Sayantan Mukhopadhayay and Charles Wang during the earlier assignment.

- R scripts [^note-6]: borrowed from R blogs and sites like Quick R.

- K-fold validation script: adapted from Stack Overflow.

- All other scripts: written by the team.

- Luis Aguilar
  - Created Postgres database for storage of app information
  - Created SQLAlchemy scripts which move app JSON data to/from the database
  - Web Service creation for access to app information
  - Development of some of the features in the classifier script
  - Searches for malware apps and FTC/resource correspondence
- Morgan Wallace
  - Crawler to get app IDs and URLs from an HTML page listing hundreds of apps
  - Conversion script from JSON to CSV for app features for the purpose of labelling
  - Manual labeling (‘fair’ or ‘unfair’) of over 1800 app reviews
- Shreyas
  - Project Architecture
  - Scraper to get all features
  - Feature Extraction Classifier Scripts
  - R Scripts for unsupervised learning
- Kristine A Yoshihara
  - Research, Experiment Design and Statistical Analysis

The entire code is hosted on GitHub in the Obidroid Repository.

Several key features stood above the rest.

- Installs and capital letter usage in reviews were good predictors of malware.

- Surprisingly, average rating from the user was ambiguous. According to our training set, having a high average rating did not preclude an app from being labelled as unfair.

- Lastly, sentiment was a fair indicator and performed better than other word features like bigrams and trigrams. This may be because specific words or phrases are poor indicators of malapps: most of the time even users do not know whether an app is malware, and it is hard to disambiguate between bad apps and unfair apps.

This work demonstrates the viability of applying natural language processing to the detection of malware apps when it is used in conjunction with classification on other application features. We believe it provides a valuable tool for those, like the FTC, who need to scrutinize more efficiently the plethora of applications that could be harmful to consumers.

- Appendix A: Results

- Appendix B: Code Documentation

- Appendix C: Evaluation Criteria

[^note-1]: Refer to Code Documentation for understanding how to run these utilities with appropriate command line flags.

[^note-2]: We chose to provide the saved HTML page instead of the live URL because the app list is lazy-loaded via AJAX, so fetching the live URL doesn't return all the apps on a page.

[^note-3]: We chose to use the sentiment classifier that was created during the sentence classification assignment as the classifier was also trained on user reviews of products.

[^note-4]: We had to rescrape those app attributes because we had improved our scraper to collect more features in the meantime.

[^note-5]: See the app urls starting with # in docs/malapps.txt

[^note-6]: The R scripts that were used were generally adapted from online tutorials and blogs, mainly QuickR and InstantR.