
Commit a7f6aa1 (parent 2e9c28b), committed by timurcarstensen on Aug 7, 2022: 264 changed files with 38,317 additions and 1 deletion.


New `.gitignore` (23 lines):

- `node_modules`
- `typings`
- `*.pyc`
- `.DS_Store`
- `package-lock.json`
- `mtp-ai-turing-tumble.iml`
- `.idea/`
- `out`
- `reinforcement_learning/wandb`
- `reinforcement_learning/tmp`
- `/out/`
- `/reinforcement_learning/tmp/`
- `.idea/*`
- `reinforcement_learning/wandb/*`
- `out/*`
- `reinforcement_learning/tmp/*`
- `*/META-INF/*`
- `MANIFEST.MF`
- `wandb_key_file`
- `LogFile.txt`
- `State.txt`
- `/reinforcement_learning/dataset_generators/rl_training_set.csv`
- `/reinforcement_learning/environments/envs/bugbit_env_backup.py`


# European Master Team Project - AI Turing Tumble

> This repository holds the code that was developed during the European Master Team Project
> in the spring semester of 2022 (EMTP 22). The project was supervised by
> [Dr. Christian Bartelt](https://www.uni-mannheim.de/en/ines/about-us/researchers/dr-christian-bartelt/) and
> [Jannik Brinkmann](https://www.linkedin.com/in/brinkmann-jannik/). The project team was
> composed of students from the [Babeș-Bolyai University](https://www.ubbcluj.ro/en/)
> in Cluj-Napoca, Romania, and the [University of Mannheim](https://www.uni-mannheim.de/), Germany.

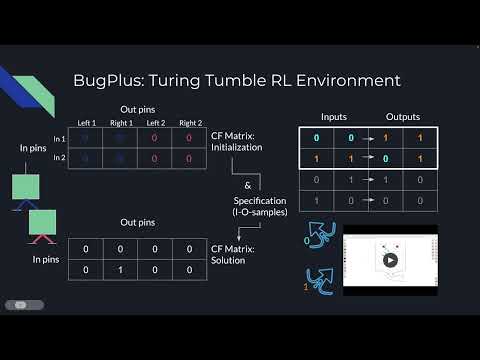

## Introduction

In the game Turing Tumble, players construct mechanical computers that use the flow of marbles along a board to solve
logic problems. Because the board and its parts are Turing complete, meaning that they can in principle express any
computable function, an intelligent agent taught to solve a Turing Tumble challenge essentially learns how to write
code according to a given specification.

Following this logic, we taught an agent to write a simple programme according to a minimal specification, using
an abstracted version of the Turing Tumble board as a reinforcement learning training environment. This relates to
the emerging field of programme synthesis, as applied, for example, in
[GitHub Copilot](https://github.com/features/copilot).

## Participants

### Babeș-Bolyai University

* [Tudor Esan](https://github.com/TudorEsan) - B.Sc. Computer Science
* [Raluca Diana Chis](https://github.com/RalucaChis) - M.Sc. Applied Computational Intelligence

### University of Mannheim

* [Roman Hess](https://github.com/romanhess98) - M.Sc. Data Science
* [Timur Carstensen](https://github.com/timurcarstensen) - M.Sc. Data Science
* [Julie Naegelen](https://github.com/jnaeg) - M.Sc. Data Science
* [Tobias Sesterhenn](https://github.com/Tsesterh) - M.Sc. Data Science

## Contents of this repository

The project directory is organised in the following way:

| Path                      | Role                                         |
|---------------------------|----------------------------------------------|
| `docs/`                   | Supporting material to document the project  |
| `reinforcement_learning/` | Everything related to Reinforcement Learning |
| `src/`                    | Java sources                                 |
| `ttsim/`                  | Source code of the Turing Tumble Simulator   |

## Weights & Biases (wandb)

We used [Weights & Biases](https://wandb.ai/) to log the results of our training:

1. [Reinforcement Learning](https://wandb.ai/mtp-ai-board-game-engine/ray-tune-bugbit)
2. [Pretraining](https://wandb.ai/mtp-ai-board-game-engine/Pretraining)
3. [Connect Four](https://wandb.ai/mtp-ai-board-game-engine/connect-four)

## Credits

We used the following third-party software to implement the project:

- **BugPlus** - [Dr. Christian Bartelt](https://www.uni-mannheim.de/en/ines/about-us/researchers/dr-christian-bartelt/)
- **Turing Tumble Simulator** - [Jesse Crossen](https://github.com/jessecrossen/ttsim)

## Final Project Presentation

Link to video: [Final project presentation on YouTube](https://www.youtube.com/watch?v=w501gf2MLFM)


# Setup

> **DISCLAIMER: this project is meant to be run on Linux or macOS machines. Windows is not supported
> due to a scheduler conflict between JPype and Ray.**

## Prerequisites

1. An x86 machine running Linux or macOS (tested on Ubuntu 20.04 and macOS Monterey)
2. A working, clean (i.e. new/separate) conda installation ([miniconda3](https://docs.conda.io/en/latest/miniconda.html)
   or [anaconda3](https://docs.anaconda.com/anaconda/install/))
3. [IntelliJ IDEA](https://www.jetbrains.com/idea/) (Community Edition or Ultimate)

## Installing dependencies

1. Create and activate a new conda environment for Python 3.8 (`conda create -n mtp python=3.8`, then `conda activate mtp`)
2. Navigate to the project root and run `pip install -r requirements.txt`
3. Run `pip install "ray[all]"` to install all of Ray's optional dependencies
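A quick way to confirm that the steps above produced a usable environment is to check the interpreter version and whether the main modules can be found. The following is a minimal sketch and not part of the repository; the module list (`ray`, `jpype`, `wandb`) is an assumption based on the tools named in this guide (JPype's pip package is `JPype1`, but it imports as `jpype`).

```python
import importlib.util
import sys

# Assumed import names of the key dependencies mentioned in this guide.
REQUIRED_MODULES = ("ray", "jpype", "wandb")


def check_environment(required=REQUIRED_MODULES, expected=(3, 8)):
    """Report whether the interpreter version matches and which modules are missing."""
    # find_spec returns None when a top-level module cannot be located.
    missing = [name for name in required if importlib.util.find_spec(name) is None]
    return {
        "python_ok": sys.version_info[:2] == tuple(expected),
        "missing": missing,
    }


if __name__ == "__main__":
    report = check_environment()
    print(f"Python {sys.version_info.major}.{sys.version_info.minor} matches 3.8: {report['python_ok']}")
    print("Missing modules:", ", ".join(report["missing"]) or "none")
```

Run it with the `mtp` environment activated; `python_ok: True` and no missing modules suggest the installation steps went through.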

## Python Setup

1. In IntelliJ, open the project structure dialogue: `File -> Project Structure`
2. In `Modules`, select the project, click `Add` and select `Python`
3. In the `Python` tab, add a new Python interpreter by clicking on `...`
4. In the newly opened dialogue, click on `+` and then on `Add Python SDK...`
5. In the dialogue, click on `Conda environment` and select the existing environment created in the previous section
6. Select the newly registered interpreter as the project interpreter, click `Apply` and close the dialogue
7. Still in `Project Structure`, navigate to `Modules` and select the project: select the directories `src`
   and `reinforcement_learning` and mark them as `Sources`. Click `Apply` and close the dialogue.
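Marking `src` and `reinforcement_learning` as `Sources` lets IntelliJ resolve imports relative to those directories. When running scripts from a terminal instead, a roughly equivalent setup is to put the same directories on Python's module search path. The sketch below illustrates that assumption; `add_source_roots` and `pythonpath_value` are hypothetical helpers, not part of the project.

```python
import os
import sys
from pathlib import Path

# Directories marked as "Sources" in step 7.
SOURCE_DIRS = ("src", "reinforcement_learning")


def add_source_roots(project_root, dirs=SOURCE_DIRS):
    """Prepend the source directories to sys.path; return the paths actually added."""
    added = []
    for name in dirs:
        path = str(Path(project_root).resolve() / name)
        if path not in sys.path:
            sys.path.insert(0, path)
            added.append(path)
    return added


def pythonpath_value(project_root, dirs=SOURCE_DIRS):
    """Build a PYTHONPATH string containing the source directories."""
    root = Path(project_root).resolve()
    return os.pathsep.join(str(root / name) for name in dirs)
```

For example, running `PYTHONPATH="$(pwd)/src:$(pwd)/reinforcement_learning" python reinforcement_learning/train.py` from the project root should have a similar effect on Linux or macOS.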

## Compiling the project

1. Make sure that the SDK and the language level in the `Project` tab are set to 17 (i.e. openjdk-17)
2. Open the project structure dialogue in IntelliJ: `File -> Project Structure`
3. Select `Artifacts`
4. Add a JAR file with dependencies
5. Click on the folder icon, select `CF_Translated` in the next dialogue and click `OK`
6. Click `OK` again; then, in the artifacts overview, select the Python library in the `Output Layout` tab and remove it
7. Click `Apply` and `OK`
8. Build the artifact: `Build -> Build Artifacts`
9. In `reinforcement_learning/utilities/utilities.py`, make sure that the variable `artifact_directory` is set to the
   folder that contains the compiled artifact and that `artifact_file_name` is set to the name of the JAR
   file (cf. image below: `artifact_directory = mtp_testing_jar` and `artifact_file_name = mtp-testing.jar`)

<p align="center">
  <img src="assets/out_directory.png" alt="example challenge" width="300"/>
</p>
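Step 9 connects the Python side to the compiled JAR, and the disclaimer at the top names JPype as the bridge between the two. The sketch below shows one way such a path could be assembled and put on the JVM classpath. It is an assumption, not the contents of `utilities.py`: the `out/artifacts` root is IntelliJ's default artifact output folder, and the two variable values are the example ones from step 9.

```python
from pathlib import Path

# Assumed values mirroring the example in step 9; adjust them to your own build.
ARTIFACT_ROOT = Path("out") / "artifacts"  # IntelliJ's default artifact output folder
artifact_directory = "mtp_testing_jar"
artifact_file_name = "mtp-testing.jar"


def artifact_path(root=ARTIFACT_ROOT, directory=artifact_directory, file_name=artifact_file_name):
    """Return the expected location of the compiled JAR."""
    return Path(root) / directory / file_name


def start_jvm_with_artifact(jar):
    """Start a JVM with the JAR on its classpath (needs JPype and a JDK 17)."""
    jar = Path(jar)
    if not jar.is_file():
        raise FileNotFoundError(f"Compiled artifact not found: {jar}")
    import jpype  # imported lazily so the path helpers work without JPype

    if not jpype.isJVMStarted():
        jpype.startJVM(classpath=[str(jar)])


if __name__ == "__main__":
    jar = artifact_path()
    if jar.is_file():
        start_jvm_with_artifact(jar)
    else:
        print(f"Build the artifact first; expected it at {jar}")
```

Checking for the file before starting the JVM gives a clearer error than a failed class lookup later, since a JVM can only be started once per Python process.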

## Weights & Biases

We used [Weights & Biases](https://wandb.ai/) (wandb) to document our training progress and results. All training files
in this project rely on wandb for logging, so the following steps require a wandb account and your wandb
[API key](https://docs.wandb.ai/quickstart):

1. Create a file named `wandb_key_file` in the `reinforcement_learning` directory
2. Paste your wandb API key into the newly created file
3. In the training files, adjust the wandb configuration to your wandb account (i.e. arguments such as `entity`,
   `project`, and `group` should be modified accordingly)
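The key file from step 1 can be read with the standard library and handed to wandb. The sketch below is illustrative only and does not reproduce how the project's training files load the key; `read_wandb_key` and `init_wandb_run` are hypothetical helpers, while `wandb.login(key=...)` and `wandb.init(entity=..., project=..., group=...)` are part of the wandb Python API.

```python
from pathlib import Path

# Location from step 1, relative to the project root.
KEY_FILE = Path("reinforcement_learning") / "wandb_key_file"


def read_wandb_key(key_file=KEY_FILE):
    """Read the API key, stripping surrounding whitespace and trailing newlines."""
    key = Path(key_file).read_text(encoding="utf-8").strip()
    if not key:
        raise ValueError(f"{key_file} is empty")
    return key


def init_wandb_run(entity, project, group, key_file=KEY_FILE):
    """Log in and start a run under your own account (values from step 3)."""
    import wandb  # imported lazily so read_wandb_key works without wandb installed

    wandb.login(key=read_wandb_key(key_file))
    return wandb.init(entity=entity, project=project, group=group)
```

Stripping the content matters because many editors append a newline when saving the key file.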

## Running the project

To run the individual parts of the final project pipeline, follow the steps outlined below.

> DISCLAIMER: the number of bugs used in pretraining, reinforcement learning, and the environment configuration
> **must** be the same.

> DISCLAIMER: generating the pretraining and RL training sets may take some time for *num_bugs > 6* and a large
> number of samples.
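Because the number of bugs is set in several places, a small check before starting a run can catch a mismatch early. The helper below is a hypothetical sketch; the stage names are placeholders, and you would pass whatever values your pretraining script, `train.py` and environment configuration actually use.

```python
def check_num_bugs(**num_bugs_by_stage):
    """Raise if the stages disagree on the number of bugs; return the shared value."""
    values = set(num_bugs_by_stage.values())
    if len(values) != 1:
        details = ", ".join(f"{stage}={n}" for stage, n in sorted(num_bugs_by_stage.items()))
        raise ValueError(f"num_bugs must be identical across stages, got: {details}")
    return values.pop()
```

For example, `check_num_bugs(pretraining=5, rl_training=5, environment=5)` returns `5`, while any differing value raises a `ValueError` listing all three settings.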

### Pretraining

#### Pretraining Sample Generation

Run the `reinforcement_learning/dataset_generators/pretraining_dataset_generation.py` script in the terminal or
execute the file in IntelliJ.
The generated dataset(s) will be saved as separate `.pkl` files in `data/training_sets/pretraining_training_sets`.
Each training set is identified by the number of bugs and by whether multiple actions are allowed. If multiple
actions are allowed, the training set contains samples in which more than one edge has to be removed from the
CF Matrix.
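The generated `.pkl` files can be inspected with Python's `pickle` module. The sketch below relies only on what is stated above (the output directory and the `.pkl` extension); the file naming scheme and the type of the stored objects are not documented here, so the hypothetical `load_training_sets` helper simply keys each dataset by its file name. As with any pickle, only load files you generated yourself, and make sure the libraries used to create the stored objects are importable.

```python
import pickle
from pathlib import Path

# Output directory named in the text above (relative to the project root).
PRETRAINING_DIR = Path("data") / "training_sets" / "pretraining_training_sets"


def load_training_sets(directory=PRETRAINING_DIR):
    """Load every .pkl file in `directory` into a dict keyed by file name."""
    datasets = {}
    for path in sorted(Path(directory).glob("*.pkl")):
        with path.open("rb") as fh:
            datasets[path.name] = pickle.load(fh)
    return datasets
```

Passing `Path("data") / "training_sets" / "rl_training_sets"` as `directory` works the same way for the RL training sets.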

#### Model Pretraining

Run the `reinforcement_learning/custom_torch_models/rl_network_pretraining.py` script in the terminal or execute
the file in IntelliJ.
The script loads the training dataset that matches the number of bugs and whether multiple actions are allowed.
After pretraining, the model is saved in `data/model_weights`.
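Step 2 of the RL training instructions asks for the path of the pretrained model. If several runs have written to `data/model_weights`, a small helper can pick the most recently modified file. This is a hypothetical sketch; the file names and extensions the pretraining script uses are not documented here, so the glob pattern is left configurable.

```python
from pathlib import Path

# Directory named in the text above (relative to the project root).
MODEL_WEIGHTS_DIR = Path("data") / "model_weights"


def latest_file(directory=MODEL_WEIGHTS_DIR, pattern="*"):
    """Return the most recently modified file matching `pattern`, or None if there is none."""
    candidates = [p for p in Path(directory).glob(pattern) if p.is_file()]
    if not candidates:
        return None
    return max(candidates, key=lambda p: p.stat().st_mtime)
```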

### Reinforcement Learning (RL)

The RL part of the project is split into two components: sample generation and training.

#### Reinforcement Learning Sample Generation

To generate RL samples, adjust the number of bugs and the number of samples in the main function of
`reinforcement_learning/dataset_generators/rl_trainingset_generation.py` and run the file. The generated training set
will be saved as a `.pkl` file in `data/training_sets/rl_training_sets`.

#### Reinforcement Learning Training

Reinforcement learning training is done in `reinforcement_learning/train.py`:

1. Set the path to your RL training set from the previous step in `global_config["training_set_path"]`
2. If you generated a pretraining set and pretrained a model, also adjust `global_config["pretrained_model_path"]`
3. ***Optional***: if you want to initialise the learner with the pretrained model's weights, set
   `global_config["pretraining"]` to `True`.
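The three settings above can be pictured as the following dictionary entries. This is a sketch, not the actual `global_config` in `train.py`, which may hold further keys: the path values are placeholders, and `validate_config` is a hypothetical helper that checks the referenced files exist before a long training run starts.

```python
from pathlib import Path

# Placeholder values; only the three key names come from the steps above.
global_config = {
    "training_set_path": "data/training_sets/rl_training_sets/<your_rl_training_set>.pkl",
    "pretrained_model_path": "data/model_weights/<your_pretrained_model>",
    "pretraining": False,  # True: initialise the learner with the pretrained weights
}


def validate_config(config):
    """Return a list of problems with the configured paths (empty if none)."""
    problems = []
    if not Path(config["training_set_path"]).is_file():
        problems.append(f"training set not found: {config['training_set_path']}")
    # The pretrained model path only matters when pretraining is enabled.
    if config.get("pretraining") and not Path(config["pretrained_model_path"]).exists():
        problems.append(f"pretrained model not found: {config['pretrained_model_path']}")
    return problems
```

Checking the pretrained model path only when `pretraining` is `True` mirrors step 3: without pretraining, a stale or missing model path is harmless.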

### Connect Four

For Connect Four please refer to the [Connect Four](connect-four.md) documentation.
