forked from decentralized-identity/universal-resolver


Commit 93cdc0f: "Added docu for dev-system, branching, releasing, ci/cd"

Committed by Philipp Potisk on Nov 29, 2019 (1 parent: 6b1a3ac). 5 changed files with 125 additions and 0 deletions.


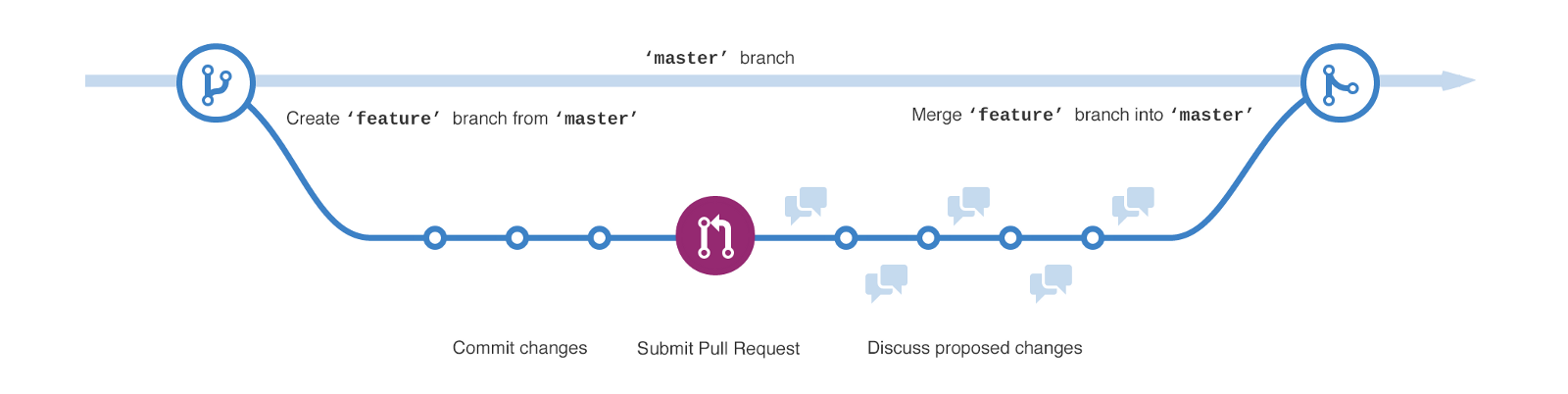

The goals for our branching strategy are:

* The master branch should be deployable at any time. This implies a stable build and the assurance that the core functionality is available whenever the master branch is cloned.
* The master branch should stay active. Since multiple developers work on the code collaboratively, we encourage merging as frequently as possible. This tends to expose issues at an early stage, when they are easier to fix. It also makes clear that the master branch is the one newcomers should clone.
* To avoid wasting time, the branching strategy should be kept as simple as possible.

Among the various branching strategies available, we have chosen *GitHub Flow*, a lightweight branching strategy promoted by GitHub. Details can be found here: https://guides.github.com/introduction/flow/

The following recipe briefly describes the typical steps developers should follow when updating the code base:

1. ***Create a branch***: When implementing a new feature, an improvement or a bug fix, create a new branch from the master branch. To keep the Git repository organized, new branches should go under `topic/<your-branch-name>`, where the branch name reflects the intention of the branch. If there is a corresponding GitHub issue, use the issue name as part of the branch name.
2. ***Commit some code***: Add your changes to the new branch and commit regularly with descriptive commit messages. This builds up a transparent history of your work and makes it easier to roll back changes.
3. ***Open a Pull Request (PR)***: Once your changes are complete (or whenever you want early feedback), open a PR against the master branch. A PR starts a discussion with the maintainer, who will review your code at this point. In addition, the [[CI/CD process|Continuous-Integration-and-Delivery]] is kicked off and your code is deployed to the dev-system.
4. ***Discuss, review code and deployment***: Wait for feedback from the maintainer and check the deployment on the dev-system. If you are contributing a new driver, the maintainer will also add the deployment scripts as part of this PR. You may be asked to make some changes to your code. The goal of this step is that the changes can be safely merged into the master branch and that the updated Universal Resolver runs smoothly in the dev environment.
5. ***Merge into the master branch***: Once all parties involved in the discussion are satisfied, the maintainer merges the PR into the master branch and closes it. You are then free to delete your branch, because all of its changes have been incorporated into master.
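The naming rule from step 1 can be sketched as a small helper. This is a hypothetical Python function and is not part of the repository. Only the `topic/` prefix comes from this guide. The slug rules (lower-casing, and collapsing runs of non-alphanumeric characters into hyphens) are assumptions.

```python
import re


def topic_branch_name(name: str) -> str:
    """Return a branch name of the form topic/<slug>.

    Hypothetical helper: the topic/ prefix follows this guide, the slug
    rules below are an assumption.
    """
    # Lower-case the name (e.g. a GitHub issue title) and collapse every run
    # of characters other than a-z and 0-9 into a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", name.lower()).strip("-")
    if not slug:
        raise ValueError("name must contain at least one letter or digit")
    return f"topic/{slug}"


if __name__ == "__main__":
    # Prints: topic/add-driver-for-did-web
    print(topic_branch_name("Add driver for did:web"))
```

You can pass the result to `git checkout -b <branch> master`, which creates the branch from master regardless of what is currently checked out.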


This section describes the building blocks and ideas behind the implemented CI/CD pipeline. For issues or requests concerning CI/CD, please consult the maintainers of the repository directly.

## Intro

The CI/CD pipeline helps achieve the following goals:

* Detection of problems as early as possible
* Short and robust release cycles
* Avoidance of repetitive, manual tasks
* Increased software quality

After every code change, the CI/CD pipeline automatically builds all software packages/Docker containers. Once the containers are built, they are automatically deployed to the dev-system. This way, both build and deployment issues are discovered immediately. Once the freshly built containers are deployed, automated tests are run to verify the software and detect functional issues.

## Building Blocks

The CI/CD pipeline is built with GitHub Actions. The workflow (workflow file: https://github.com/philpotisk/universal-resolver/blob/master/.github/workflows/universal-resolver-ws.yml) runs after every push to master and on every PR against master.

The workflow consists of several steps. Each step is implemented as a Docker container that performs the relevant actions. Currently, the two main steps are:

1. Building the resolver
2. Deploying the resolver

The build step uses the container https://github.com/philpotisk/github-action-docker-build-push, which generically builds a Docker image and pushes it to Docker Hub at https://hub.docker.com/u/universalresolver.

The second step takes the image and deploys it (create or update) to the configured Kubernetes cluster. The Docker container performing this step can be found here: https://github.com/philpotisk/github-action-deploy-eks
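The trigger rule described above (every push to master, every PR against master) can be modeled as a simple predicate. This is a hedged Python sketch, not the workflow file itself. The function name and parameters are hypothetical. In practice, GitHub Actions does the filtering based on the `on:` section of universal-resolver-ws.yml.

```python
def workflow_should_run(event: str, target_branch: str,
                        watched_branch: str = "master") -> bool:
    """Simplified model of when the CI/CD workflow is triggered.

    Assumption: the workflow listens to "push" and "pull_request" events,
    both filtered to a single branch. For "push", target_branch is the
    branch that received the push; for "pull_request", it is the base
    branch the PR targets. Other events never trigger the workflow here.
    """
    if event not in ("push", "pull_request"):
        return False
    return target_branch == watched_branch


if __name__ == "__main__":
    print(workflow_should_run("push", "master"))              # True
    print(workflow_should_run("push", "topic/my-feature"))    # False
    print(workflow_should_run("pull_request", "master"))      # True
    # During a release the watched branch is switched, e.g. to 3.1.x.
    print(workflow_should_run("push", "3.1.x", watched_branch="3.1.x"))  # True
```

In this model, a push to a topic branch alone does not start the workflow, but opening a PR from that branch against master does.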

## Steps of the CI/CD Workflow

1. A developer pushes code to GitHub.
2. GitHub Actions (GHA) is triggered by the "push" event, clones the repository and runs the workflow for every container:
   * (a) Docker build
   * (b) Docker push (the Docker image goes to Docker Hub)
   * (c) Deploy to Kubernetes
   * (d) Run a test container
3. Manual and automated feedback
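The per-container flow in step 2 can be sketched as a fail-fast sequence: a failing step stops the remaining steps for that container. This mirrors the default behavior of GitHub Actions, where a failed step causes the subsequent steps of the job to be skipped. This is an illustrative Python model only. The step names and the example failure are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# A step is a display name plus a function that takes the container name and
# returns True on success. In the real pipeline each step is a Docker
# container run by GitHub Actions; plain functions stand in for them here.
Step = Tuple[str, Callable[[str], bool]]


def run_pipeline(container: str, steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run the steps for one container in order and stop at the first failure.

    Returns whether all steps succeeded and the names of the steps executed.
    """
    executed: List[str] = []
    for name, action in steps:
        executed.append(name)
        if not action(container):
            return False, executed  # later steps (e.g. the test) are skipped
    return True, executed


def run_for_all(containers: List[str], steps: List[Step]) -> Dict[str, bool]:
    """Run the pipeline once per container and report success per container."""
    return {c: run_pipeline(c, steps)[0] for c in containers}


if __name__ == "__main__":
    def succeed(container: str) -> bool:
        return True

    def fail_for_btcr(container: str) -> bool:
        # Hypothetical failure: the deployment of one driver breaks.
        return container != "driver-did-btcr"

    steps: List[Step] = [
        ("docker build", succeed),
        ("docker push", succeed),
        ("deploy to Kubernetes", fail_for_btcr),
        ("run test container", succeed),
    ]
    print(run_for_all(["uni-resolver-web", "driver-did-btcr"], steps))
    # {'uni-resolver-web': True, 'driver-did-btcr': False}
```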


This guide explains the release process of the Universal Resolver.

## Version Number

Versioning follows Semantic Versioning as described at https://semver.org/.
Each release is identified by a version number composed of three parts, MAJOR.MINOR.PATCH. Increment the:

1. MAJOR version for incompatible API changes,
2. MINOR version when adding functionality in a backwards compatible manner,
3. PATCH version when making backwards compatible bug fixes.

See the link above for further details.
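The bump rules above can be expressed as a short, simplified Python sketch. The helper names are hypothetical and are not part of the repository. Comparing parsed versions as integer tuples also shows why versions should not be compared as plain strings.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Parse a plain MAJOR.MINOR.PATCH string.

    Simplified: pre-release and build suffixes (e.g. 3.1.0-rc.1) are not
    supported, and leading zeros are not rejected as SemVer would require.
    """
    parts = version.split(".")
    if len(parts) != 3 or not all(p.isdecimal() for p in parts):
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {version!r}")
    major, minor, patch = (int(p) for p in parts)
    return major, minor, patch


def bump(version: str, part: str) -> str:
    """Return the next version after an incompatible change ("major"),
    a backwards compatible feature ("minor") or a bug fix ("patch")."""
    major, minor, patch = parse_version(version)
    if part == "major":
        return f"{major + 1}.0.0"
    if part == "minor":
        return f"{major}.{minor + 1}.0"
    if part == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown part: {part!r}")


if __name__ == "__main__":
    print(bump("3.1.0", "patch"))  # 3.1.1
    print(bump("3.1.4", "minor"))  # 3.2.0
    print(bump("3.1.4", "major"))  # 4.0.0
    # Tuples compare numerically part by part, so 3.10.0 sorts after 3.9.1.
    print(parse_version("3.10.0") > parse_version("3.9.1"))  # True
```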

## Release Process

Major and minor releases are planned and scheduled according to the [roadmap](https://github.com/philpotisk/universal-resolver/wiki/Roadmap-Universal-Resolver). Patch versions may be released spontaneously to ship urgent bug fixes.

For each minor release there is a dedicated branch following the naming pattern MAJOR.MINOR.x (e.g. 3.1.x). In this branch the code base for that particular release is hardened. The code has to pass static code analysis, which detects potential quality and security issues. Furthermore, the code is manually deployed to a staging/production-like environment, where manual and automated tests take place. Once the release meets the desired standards, it is packaged, published on the GitHub releases page and announced at (TODO: wiki/whats-new-in-universal-resolver-<MAJOR>.x).

Steps for releasing a new version:

1. Create a release branch from master (with master checked out, since `git checkout -b` branches off the current commit):

   `git checkout -b 3.1.x`

   `git push --set-upstream origin 3.1.x`

   Open universal-resolver/.github/workflows/universal-resolver-ws.yml and set CONTAINER_TAG to the specific release number, for example 3.1.0.
   In the same file, also change the branch the CI/CD workflow listens on to 3.1.x. In the future, once a staging system is in place, KUBE_KONFIG_DATA will also need to be changed so that the deployment goes to the staging system rather than to the dev-system.

2. Run the SonarQube analysis and review the results in the online dashboard. Consider all reported issues and make sure that no critical issues are flagged before releasing the software.

3. After the build job has run, the Docker image should be available on Docker Hub. You can check the website or simply pull the image by its tag: `docker pull phil21/uni-resolver-web:3.1.0`

4. Tag the release:

   `git tag v3.1.0.RELEASE`

   `git push origin --tags`

   Afterwards, the release is automatically shown on GitHub under the releases.
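The naming used in the steps above can be derived mechanically from the version number. The following Python sketch is hypothetical: the function and class names are not part of the repository. It only assembles the commands shown in this guide and prints them instead of running them. It does not cover the manual steps (editing CONTAINER_TAG and the watched branch, reviewing SonarQube results). It also assumes, beyond what the guide states, that patch releases reuse the existing MAJOR.MINOR.x branch, so the branch is only created for a .0 release.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReleasePlan:
    branch: str                 # MAJOR.MINOR.x release branch
    container_tag: str          # value for CONTAINER_TAG in the workflow file
    git_tag: str                # tag pushed in step 4
    commands: List[List[str]]   # commands from this guide, in order


def release_plan(version: str) -> ReleasePlan:
    """Derive branch, tags and commands for a MAJOR.MINOR.PATCH release."""
    parts = version.split(".")
    if len(parts) != 3 or not all(p.isdecimal() for p in parts):
        raise ValueError(f"expected MAJOR.MINOR.PATCH, got {version!r}")
    major, minor, patch = parts
    branch = f"{major}.{minor}.x"
    git_tag = f"v{version}.RELEASE"
    commands: List[List[str]] = []
    if int(patch) == 0:
        # Assumption: the release branch is created once per minor version (step 1).
        commands += [["git", "checkout", "-b", branch],
                     ["git", "push", "--set-upstream", "origin", branch]]
    commands += [["docker", "pull", f"phil21/uni-resolver-web:{version}"],  # step 3
                 ["git", "tag", git_tag],                                   # step 4
                 ["git", "push", "origin", "--tags"]]
    return ReleasePlan(branch, version, git_tag, commands)


if __name__ == "__main__":
    for cmd in release_plan("3.1.0").commands:
        # Printed, not executed; subprocess.run(cmd, check=True) would run it.
        print(" ".join(cmd))
```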


# Development System of the Universal Resolver

The dev-system (sandbox installation), which runs the latest code base of the Universal Resolver project, is hosted at:

http://dev.uniresolver.io

The drivers are exposed via subdomains named after their DID methods, for example: btcr.dev.uniresolver.io

> Note for driver developers: The subdomains are generated automatically from the Docker image tag, e.g. driver-did-btcr, which consequently must contain the DID method name (pattern: driver-did-<DID method name>).
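The naming convention in the note can be illustrated with a small Python sketch that maps an image name to the driver's host name. It is hypothetical. The function name, the example image `universalresolver/driver-did-btcr:latest`, and the handling of registry prefixes and tags are all assumptions. The real subdomains are generated by the deployment tooling.

```python
import re

# DID method names consist of lower-case letters and digits (DID Core syntax).
_DRIVER_IMAGE = re.compile(r"driver-did-([a-z0-9]+)")


def driver_host(image: str, base_domain: str = "dev.uniresolver.io") -> str:
    """Derive a driver's host name from its Docker image name.

    Sketch of the convention described above; the actual generation is
    done by the deployment scripts and may differ in detail.
    """
    # Strip an optional registry/namespace prefix and an optional :tag suffix.
    name = image.rsplit("/", 1)[-1].split(":", 1)[0]
    match = _DRIVER_IMAGE.fullmatch(name)
    if match is None:
        raise ValueError(f"{image!r} does not follow driver-did-<DID method name>")
    return f"{match.group(1)}.{base_domain}"


if __name__ == "__main__":
    print(driver_host("universalresolver/driver-did-btcr:latest"))  # btcr.dev.uniresolver.io
```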

DIDs can be resolved by calling the resolver:

http://dev.uniresolver.io/1.0/identifiers/did:btcr:xz35-jznz-q6mr-7q6

or by directly accessing the driver's endpoint:

http://btcr.dev.uniresolver.io/1.0/identifiers/did:btcr:xz35-jznz-q6mr-7q6
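Both URL forms above follow the same pattern, so they can be built from the DID itself. Below is a minimal Python sketch using only the standard library. The helper names are hypothetical, the sketch assumes the endpoints return JSON, and the dev-system host may not be reachable from every network.

```python
import json
import urllib.request
from typing import Any


def resolve_url(did: str, via_driver: bool = False,
                base_domain: str = "dev.uniresolver.io") -> str:
    """Build the resolution URL for a DID on the dev-system.

    With via_driver=True the request goes straight to the driver's subdomain,
    which is named after the DID method (did:<method>:<method-specific-id>).
    """
    parts = did.split(":", 2)
    if len(parts) != 3 or parts[0] != "did" or not parts[1]:
        raise ValueError(f"not a DID: {did!r}")
    host = f"{parts[1]}.{base_domain}" if via_driver else base_domain
    return f"http://{host}/1.0/identifiers/{did}"


def resolve(did: str, via_driver: bool = False, timeout: float = 10.0) -> Any:
    """Fetch and decode the JSON response (requires network access)."""
    with urllib.request.urlopen(resolve_url(did, via_driver), timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    did = "did:btcr:xz35-jznz-q6mr-7q6"
    print(resolve_url(did))                   # resolver endpoint
    print(resolve_url(did, via_driver=True))  # driver endpoint
```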

The software is updated automatically on every commit to the master branch and on every PR against it. See CI/CD for more details.

Currently, the system is deployed in the AWS cloud using the Elastic Kubernetes Service (EKS). Note that AWS is not required for hosting the resolver: any environment that supports Docker Compose or Kubernetes can run an instance of the Universal Resolver.

## AWS Architecture

This picture illustrates the AWS architecture for hosting the Universal Resolver as well as the traffic flow through the system.

<p align="center"><img src="figures/aws-architecture.png" width="75%"></p>

The entry point to the system is the internet-facing Application Load Balancer (ALB), which sits at the edge of the AWS cloud and is bound to the DNS name “dev.uniresolver.io”. When DIDs are resolved, traffic flows through the ALB to the resolver. Based on the configuration of each DID method, the resolver calls the corresponding DID driver (the typical scenario) or may call another HTTP endpoint (another resolver, or the DLT directly if it supports HTTP). To improve performance, blockchain nodes may also be added to the deployment, as sketched for Driver C.
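The two public entry points of the dev-system (the resolver at dev.uniresolver.io and the drivers at their method subdomains) amount to host-based routing. The Python sketch below is a simplified, hypothetical model of that routing decision, not the ALB or ingress configuration. The service names `uni-resolver-web` and `driver-did-btcr` are illustrative.

```python
from typing import Mapping


def route(host_header: str, drivers: Mapping[str, str],
          base_domain: str = "dev.uniresolver.io",
          resolver_service: str = "uni-resolver-web") -> str:
    """Pick the backend service for an incoming request by its Host header.

    Simplified, hypothetical model of host-based routing at the ALB:
    the base domain goes to the resolver, <method>.<base domain> goes to
    the driver registered for that DID method.
    """
    host = host_header.lower().split(":", 1)[0]  # ignore an explicit port
    if host == base_domain:
        return resolver_service
    suffix = "." + base_domain
    if host.endswith(suffix):
        method = host[: -len(suffix)]
        if method in drivers:
            return drivers[method]
    raise LookupError(f"no route for host {host!r}")


if __name__ == "__main__":
    drivers = {"btcr": "driver-did-btcr"}
    print(route("dev.uniresolver.io", drivers))       # uni-resolver-web
    print(route("btcr.dev.uniresolver.io", drivers))  # driver-did-btcr
```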

The Kubernetes cluster spans multiple Availability Zones (AZs), which are essential for fault tolerance and high availability (HA) of the system. If parts of the system fail, the healthy parts take over operations, so little or no downtime is to be expected.

If containers, such as DID drivers, are added or removed, the ALB ingress controller (https://kubernetes-sigs.github.io/aws-alb-ingress-controller/) takes care of notifying the ALB. This mechanism keeps the ALB aware of the system state, so traffic routes stay healthy.
The DNS service Route 53 is updated by means of https://github.com/kubernetes-sigs/external-dns. Further details about automated system updates are described in CI/CD.
