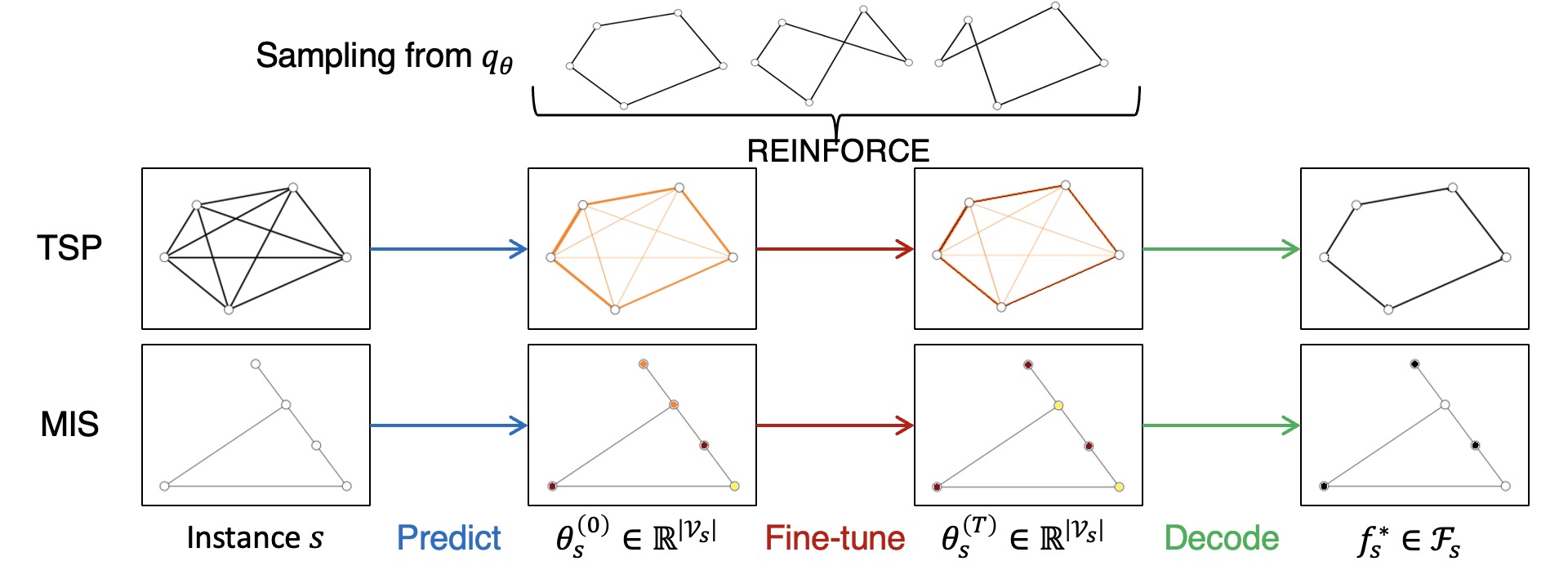

A meta-learner for large-scale combinatorial optimization problems including the traveling salesman problem (TSP) and the maximum independent set problem (MIS).

@inproceedings{qiu2022dimes,

title={{DIMES}: A differentiable meta solver for combinatorial optimization problems},

author={Ruizhong Qiu and Zhiqing Sun and Yiming Yang},

booktitle={Advances in Neural Information Processing Systems},

volume={35},

year={2022}

}The code for DIMES-TSP is in the directories TSP/TSP-KNN and TSP/TSP-Full. TSP-KNN employs KNN edge pruning, while TSP-Full runs on the full graph.

We use

Our code was tested under the following dependencies:

- GCC 7.5.0 on Ubuntu 18.04

- CUDA 11.0

- Python 3.7.10 (packaged by

conda-forge) - PyTorch 1.7.0

- PyTorch Scatter 2.0.7

- PyTorch Sparse 0.6.9

- PyTorch Cluster 1.5.9

- PyTorch Geometric 2.0.4

Notice: According to issue #2 and issue #4, some other versions of PyTorch Scatter can be incompatible with our TSP sampler.

For your reference, we used the following commands to install dependencies:

pip install --no-index torch-scatter==2.0.7 -f https://pytorch-geometric.com/whl/torch-1.7.0+cu110.html

pip install --no-index torch-sparse==0.6.9 -f https://pytorch-geometric.com/whl/torch-1.7.0+cu110.html

pip install --no-index torch-cluster==1.5.9 -f https://pytorch-geometric.com/whl/torch-1.7.0+cu110.html

pip install torch-geometric==2.0.4Notice: If you see the following warning when running our code, just ignore it.

[W OperatorEntry.cpp:111] Warning: Registering a kernel (registered by RegisterOperators) for operator torch_scatter::segment_sum_csr for dispatch key (catch all) that overwrote a previously registered kernel with the same dispatch key for the same operator. (function registerKernel)

Before installing our TSP sampler, please ensure PyTorch Scatter 2.0.7 is already installed.

Install our TSP sampler:

cd TSP

pip install ./torch_samplingNotice: If the installer fails to find -l_segment_csr_cpu or -l_segment_csr_cuda, you may have to add their path to torch_sampling/setup.py manually. Please refer to issue #4 for detail. We thank @L-fu-des22 for sharing the experience about this issue.

To reproduce our results, please run:

cd TSP/TSP-KNN

./tsp{N}_test_{decoder}.sh tsp{N}where decoder can be G / AS_G / S / AS_S / MCTS / AS_MCTS.

To train a model from scratch, please run:

cd TSP/TSP-KNN

./tsp{N}_train.shOutput (parameters of trained models) will be saved in folder output/.

The output will have a prefix generated automatically. We call this prefix save_name. For example, save_name of the file output/tsp500~net120.pt is tsp500. A generated save_name is like dimes-tsp{N}-knn{K}@{timestamp}, where a timestamp is to ensure uniqueness of filenames.

To test a trained model, please run:

cd TSP/TSP-KNN

./tsp{N}_test_{decoder}.sh {save_name}where save_name is the one generated during training, and decoder can be G / AS_G / S / AS_S / MCTS / AS_MCTS.

The test instances are originally provided by Fu et al. (2021). We have reformatted them for our code. Reformatted test instances are provided in folder input/.

Our trained models tsp{N}_net*.pt are in folder output/.

The predicted heatmaps of DIMES+MCTS and DIMES+AS+MCTS are in folder output/.

Notice: The TSP-10000 heatmaps are large after unzipping (around 900MB per graph).

- EAN (Deudon et al., 2018): https://github.com/MichelDeudon/encode-attend-navigate

- AM (Kool et al., 2019): https://github.com/wouterkool/attention-learn-to-route

- GCN (Joshi et al., 2019): https://github.com/chaitjo/graph-convnet-tsp

- POMO (Kwon et al., 2020): https://github.com/yd-kwon/POMO

- EAS (Hottung et al., 2022): https://github.com/ahottung/EAS

- Att-GCN (Fu et al., 2021): https://github.com/Spider-SCNU/TSP (We use their CPU-version MCTS code.)

The code for DIMES-MIS is in the directory MIS. Our code is adapted from the code of mis-benchmark-framework

Before running our code, please install our MIS sampler:

cd MIS

conda env create -f environment.ymlcd MIS

bash scripts/solve_intel_dimes_er.sh

bash scripts/solve_intel_dimes_sat.shThe evaluation data can be found at MIS/data.

For SATLIB (https://www.cs.ubc.ca/~hoos/SATLIB/Benchmarks/SAT/CBS/descr_CBS.html), since the converted graphs are quite huge and the graphs are fixed. We only provide the file names for the test split, while the rest can be used for training.

For ER graphs, since the graphs are randomly generated, we provide the gpickle version of the test graphs that we used for evaluation (in a shared Google drive link). The training graphs can be generated with another call of

python -u main.py gendata \

random \

None \

data_er/train \

--model er \

--min_n 700 \

--max_n 800 \

--num_graphs 16384 \

--er_p 0.15or

python -u main.py gendata \

random \

None \

data_er_large/train \

--model er \

--min_n 9000 \

--max_n 11000 \

--num_graphs 4096 \

--er_p 0.02