diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000..4c8a046

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,23 @@

+node_modules

+typings

+*.pyc

+.DS_Store

+package-lock.json

+mtp-ai-turing-tumble.iml

+.idea/

+out

+reinforcement_learning/wandb

+reinforcement_learning/tmp

+/out/

+/reinforcement_learning/tmp/

+.idea/*

+reinforcement_learning/wandb/*

+out/*

+reinforcement_learning/tmp/*

+*/META-INF/*

+MANIFEST.MF

+wandb_key_file

+LogFile.txt

+State.txt

+/reinforcement_learning/dataset_generators/rl_training_set.csv

+/reinforcement_learning/environments/envs/bugbit_env_backup.py

diff --git a/LICENSE b/LICENSE

index 7c8f7c8..b621ac1 100644

--- a/LICENSE

+++ b/LICENSE

@@ -18,4 +18,4 @@ FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

-SOFTWARE.

+SOFTWARE.

\ No newline at end of file

diff --git a/README.md b/README.md

new file mode 100644

index 0000000..d45d938

--- /dev/null

+++ b/README.md

@@ -0,0 +1,64 @@

+# European Master Team Project - AI Turing Tumble

+

+> This repository holds the code that was developed during the European Master Team Project

+> in the spring semester of 2022 (EMTP 22). The project was supervised by

+> [Dr. Christian Bartelt](https://www.uni-mannheim.de/en/ines/about-us/researchers/dr-christian-bartelt/) and

+> [Jannik Brinkmann](https://www.linkedin.com/in/brinkmann-jannik/). The project team was

+> composed of students from the [Babeș-Bolyai University](https://www.ubbcluj.ro/en/)

+> in Cluj-Napoca, Romania, and the [University of Mannheim](https://www.uni-mannheim.de/), Germany.

+

+## Introduction

+

+In the game Turing Tumble, players construct mechanical computers that use the flow of marbles along a board to solve

+logic problems. As the board and its parts are Turing complete, which means that they can be used to express any

+mathematical function, an intelligent agent taught to solve a Turing Tumble challenge essentially learns how to write

+code according to a given specification.

+

+Following this logic, we taught an agent how to write a simple programme according to a minimal specification, using

+an abstracted version of the Turing Tumble board as reinforcement learning training environment. This is related to

+the emerging field of programme synthesis, as is for example applied in

+[GitHub’s CoPilot](https://github.com/features/copilot).

+

+## Participants

+

+### Babeș-Bolyai University

+

+* [Tudor Esan](https://github.com/TudorEsan) - B.Sc. Computer Science

+* [Raluca Diana Chis](https://github.com/RalucaChis) - M.Sc. Applied Computational Intelligence

+

+### University of Mannheim

+

+* [Roman Hess](https://github.com/romanhess98) - M.Sc. Data Science

+* [Timur Carstensen](https://github.com/timurcarstensen) - M.Sc. Data Science

+* [Julie Naegelen](https://github.com/jnaeg) - M.Sc. Data Science

+* [Tobias Sesterhenn](https://github.com/Tsesterh) - M.Sc. Data Science

+

+## Contents of this repository

+

+The project directory is organised in the following way:

+

+| Path | Role |

+|---------------------------|----------------------------------------------|

+| `docs/` | Supporting material to document the project |

+| `reinforcement_learning/` | Everything related to Reinforcement Learning |

+| `src/` | Java sources |

+| `ttsim/` | Source Code of the Turing Tumble Simulator |

+

+## Weights & Biases (wandb)

+We used [Weights & Biases](https://wandb.ai/) to log the results of our training:

+1. [Reinforcement Learning](https://wandb.ai/mtp-ai-board-game-engine/ray-tune-bugbit)

+2. [Pretraining](https://wandb.ai/mtp-ai-board-game-engine/Pretraining)

+3. [Connect Four](https://wandb.ai/mtp-ai-board-game-engine/connect-four)

+

+## Credits

+

+We used third-party software to implement the project. Namely:

+

+- **BugPlus** - [Dr. Christian Bartelt](https://www.uni-mannheim.de/en/ines/about-us/researchers/dr-christian-bartelt/)

+- **Turing Tumble Simulator** - [Jesse Crossen](https://github.com/jessecrossen/ttsim)

+

+## Final Project Presentation

+

+Link to video:

+[](https://www.youtube.com/watch?v=w501gf2MLFM)

+

diff --git a/docs/README.md b/docs/README.md

new file mode 100644

index 0000000..4fdf31c

--- /dev/null

+++ b/docs/README.md

@@ -0,0 +1,111 @@

+# Setup

+

+> **DISCLAIMER: this project is meant to be run on Linux or macOS machines. Windows is not supported

+> due to a scheduler conflict between JPype and Ray.**

+

+## Prerequisites

+

+1. An x86 machine running Linux or macOS (tested on Ubuntu 20.04 and macOS Monterey)

+2. A working, clean (i.e new / separate) conda ([miniconda3](https://docs.conda.io/en/latest/miniconda.html)

+ or [anaconda3](https://docs.anaconda.com/anaconda/install/)) installation

+3. [IntelliJ IDEA](https://www.jetbrains.com/idea/) (CE / Ultimate)

+

+## Installing dependencies

+

+1. Create and activate a new conda environment for python3.8 (`conda create -n mtp python=3.8` & `conda activate mtp`)

+2. Navigate to the project root and run `pip install -r requirements.txt`

+3. Run `pip install "ray[all]"` to install all dependencies for Ray

+

+## Python Setup

+

+1. In IntelliJ, open the project structure dialogue: `File -> Project Structure`

+2. In Modules, select the project and click `Add` and select `Python`

+3. In the `Python` tab, add a new Python interpreter by clicking on `...`

+4. In the newly opened dialogue, click on `+` and click `Add Python SDK...`

+5. In the dialogue, click on `Conda environment` and select the existing environment we created in the previous step

+6. Select the newly registered interpreter as the project interpreter and close out of the dialogue after

+ clicking `apply`

+7. Still in `Project Structure`, navigate to `Modules` and select the project: select the directories `src`

+ and `reinforcement_learning` and mark them as `Sources`. Click `Apply` and close out of the dialogue.

+

+## Compiling the project

+

+1. Make sure that the SDK and Language Level in the Project tab are set to 17 (i.e. openjdk-17)

+2. Open the Project Structure Dialogue in IntelliJ `File -> Project Structure`

+3. Select `Artifacts`

+4. Add a JAR file with dependencies

+5. Click on the folder icon and select `CF_Translated` in the next dialogue and click OK

+6. Click OK again and then in the artifacts overview, in the Output Layout tab, select the Python library and remove it

+7. Click on apply and OK

+8. Build the artifact: `Build -> Build Artifacts`

+9. In `reinforcement_learning/utilities/utilities.py`, make sure that the variable `artifact_directory` is set to the

+ folder that contains the compiled artifact. The variable `artifact_file_name` should be set to the name of the jar

+ file. (cf. image below: `artifact_directory = mtp_testing_jar` and `artifact_file_name = mtp-testing.jar`)

+

+

+

+

+## Weights & Biases

+

+We used [Weights & Biases](https://wandb.ai/) (wandb) to document our training progress and results. All training files

+in this project rely on wandb for logging. For the following you will need a wandb account and your wandb

+[API key](https://docs.wandb.ai/quickstart):

+

+1. Create a file named `wandb_key_file` in the `reinforcement_learning` directory

+2. Paste your wandb API key in the newly created file

+3. In the training files, adjust the wandb configuration to your wandb account (i.e. arguments such as `entity`,

+ `project`, and `group` should be modified accordingly)

+

+## Running the project

+

+> DISCLAIMER: the number of bugs used in pretraining, reinforcement learning, and the environment configuration **must**

+> be the same

+> To run the individual parts of the final project pipeline, follow the steps outlined below.

+

+> DISCLAIMER: the generation of Pretraining and RL training sets may take some time for *num_bugs > 6* and a large

+> number

+> of samples.

+

+### Pretraining

+

+#### Pretraining Sample Generation

+

+Run the `reinforcement_learning/dataset_generators > pretraining_dataset_generation.py` script in the terminal or

+execute the file in IntelliJ.

+The generated dataset(s) will be saved in `data/training_sets/pretraining_training_sets` as different `.pkl` files.

+Each training set is identified by the number of bugs and whether multiple_actions are allowed or not. If multiple

+actions

+are allowed, this means that in the training set there are samples where more then one edge have to be removed from the

+CF Matrix.

+

+#### Model Pretraining

+

+Run the `reinforcement_learning/custom_torch_models > rl_network_pretraining.py` script in the terminal or execute

+the file in IntelliJ.

+The script loads the created training dataset depending on the number of bugs and whether multiple actions are allowed.

+After the pretraining, the model is saved in `data/model_weights`.

+

+### Reinforcement Learning (RL)

+

+The RL part of the project is split up into two components: sample generation and training.

+

+#### Reinforcement Learning Sample Generation

+

+To generate RL samples, adjust the number of bugs and number of samples in the main function of

+`reinforcement_learning/dataset_generators > rl_trainingset_generation.py` and run the file. The generated training set

+will be saved

+as a `.pkl` file in `data/training_sets/rl_training_sets`.

+

+#### Reinforcement Learning Training

+

+Reinforcement learning training is done in `reinforcement_learning > train.py`:

+

+1. Set the path to your RL training set from the previous step in `global_config["training_set_path]`

+2. If you did generate a pretraining set and did pretrain, also adjust `global_config["pretrained_model_path"]`

+3. ***Optional***: if you want to initialise the learner with the pretrained model's weights, set the config parameter

+ in `global_config["pretraining"]` to `True`.

+

+### Connect Four

+

+For Connect Four please refer to the [Connect Four](connect-four.md) documentation.

diff --git a/docs/assets/Example_Challenge.png b/docs/assets/Example_Challenge.png

new file mode 100644

index 0000000..f1da239

Binary files /dev/null and b/docs/assets/Example_Challenge.png differ

diff --git a/docs/assets/TT_architecture_diagram.png b/docs/assets/TT_architecture_diagram.png

new file mode 100644

index 0000000..1b841e2

Binary files /dev/null and b/docs/assets/TT_architecture_diagram.png differ

diff --git a/docs/assets/TT_env_subdiagram.png b/docs/assets/TT_env_subdiagram.png

new file mode 100644

index 0000000..705ea77

Binary files /dev/null and b/docs/assets/TT_env_subdiagram.png differ

diff --git a/docs/assets/TT_transl_diagram.png b/docs/assets/TT_transl_diagram.png

new file mode 100644

index 0000000..d994ac8

Binary files /dev/null and b/docs/assets/TT_transl_diagram.png differ

diff --git a/docs/assets/blue_bit.svg b/docs/assets/blue_bit.svg

new file mode 100644

index 0000000..b73941a

--- /dev/null

+++ b/docs/assets/blue_bit.svg

@@ -0,0 +1,64 @@

+

+

+

+

+

+ image/svg+xml

+

+

+

+

+

+

diff --git a/docs/assets/blue_bits.svg b/docs/assets/blue_bits.svg

new file mode 100644

index 0000000..0c02024

--- /dev/null

+++ b/docs/assets/blue_bits.svg

@@ -0,0 +1 @@

+Control OutData Out Data In Control In 00 0 0 0 1 0 0 In pins Out pins Left 1 Right 1 Left 2 Right 2 In 1 In 2 00 0 0 0 1 0 0 In pins Out pins

+

+

+

+ image/svg+xml

+

+

+

+

+

+

diff --git a/docs/assets/green_edges.svg b/docs/assets/green_edges.svg

new file mode 100644

index 0000000..e54b386

--- /dev/null

+++ b/docs/assets/green_edges.svg

@@ -0,0 +1 @@

+

+

+

+

+ image/svg+xml

+

+

+

+

+

+

diff --git a/docs/assets/orange_edge.svg b/docs/assets/orange_edge.svg

new file mode 100644

index 0000000..4c5b8fa

--- /dev/null

+++ b/docs/assets/orange_edge.svg

@@ -0,0 +1,124 @@

+

+

+

+

+

+ image/svg+xml

+

+

+

+

+

+

diff --git a/docs/assets/out_directory.png b/docs/assets/out_directory.png

new file mode 100644

index 0000000..83c55ee

Binary files /dev/null and b/docs/assets/out_directory.png differ

diff --git a/docs/assets/physical_board.png b/docs/assets/physical_board.png

new file mode 100644

index 0000000..27f82b8

Binary files /dev/null and b/docs/assets/physical_board.png differ

diff --git a/docs/assets/spec.svg b/docs/assets/spec.svg

new file mode 100644

index 0000000..87ae9be

--- /dev/null

+++ b/docs/assets/spec.svg

@@ -0,0 +1 @@

+00 1 1 1 1 0 1 InputsOutputs 01 2 bits 4 bits 3 bits v alid invalid

+

+

+## Table of contents

+

+#### 1. [About](#about)

+

+#### 2. [Setup](#setup)

+

+#### 3. [Training](#training)

+

+#### 4. [GUI / Rollout](#gui--rollout)

+

+## About

+

+

+

+An exemplary challenge

+

+

+#### The BugBit Environment

+

+BugBit is an abstraction of the Turing Tumble board without restrictions like board size or gravity, devised

+by [Dr. Christian Bartelt](https://www.uni-mannheim.de/en/ines/about-us/researchers/dr-christian-bartelt/).

+Bugs represent the blue Bits from the physical game.

+The orange lines indicate the control flow along the BugBit programme, which equates the flow of the marbles along the

+bits on the board.

+Each Bug has a control-in pin at its top where the control flow (marble) can come in and two control-out pins at the

+bottom, where it can leave.

+If a Bug has internal state zero (the blue Bit is flipped to the left) the control flow will leave the Bug via the right

+control

+out pin.

+If it has state one (flipped to the right) the control flow will leave it via the left control-out pin.

+

+

+

+

+

+An Illustration of a Bug.

+The data-in and -out pin may be ignored, as they are only used internally to flip a Bit’s state after the control flow

+(the marble) passes through it

+

+

+Different Bugs can now be connected with each other to define more complex programmes.

+For example, the following physical Turing Tumble board can be represented by setting the connections

+between Bugs as shown below.

+

+

+

+

+

+A Turing Tumble Board and its BugBit abstraction

+

+

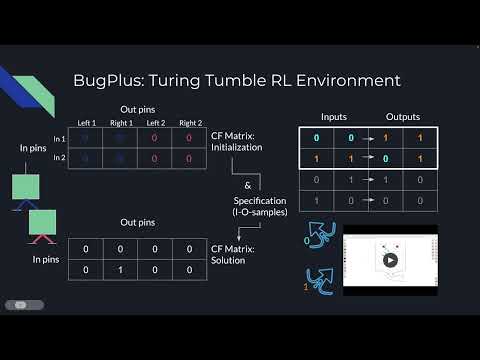

+#### The control flow matrix

+

+How the Bugs are connected with each other is stored in the control flow matrix (CF matrix).

+Visualised below is a CF matrix for two Bugs. Rows indicate the control-in pins of the Bits,

+and columns indicate the control-out pins of the Bits. As each Bit has one control-in pin, but two control-out pins,

+we have twice as many columns as rows. If a cell contains a 1 in this matrix, it means that the corresponding in and out

+pins are

+connected. By setting 1s in the CF matrix we can define a programme, which we can later run.

+

+

+

+

+

+The control flow matrix

+

+

+#### Reducing the Problem Size

+

+The Turing Tumble Puzzle Book contains all kinds of challenges for the player. So the first step was to define a

+specific

+subset of the challenges to focus on. For the purpose of this project we limited ourselves to the following problem

+class:

+

+1. *We do not allow loops* We treat the board as if the switches at the bottom did not exist, so a challenge has to be

+ solved with a single marble.

+2. *The waterfall principle* While in the BugBit abstraction gravity does not matter, we only allow Bits’ control flows

+ to go into Bits with a higher indication number, i.e. Bits placed

+ below them on the physical Turing Tumble board. For example, Bit 1 can only be connected to Bit 2 and 3, Bit 2 can

+ only be

+ connected to Bit 3, and from Bit 3 the control flow can not go any further.

+

+This limitation reduces the complexity of valid programmes, making the reinforcement learning task more feasible.

+

+#### The Challenge – What the agent learns

+

+The observation space, i.e. what the agent perceives, is comprised of two components: a CF matrix and a specification.

+As we reduced the problem size to challenges that follow the waterfall principle, the **CF matrix can indeed only have

+non-zero entries in its lower triangular part**. Therefore, we can represent the CF matrix as a vector of length

+*n2 -n*, where n is the number of Bits.

+

+The specification consists of a set of input-output pairs, describing the Bit positions

+(flipped to the left or to the right) before and after the execution of the programme represented by the CF matrix.

+The following specification now defines that when we start our programme and both Bits are flipped to the left

+(state 0,0), after the programme has completed, they should both be pointing to the right (state 1, 1).

+The second row says that when we start our programme with both Bits pointed to the right (state 1, 1), we want the first

+Bit to be pointing to the left now (state 0) while the second Bit

+stays pointed to the right (state 1) after the programme has finished.

+

+

+

+

+

+A specification: Two input output pairs describing Bit positions before and after running the programme

+

+

+In summary, the agent is given a **specification and a CF matrix**, which it then has to modify such that the latter

+describes a programme which fulfills the former. So a programme, that, if we run

+it according to how it is represented in the matrix, will flip the Bits in the way fixed in our specification.

+

+The challenge that was just introduced can be solved by the agent by connecting the first with the second Bit via the

+right control-out pin, which results in the following CF matrix:

+

+

+

+

+

+The control flow matrix solving the specified challenge

+

+

+This results in the following Turing Tumble Board which, as can be seen, fulfils the specification.

+

+

+

+

+

+The solution of the challenge visualised

+

+

+##

+

+## 2. Technical Implementation n *** input-output pairs, we keep half.

+Using

+the expert play algorithm 'solver', we generate CF matrices from these incomplete specifications in a stepwise

+'waterfall principle' fashion as described in section 1.1. To create the final pretraining samples, we take these CF

+matrices and all their

+intermediate versions ('timeline') and randomly flip up to three entries in each, thereby adding or removing edges in

+the BugBit programme. We generate the target policy vector by identifying which changes need to be reverted to come back

+to the original state of the control flow matrix, or to progress in the 'timeline' according to the 'solver' algorithm.

+

+By learning how to delete superfluous edges/add edges which move the state of the CF matrix from one intermediate state

+to the next, the agent learns how to 'reflexively' execute the expert play algorithm.

+

+The reason why we only use half of each specification set as inputs to our training is that for the full set, there

+exists

+only one single correct CF matrix satisfying them. In such a setting, we wouldn't teach the agent creative problem

+solving and reinforcement learning would not make sense methodically.

+

+### 5.2. Generating RL Training Sets

+

+To create the reinforcement trainings data set, we first generate random ***n*** bit programmes and their corresponding

+full

+specification as above. We then randomly keep half of the input-output pairs of the specification. To get training

+examples of one to ***m*** steps away from a valid solution, we iteratively add/remove edges from the original

+programmes,

+that is, we flip entries in the CF matrix. The resulting training set can then be divided into degrees of difficulty by

+the maximum 'distance', i.e. amount of steps needed to reach a known solution, which will still fulfill the

+specification. Moving up in difficulty during the CL training process will then introduce samples with a larger '

+distance' into the RL training process

+

+## 6. Callbacks : Returns the list of seeds used in this env's random

+ number generators. The first value in the list should be the

+ "main" seed, or the value which a reproducer should pass to

+ 'seed'. Often, the main seed equals the provided 'seed', but

+ this won't be true if seed=None, for example.

+ """

+ self.np_random, seed = seeding.np_random(seed)

+ return [seed]

+

+ def close(self):

+ """

+ Close method of the environment. Not implemented for bugbit.

+ :return: None

+ """

+ pass

diff --git a/reinforcement_learning/environments/envs/connectfourmvc_env.py b/reinforcement_learning/environments/envs/connectfourmvc_env.py

new file mode 100644

index 0000000..681af67

--- /dev/null

+++ b/reinforcement_learning/environments/envs/connectfourmvc_env.py

@@ -0,0 +1,122 @@

+"""

+The Connect Four environment Python adaptation of the original Java Implementation (connectfour.ConnectFour.java)

+"""

+# standard library imports

+

+from typing import List

+

+# 3rd party imports

+import gym

+# noinspection PyPackageRequirements

+import jpype

+import numpy as np

+

+# local imports (i.e. our own code)

+# noinspection PyUnresolvedReferences

+from utilities import utilities

+

+

+# noinspection PyAbstractClass

+class ConnectFourMVC(gym.Env):

+ done: bool = False

+ reward: int = None

+ state: List[List[int]] = None

+ connectfour: jpype.JClass = None

+ config: str

+ info: dict = {}

+ view = None

+ winner: int = 0

+

+ metadata = {

+ "render.modes": ["human"]

+ }

+

+ def __init__(self):

+ """

+ Initialises the environment.

+

+ :return: None

+ """

+ self.winner: int = 0

+ self.action_space: gym.spaces.Space = gym.spaces.Discrete(7)

+ self.observation_space: gym.spaces.Space = gym.spaces.Box(0, 2, shape=(6, 7))

+ self.connectfour: jpype.JClass = jpype.JClass("connectfour.ConnectFour")()

+ # noinspection PyTypeChecker

+ self.state: np.ndarray = np.array(self.connectfour.reset())

+

+ def reset(self):

+ """

+ Resets the environment (e.g. after a game has ended)

+

+ :return:

+ """

+ self.reward = 0

+ self.done = False

+ return np.array(self.connectfour.reset())

+

+ def step(self, action: int) -> list:

+ """

+ Step function of the environment.

+

+ :param action: Integer representing the action to take

+ :return: state, reward, done, info

+ """

+ self.state, self.reward, self.done, self.info, winner = self.connectfour.step(action)

+ # noinspection PyTypeChecker

+ self.state = np.array(self.state)

+ self.reward = int(self.reward)

+ self.done = bool(self.done)

+ self.info = {}

+

+ return [self.state, self.reward, self.done, self.info]

+

+ def return_reward(self) -> int:

+ """

+ Returns the reward obtained in the current step

+

+ :return: An Integer describing the reward.

+ """

+ return self.reward

+

+ def return_done(self) -> bool:

+ """

+ Returns whether the game is over or not.

+

+ :return: A Boolean. True = Done, False = Not Done

+ """

+ return self.done

+

+ def return_winner(self) -> int:

+ """

+ Returns the winner of a finished game

+

+ :return: An integer indicating who won the game.

+ """

+ return self.winner

+

+ def get_greedy_action(self, agent_id: int) -> int:

+ """

+ Returns the next action of the greedy player.

+

+ :param agent_id: specifies which ´id´ the greedy agent is (1 or 2)

+ :return: int indicating the next action of the greedy player.

+ """

+ return int(self.connectfour.getGreedyAction(agent_id))

+

+ def interactive_step(self, action: int, agent_id: int) -> list:

+ """

+ Interactive step such that only one action is taken at a time (either RL agent or heuristic/greedy agent)

+

+ :param action: which column to place a token in

+ :param agent_id: which agent is placing the token (either [heuristic/greedy]/human or RL agent; i.e. 1 or 2

+ (some integer))

+ :return: returns the new state after taking the step

+ """

+ self.state, self.reward, self.done, self.info, winner = self.connectfour.step(action, agent_id)

+ # noinspection PyTypeChecker

+ self.state = np.array(self.state)

+ self.reward = int(self.reward)

+ self.done = bool(self.done)

+ self.info = {}

+

+ return [self.state, self.reward, self.done, self.info]

diff --git a/reinforcement_learning/setup.py b/reinforcement_learning/setup.py

new file mode 100644

index 0000000..1981ef3

--- /dev/null

+++ b/reinforcement_learning/setup.py

@@ -0,0 +1,13 @@

+from setuptools import setup

+

+setup(

+ name="bugbit_v0",

+ version="1.0.0",

+ install_requires=["gym"]

+)

+

+setup(

+ name="connectfourmvc_v0",

+ version="1.0.0",

+ install_requires=["gym"]

+)

diff --git a/reinforcement_learning/train.py b/reinforcement_learning/train.py

new file mode 100644

index 0000000..ed6ce94

--- /dev/null

+++ b/reinforcement_learning/train.py

@@ -0,0 +1,108 @@

+"""

+Training file for the BugBit environment.

+"""

+

+# standard library imports

+import os

+

+# 3rd party imports

+import torch

+import ray

+from ray.tune.integration.wandb import WandbLoggerCallback

+from ray import tune

+from ray.tune.schedulers import ASHAScheduler

+import pandas as pd

+

+# local imports (i.e. our own code)

+# noinspection PyUnresolvedReferences

+from utilities import utilities

+# noinspection PyUnresolvedReferences

+from utilities import registration

+from callbacks.custom_metric_callbacks import CustomMetricCallbacks

+

+# training set path and pretrained_model_path must point to the respective files in the data directory

+

+num_bugs: int = 3

+sample_size = int((2 ** num_bugs) / 2)

+rl_training_set_file_name: str = ""

+pretrained_model_file_name: str = ""

+

+global_config = {

+ "training_set_path": f"{os.getenv('REINFORCEMENT_LEARNING_DIR')}/data/training_sets/rl_training_sets/"

+ f"{rl_training_set_file_name}",

+ "pretraining": True,

+ "pretrained_model_path": f"{os.getenv('REINFORCEMENT_LEARNING_DIR')}/data/model_weights"

+ f"/{pretrained_model_file_name}",

+}

+

+if not rl_training_set_file_name or (global_config["pretraining"] and not pretrained_model_file_name):

+ raise ValueError("rl_training_set_file_name and/or pretrained_model_path must be set")

+

+if __name__ == "__main__":

+ # load training set from pickle

+ training_set = pd.read_pickle(global_config["training_set_path"])

+

+ # initialise ray (set local_mode to True for debugging)

+ ray.init()

+

+ # running the experiment

+ tune.run(

+ "PPO",

+ verbose=0, # verbosity of the console output during training

+ # checkpointing settings

+ checkpoint_freq=5, # after every 5 epochs, a checkpoint is created

+ local_dir=f"{os.getenv('REINFORCEMENT_LEARNING_DIR')}/data/agent_checkpoints/bugbit",

+ checkpoint_score_attr="custom_metrics/game_history_mean",

+ keep_checkpoints_num=1, # how many checkpoints to keep in the end

+ num_samples=1, # number of simultaeous agents to train

+ scheduler=ASHAScheduler(

+ metric="custom_metrics/game_history_mean",

+ time_attr="training_iteration",

+ mode="max",

+ max_t=81,

+ reduction_factor=3,

+ brackets=1

+ ), # scheduler for training (i.e. predictive termination)

+ callbacks=[

+ # adjust the entries here to conform to your wandb environment

+ # cf. https://docs.wandb.ai/ and https://docs.ray.io/en/master/tune/examples/tune-wandb.html

+ WandbLoggerCallback(

+ api_key_file="wandb_key_file",

+ project="ray-tune-bugbit",

+ entity="mtp-ai-board-game-engine",

+ group="final-submission-testing",

+ ),

+ ],

+ # trainer config

+ config={

+ "callbacks": CustomMetricCallbacks,

+ "num_envs_per_worker": 1, # how many environments to run in parallel per worker (read: CPU thread)

+ "num_workers": 1, # how many CPU threads are used for training one agent

+ "framework": "torch", # framework to use for training (i.e. PyTorch or TensorFlow)

+ "env": "bugbit-v0", # name of the environment which is used for training

+ # config of the neural network

+ # if pretraining=True: the pretrained model is used for initialisation and hence must have the same config

+ # as below. Else: the model is initialised with random weights and one can modify the model config as needed.

+ "model": {

+ "custom_model": "custom_torch_fcnn",

+ "custom_model_config": {

+ "fcnet_hiddens": [256, 256, 256],

+ "fcnet_activation": torch.nn.ReLU,

+ "no_final_layer": False,

+ "vf_share_layers": False,

+ "free_log_std": False,

+ "pretraining": global_config["pretraining"],

+ "pretrained_model_path": global_config["pretrained_model_path"],

+ },

+ },

+ # bugbit gym environment config

+ "env_config": {

+ "n_bugs": num_bugs, # number of bugs

+ "training_set": training_set,

+ "max_steps": 3, # the maximum number of steps before the game is terminated

+ "sample_size": sample_size, # MUST be set to (n_bugs^2)/2

+ "pretraining": global_config["pretraining"],

+ "pretrained_model_path": global_config["pretrained_model_path"],

+ }

+ },

+ )

diff --git a/reinforcement_learning/translators/__init__.py b/reinforcement_learning/translators/__init__.py

new file mode 100644

index 0000000..e69de29

diff --git a/reinforcement_learning/translators/assets/defaultState.png b/reinforcement_learning/translators/assets/defaultState.png

new file mode 100644

index 0000000..41cff1e

Binary files /dev/null and b/reinforcement_learning/translators/assets/defaultState.png differ

diff --git a/reinforcement_learning/translators/assets/long_board.png b/reinforcement_learning/translators/assets/long_board.png

new file mode 100644

index 0000000..07e5e6a

Binary files /dev/null and b/reinforcement_learning/translators/assets/long_board.png differ

diff --git a/reinforcement_learning/translators/assets/newDefaultState.png b/reinforcement_learning/translators/assets/newDefaultState.png

new file mode 100644

index 0000000..8cf4617

Binary files /dev/null and b/reinforcement_learning/translators/assets/newDefaultState.png differ

diff --git a/reinforcement_learning/translators/assets/newDefaultState2.png b/reinforcement_learning/translators/assets/newDefaultState2.png

new file mode 100644

index 0000000..b81f335

Binary files /dev/null and b/reinforcement_learning/translators/assets/newDefaultState2.png differ

diff --git a/reinforcement_learning/translators/aux_image_to_code.py b/reinforcement_learning/translators/aux_image_to_code.py

new file mode 100644

index 0000000..7d3997f

--- /dev/null

+++ b/reinforcement_learning/translators/aux_image_to_code.py

@@ -0,0 +1,776 @@

+"""

+This file contains the translator (T2) from a matrix representation of a Turing Tumble Board (as can be obtained from

+the Turing Tumble Simulator as a png image) to BugBit code that is executed via jpype.

+

+"""

+

+# standard library imports

+import os

+from typing import List

+import json

+import pickle

+

+# 3rd party imports

+# noinspection PyPackageRequirements

+import jpype

+import numpy as np

+

+# local imports

+# noinspection PyUnresolvedReferences

+from utilities import utilities

+

+# get java classes

+NegBugsBasicArithmetic = jpype.JClass(

+ "de.bugplus.examples.development.NegBugsBasicArithmetic"

+)

+BugplusLibrary = jpype.JClass("de.bugplus.specification.BugplusLibrary")

+BugplusProgramSpecification = jpype.JClass(

+ "de.bugplus.specification.BugplusProgramSpecification"

+)

+BugplusProgramImplementation = jpype.JClass(

+ "de.bugplus.development.BugplusProgramImplementation"

+)

+BugplusProgramInstanceImpl = jpype.JClass(

+ "de.bugplus.development.BugplusProgramInstanceImpl"

+)

+BugplusThread = jpype.JClass("de.bugplus.development.BugplusThread")

+BugplusDevelopment = jpype.JPackage("de.bugplus.development")

+

+logfile = open('logfile.txt', "w")

+

+

+class Translator:

+ # The identifying number for each piece and field

+ _left_edge_rep: int = 1

+ _right_edge_rep: int = 2

+ _bidir_edge_rep: int = 3

+ _gear_rep: int = 4

+ _zero_bit_rep: int = 5

+ _stopping_pin_rep: int = 6

+ _zero_gearbit_rep: int = 7

+ _one_gearbit_rep: int = 8

+ _one_bit_rep: int = 9

+

+ # fields

+ _invalid_field: int = -100

+

+ # board size

+ _board_shape: tuple = (11, 11)

+

+ # other class variables

+ matrix: np.ndarray = np.zeros(shape=_board_shape)

+ function_library: None = None

+ bug_counter: int = 0

+ specification: BugplusProgramSpecification = None

+ challenge_implementation: BugplusProgramImplementation = None

+ bug_matrix: np.array = np.zeros(shape=_board_shape, dtype=object)

+ file_write: bool = False

+

+ bugplus_development_path: str = f"{os.getenv('REINFORCEMENT_LEARNING_DIR')}/../src/de/bugplus/examples/development/"

+

+ # for the translation into a simple CF-Matrix

+ num_bugs: int = 0

+

+ def matrix_to_bugbit_code(self, mtrx: str):

+ """

+ Takes a matrix representing the board and turns it into executable BugBit code

+

+ :param mtrx: A representation of a Turing Tumble board

+ :return: None

+ """

+ # decode the matrix

+ mtrx = json.loads(mtrx)

+ mtrx = np.array(mtrx["array"])

+

+ # the blueprint of the java file that can run a BugPlus program is copied into the Challenge.java file

+ with open(self.bugplus_development_path + "Blueprint.txt", "r") as template:

+ blueprint = template.readlines()

+ with open(

+ self.bugplus_development_path + "Challenge.java", "w"

+ ) as challenge:

+ for b in blueprint:

+ challenge.write(b)

+ # run the blueprint in python via JPype

+ self.matrix: np.array = mtrx

+ # self.write_to_file(f"Matrix: {self.matrix}")

+ self.function_library = BugplusLibrary.getInstance()

+ negation_bug_implementation = (

+ BugplusDevelopment.BugplusNEGImplementation.getInstance()

+ )

+ self.function_library.addSpecification(

+ negation_bug_implementation.getSpecification()

+ )

+

+ self.specification = BugplusProgramSpecification.getInstance(

+ "T2_Code", 0, 2, self.function_library

+ )

+

+ # additionally, write to file

+ self.write_to_file(

+ 'BugplusProgramSpecification T2_Code_specification = BugplusProgramSpecification.getInstance'

+ '("T2_Code_Instance", 0, 2, myFunctionLibrary); '

+ )

+

+ self.challenge_implementation = self.specification.addImplementation()

+ self.write_to_file(

+ "BugplusProgramImplementation T2_Code_Implementation = T2_Code_specification.addImplementation();"

+ )

+ # add a negation bug for each bit on the board

+ for i in self.find_bits():

+ self.bug_matrix[i[0], i[1]] = self.addNegBug()

+ self.num_bugs += 1

+

+ # create CF Matrix:

+ self.cf_matrix = np.zeros(shape=(self.num_bugs, 2 * self.num_bugs), dtype=np.int)

+

+ # connect the control in flow of the framework program to the first bit that will be active when starting a game

+ try:

+ self.connect_starting_bit()

+ except Exception:

+ self.write_to_file("An error happened when connecting the ControlInInterface")

+

+ # control flow: connect the bugs with each other

+ try:

+ self.connect_bits()

+ except Exception:

+ self.write_to_file("Bug in Connect Blue bits")

+

+ # data flow: connect the bugs with each other

+ try:

+ self.synchronize_connected_gear_bits()

+ except Exception as e:

+ self.write_to_file("Bug in Connect Coherent Components")

+ self.write_to_file(str(e))

+

+ # implement an instance of our BugPlus program

+ challenge_instance = self.challenge_implementation.instantiate()

+ self.write_to_file(

+ "BugplusInstance challengeInstance = T2_Code_Implementation.instantiate();"

+ )

+

+ t2_code_instance_impl = challenge_instance.getInstanceImpl()

+ self.write_to_file(

+ "BugplusProgramInstanceImpl T2_Code_Instance_Impl = challengeInstance.getInstanceImpl();"

+ )

+

+ # flip each bit corresponding to its starting state

+ bug_pos = self.find_bits()

+ for pos in bug_pos:

+ id = self.bug_matrix[pos]

+ value = 0

+

+ if self.matrix[pos] in [self._one_bit_rep, self._one_gearbit_rep]:

+ value = 1

+ t2_code_instance_impl.getBugs().get(id).setInternalState(value)

+ self.write_to_file(

+ f'T2_Code_Instance_Impl.getBugs().get("{id}").setInternalState({value});'

+ )

+

+ # create a thread that can run the program via JPype

+ new_thread = BugplusThread.getInstance()

+ self.write_to_file("BugplusThread newThread = BugplusThread.getInstance();")

+ new_thread.connectInstance(challenge_instance)

+ self.write_to_file("newThread.connectInstance(challengeInstance);")

+

+ # run the program via JPype

+ self.write_to_file("newThread.start();")

+

+ # print information regarding the bits' states and call counters

+ ids = []

+ for pos in bug_pos:

+ ids.append(self.bug_matrix[pos])

+

+ self.write_to_file(

+ "LinkedList challengeBugs = new LinkedList();"

+ )

+

+ for id in ids:

+ print(

+ f"Internal State: {id} : \t {t2_code_instance_impl.getBugs().get(id).getInternalState()}"

+ )

+ print(

+ f"Call Counter: {id} : \t {t2_code_instance_impl.getBugs().get(id).getCallCounter()}"

+ )

+

+ self.write_to_file(

+ f'System.out.println("Internal State " + "{id}" + ": \t" + T2_Code_Instance_Impl.getBugs().get("{id}")'

+ f'.getInternalState());'

+ )

+ self.write_to_file(

+ f'System.out.println("Call Counter " + "{id}" + ": \t" + T2_Code_Instance_Impl.getBugs().get("{id}")'

+ f'.getCallCounter() + "\\n");'

+ )

+

+ with open('CF_Matrix.pkl', 'wb') as handle:

+ pickle.dump(self.cf_matrix, handle, protocol=pickle.HIGHEST_PROTOCOL)

+

+ def connect_bits(self):

+ """

+ Connects the control flow of the bits.

+

+ :return: None

+ """

+

+ # get all positions with bits

+ bit_positions = self.find_bits()

+

+ # using 2 individual buckets (one red one blue)

+ # blue_bucket_out = (0, int((self._BOARD_SHAPE[1] + 1) / 4))

+ # red_bucket_out = (0, int(self._BOARD_SHAPE[1] - (self._BOARD_SHAPE[1] + 1) / 4 - 1))

+

+ # using 1 single shared bucket in the top center of the board

+ blue_bucket_out = (0, int(self._board_shape[1] / 2))

+ red_bucket_out = blue_bucket_out

+

+ # for each bit

+ for current_pos in bit_positions:

+

+ # the current momentum

+ momentum_right_next_l = False

+ momentum_right_next_r = True

+

+ # assign coordinates for bottom left and bottom right of bit

+ next_l = (current_pos[0] + 1, current_pos[1] - 1)

+ next_r = (current_pos[0] + 1, current_pos[1] + 1)

+

+ # check if next position is out of bounds

+ if next_l[1] < 0 or next_l[1] > self._board_shape[1] - 1:

+ next_l = None

+ if next_r[1] < 0 or next_r[1] > self._board_shape[1] - 1:

+ next_r = None

+ if self.matrix[next_l[0], next_l[1]] == -100:

+ next_l = None

+ if self.matrix[next_r[0], next_r[1]] == -100:

+ next_r = None

+

+ # all bits that will be implemented as bugs

+ mask_list = [

+ self._one_bit_rep,

+ self._zero_bit_rep,

+ self._one_gearbit_rep,

+ self._zero_gearbit_rep,

+ self._stopping_pin_rep,

+ ]

+

+ # exit bit to the left

+ if next_l:

+ # as long as we don't arrive at the next bit

+ while self.matrix[next_l] not in mask_list:

+ # check if we are in second to last row

+ if next_l[0] == self._board_shape[0] - 2:

+ # specify which switch will be activated

+ if next_l[1] in [0, 2]:

+ next_l = blue_bucket_out

+ momentum_right_next_l = True

+ continue

+ elif next_l[1] in [6, 8]:

+ next_l = red_bucket_out

+ momentum_right_next_l = True

+ continue

+ elif (

+ next_l[1] == 4

+ and self.matrix[next_l] == self._left_edge_rep

+ ):

+ next_l = blue_bucket_out

+ momentum_right_next_l = True

+ continue

+ elif (

+ next_l[1] == 6

+ and self.matrix[next_l] == self._right_edge_rep

+ ):

+ next_l = red_bucket_out

+ momentum_right_next_l = True

+ continue

+

+ # check if we are in last row

+ elif next_l[0] == self._board_shape[0] - 1:

+ # assign which switch will be activated

+ if next_l[1] != 5:

+ next_l = None

+ raise AssertionError(

+ "This is an error. This coordinate should never appear (next_l)."

+ )

+

+ elif self.matrix[next_l] == self._left_edge_rep:

+ next_l = blue_bucket_out

+ momentum_right_next_l = True

+ continue

+ elif self.matrix[next_l] == self._right_edge_rep:

+ next_l = red_bucket_out

+ momentum_right_next_l = False

+ continue

+ # normal case: check what next edge will be

+ # green edges

+ if self.matrix[next_l] == self._right_edge_rep:

+ next_l = (next_l[0] + 1, next_l[1] + 1)

+ momentum_right_next_l = True

+ elif self.matrix[next_l] == self._left_edge_rep:

+ next_l = (next_l[0] + 1, next_l[1] - 1)

+ momentum_right_next_l = False

+ # orange bidirectional edge

+ elif self.matrix[next_l] == self._bidir_edge_rep:

+ if momentum_right_next_l:

+ next_l = (next_l[0] + 1, next_l[1] + 1)

+ else:

+ next_l = (next_l[0] + 1, next_l[1] - 1)

+ # check if next position will be out of bounds (if we follow edge)

+ if next_l[1] < 0 or next_l[1] > self._board_shape[1] - 1:

+ next_l = None

+ raise AssertionError(

+ "This is an error. Next next_l position is out of bounds."

+ )

+

+ if self.matrix[next_l[0], next_l[1]] == -100:

+ next_l = None

+ raise AssertionError(

+ "This is an error. Next next_l position is out of bounds."

+ )

+

+ # we reached the next bit

+ if next_l:

+

+ # assign ids of the bits to connect

+ id_1 = self.bug_matrix[current_pos]

+ id_2 = self.bug_matrix[next_l]

+

+ if type(id_2) != str:

+ self.challenge_implementation.connectControlOutInterface(id_1, 0, 0)

+ self.challenge_implementation.connectControlOutInterface(id_1, 0, 1)

+ self.write_to_file(

+ f'T2_Code_Implementation.connectControlOutInterface("{id_1}", 0, 0);'

+ )

+ self.write_to_file(

+ f'T2_Code_Implementation.connectControlOutInterface("{id_1}", 0, 1);'

+ )

+

+ else:

+ self.challenge_implementation.addControlFlow(id_1, 0, id_2)

+ self.write_to_file(

+ f'T2_Code_Implementation.addControlFlow("{id_1}", 0, "{id_2}");'

+ )

+

+ # reparse ids:

+ i1 = int(id_1.split("_")[1])

+ i2 = int(id_2.split("_")[1])

+

+ # set 1 in CF Matrix

+ self.cf_matrix[i2, 2 * i1] = 1

+

+ # exit bit to the right

+ if next_r:

+ # as long as we don't arrive at the next bit

+ while self.matrix[next_r] not in mask_list:

+ # specify which switch will be activated

+ if next_r[0] == self._board_shape[0] - 2:

+ if next_r[1] in [0, 2]:

+ next_r = blue_bucket_out

+ momentum_right_next_r = True

+ continue

+ elif next_r[1] in [6, 8]:

+ next_r = red_bucket_out

+ momentum_right_next_r = True

+ continue

+ elif (

+ next_r[1] == 4

+ and self.matrix[next_r] == self._left_edge_rep

+ ):

+ next_r = blue_bucket_out

+ momentum_right_next_r = True

+ continue

+ elif (

+ next_r[1] == 6

+ and self.matrix[next_r] == self._right_edge_rep

+ ):

+ next_r = red_bucket_out

+ momentum_right_next_r = True

+ continue

+

+ # check if we are in last row

+ elif next_r[0] == self._board_shape[0] - 1:

+ # assign which swith will be activated

+ if next_r[1] != 5:

+ next_r = None

+ raise AssertionError(

+ "This is an error. This coordinate should never appear (next_r)."

+ )

+ elif self.matrix[next_r] == self._left_edge_rep:

+ next_r = blue_bucket_out

+ momentum_right_next_r = True

+ continue

+ elif self.matrix[next_r] == self._right_edge_rep:

+ next_r = red_bucket_out

+ momentum_right_next_r = False

+ continue

+

+ # usual case: check what next edge will be

+ # green edges

+ elif self.matrix[next_r] == self._right_edge_rep:

+ next_r = (next_r[0] + 1, next_r[1] + 1)

+ momentum_right_next_r = True

+ elif self.matrix[next_r] == self._left_edge_rep:

+ next_r = (next_r[0] + 1, next_r[1] - 1)

+ momentum_right_next_r = False

+

+ # orange bidirectional edge

+ elif self.matrix[next_r] == self._bidir_edge_rep:

+ if momentum_right_next_r:

+ next_r = (next_r[0] + 1, next_r[1] + 1)

+ else:

+ next_r = (next_r[0] + 1, next_r[1] - 1)

+

+ # check if next position will be out of bounds (if we follow edge)

+ if next_r[1] < 0 or next_r[1] > self._board_shape[1] - 1:

+ next_r = None

+ raise AssertionError(

+ "This is an error. Next next_r position is out of bounds."

+ )

+ if self.matrix[next_r[0], next_r[1]] == -100:

+ next_r = None

+ raise AssertionError(

+ "This is an error. Next next_r position is out of bounds."

+ )

+

+ # we reached the next bit

+ if next_r:

+ id_1 = self.bug_matrix[current_pos]

+ id_2 = self.bug_matrix[next_r]

+ # assign ids of the bits to connect

+ if type(id_2) != str:

+ self.challenge_implementation.connectControlOutInterface(id_1, 1, 0)

+ self.challenge_implementation.connectControlOutInterface(id_1, 1, 1)

+ self.write_to_file(

+ f'T2_Code_Implementation.connectControlOutInterface("{id_1}", 1, 0);'

+ )

+ self.write_to_file(

+ f'T2_Code_Implementation.connectControlOutInterface("{id_1}", 1, 1);'

+ )

+ else:

+ self.challenge_implementation.addControlFlow(id_1, 1, id_2)

+ # self.addControlFlow(id_1, 1, id_2)

+ self.write_to_file(

+ f'T2_Code_Implementation.addControlFlow("{id_1}", 1, "{id_2}");'

+ )

+ # reparse ids:

+ i1 = int(id_1.split("_")[1])

+ i2 = int(id_2.split("_")[1])

+ # set 1 in CF Matrix

+ self.cf_matrix[i2, 2 * i1 + 1] = 1

+

+ def connect_starting_bit(self):

+ """

+ Connects the initial control flow of the interface with the first bit

+

+ :return: None

+ """

+

+ # define the position just below the blue bucket

+ starting_pos = (0, int(self._board_shape[1] / 2))

+ next_pos = starting_pos

+ momentum_right = True

+

+ # follow the edges until we encounter a bit

+ while next_pos not in self.find_bits():

+

+ # follow green edges

+ if self.matrix[next_pos] == self._right_edge_rep:

+ next_pos = (next_pos[0] + 1, next_pos[1] + 1)

+ momentum_right = True

+ elif self.matrix[next_pos] == self._left_edge_rep:

+ next_pos = (next_pos[0] + 1, next_pos[1] - 1)

+ momentum_right = False

+

+ # follow orange edges

+ elif self.matrix[next_pos] == self._bidir_edge_rep:

+ if momentum_right:

+ next_pos = (next_pos[0] + 1, next_pos[1] + 1)

+ else:

+ next_pos = (next_pos[0] + 1, next_pos[1] - 1)

+

+ # connect the control flow of the interface with the bit that will be activated first when starting the program

+ start_id = self.bug_matrix[next_pos]

+ self.connectControlInInterface(start_id)

+

+ def connect_gear_bits(self, gear_bit_pos: tuple) -> List[tuple]:

+ """

+ Identifies gear bits that are connected via gears

+

+ :param gear_bit_pos: The position of a single gearbit on the board

+ :return: a list of the gear bits that are connected via gears

+ """

+

+ # check for gears and gear bits directly connected with input gear bit

+ connected_components = [gear_bit_pos]

+ list_size_increase = True

+

+ # as long as we keep finding neighbouring gears or gear bits

+ while list_size_increase:

+ list_size_increase = False

+

+ # for all gears and gear bits already in the list

+ for component in connected_components:

+

+ # check in all four directions for neighboring gears or gearbits

+ above_pos = (component[0] - 1, component[1])

+ below_pos = (component[0] + 1, component[1])

+ right_pos = (component[0], component[1] + 1)

+ left_pos = (component[0], component[1] - 1)

+

+ # if a gear or gear bit is found, add it to the list

+ if self.check_gear_or_gearbit(above_pos):

+ if above_pos not in connected_components:

+ connected_components.append(above_pos)

+ list_size_increase = True

+

+ if self.check_gear_or_gearbit(below_pos):

+ if below_pos not in connected_components:

+ connected_components.append(below_pos)

+ list_size_increase = True

+

+ if self.check_gear_or_gearbit(right_pos):

+ if right_pos not in connected_components:

+ connected_components.append(right_pos)

+ list_size_increase = True

+

+ if self.check_gear_or_gearbit(left_pos):

+ if left_pos not in connected_components:

+ connected_components.append(left_pos)

+ list_size_increase = True

+

+ # filter out the gear bits in the list connecting gear bits and gears

+ connected_gear_bits: List[tuple] = []

+ gear_bits = self.find_gear_bits()

+ for comp in connected_components:

+ if comp in gear_bits:

+ connected_gear_bits.append(comp)

+

+ # return the list of gear_bits whose data flows have to be connected

+ return connected_gear_bits

+

+ def synchronize_connected_gear_bits(self):

+ """

+ Connects the data flows of all gear bits that are connected via gears

+

+ :return:None

+ """

+

+ all_gear_bits_until_now = []

+

+ # for each gear bit on the board:

+ for gear_bit_pos in self.find_gear_bits():

+ # self.write_to_file(f"GearbitPos: {gear_bit_pos}")

+

+ # if the gear bit is not already in the list

+ if gear_bit_pos not in all_gear_bits_until_now:

+ try:

+ # find all gear bits connected to current gear bit

+ connected_comps = self.connect_gear_bits(gear_bit_pos)

+ except Exception as e:

+ self.write_to_file("Bug in connect_gear_bits")

+ self.write_to_file(str(e))

+ raise UserWarning(e)

+

+ # add all identified gear bits connected to current gear bit to the list

+ for pos in connected_comps:

+ try:

+ all_gear_bits_until_now.append(pos)

+ except Exception as e:

+ self.write_to_file(str(e))

+ try:

+

+ # obtain the ids of the connected gear bit-bugs

+ ids = [self.bug_matrix[conn_comps] for conn_comps in connected_comps]

+ except Exception as e:

+ self.write_to_file("Error in ids = self.bugMatrix(...)")

+ self.write_to_file(str(e))

+ raise UserWarning(e)

+

+ # connect the connected gear bit-bugs

+ for id1 in ids:

+ for id2 in ids:

+ self.challenge_implementation.addDataFlow(id1, id2, 0)

+ self.write_to_file(

+ f'T2_Code_Implementation.addDataFlow("{id1}", "{id2}", 0);'

+ )

+

+ def check_gear_or_gearbit(self, pos: tuple) -> bool:

+ """

+ checks if a position contains a gear or a gear bit

+

+ :param pos: a position on the board

+ :return: A Boolean: True if position contains a gear or a gear bit, otherwise False

+ """

+

+ # check if the position to check is out of bounds

+ if pos[1] < 0 or pos[1] > self._board_shape[1] - 1 or pos[0] < 0 or pos[0] > self._board_shape[0] - 1:

+ return False

+

+ # check if the position contains a gear or a gear bit

+ elif self.matrix[pos] in [

+ self._one_gearbit_rep,

+ self._zero_gearbit_rep,

+ self._gear_rep,

+ ]:

+ return True

+ else:

+ return False

+

+ def find_bits(self) -> List[tuple]:

+ """

+ Finds the positions of all bits on the board (normal bits AND gear bits).

+

+ :return: a list containing the positions of all bits on the board

+ """

+

+ indices = np.where(

+ (

+ (self.matrix == self._zero_bit_rep)

+ | (self.matrix == self._one_bit_rep)

+ | (self.matrix == self._one_gearbit_rep)

+ | (self.matrix == self._zero_gearbit_rep)

+ )

+ )

+ ind_list: List[tuple] = []

+ for i in range(len(indices[0])):

+ ind_list.append((indices[0][i], indices[1][i]))

+ return ind_list

+

+ def find_gear_bits(self) -> List[tuple]:

+ """

+ Finds the positions of all gear bits on the board (ONLY gear bits).

+

+ :return: the positions of all gear bits on the board

+ """

+ indices = np.where(

+ (

+ (self.matrix == self._one_gearbit_rep)

+ | (self.matrix == self._zero_gearbit_rep)

+ )

+ )

+ ind_list: List[tuple] = []

+ for i in range(len(indices[0])):

+ ind_list.append((indices[0][i], indices[1][i]))

+ return ind_list

+

+ def write_to_file(self, text: str):

+ """

+ Writes a string into a java file that can then be executed later.

+

+ :param text: Java Code as a string

+ :return: None

+ """

+

+ # read the content of the java file

+ with open(self.bugplus_development_path + "Challenge.java", "r") as f:

+ contents = f.readlines()

+

+ # add the new text to the lower end of the java file content

+ contents[-3] = contents[-3] + "\t\t" + text + "\n"

+

+ # write the modified content back into the java file

+ with open(self.bugplus_development_path + "Challenge.java", "w") as f:

+ for c in contents:

+ f.write(c)

+

+ def addNegBug(self) -> str:

+ """

+ Adds a negation bug to the program.

+

+ :return: The ID of the newly added bug as a string

+ """

+

+ # add a new bug

+ self.challenge_implementation.addBug("!", f"!_{self.bug_counter}")

+ self.write_to_file(

+ f'T2_Code_Implementation.addBug("!", "!_{self.bug_counter}");'

+ )

+

+ # connect the data flow of the bug with itself

+ self.challenge_implementation.addDataFlow(

+ f"!_{self.bug_counter}", f"!_{self.bug_counter}", 0

+ )

+ self.write_to_file(

+ f'T2_Code_Implementation.addDataFlow("!_{self.bug_counter}", "!_{self.bug_counter}", 0);'

+ )

+

+ # return the id of the newly added bug

+ self.bug_counter += 1

+ return f"!_{self.bug_counter - 1}"

+

+ def addDataFlow(

+ self,

+ id_source_bug: str,

+ id_target_bug: str,

+ index_data_in: int

+ ):

+ """

+ Adds a data flow from a given source bug to a given target bug

+

+ :param id_source_bug: the id of the source bug

+ :param id_target_bug:the id of the target bug

+ :param index_data_in: the index of the data in pin that should be used to connect the bugs (0 or 1)

+ :return: None

+ """

+ self.challenge_implementation.addDataFlow(id_source_bug, id_target_bug, index_data_in)

+

+ def connectDataInInterface(

+ self,

+ id_internal_bug: str,

+ index_internal_data_in: int,

+ index_external_data_in_interface: int,

+ ):

+ """

+ Connects a data in pin of an internal bug to the data in pin of the external interface

+

+ :param id_internal_bug: the id of the internal bug as it is defined for the Java Bugplus Code (e.g. "!_2")

+ :param index_internal_data_in: defines the data in pin of the internal bug that should be connected to the

+ DataInInterface. 0 or 1

+ :param index_external_data_in_interface: defines the index of the data in pin of the external bug

+ (DataInInterface) that should be connected to the internal Bug. 0 or 1

+ :return: None

+ """

+ self.challenge_implementation.connectDataInInterface(

+ id_internal_bug, index_internal_data_in, index_external_data_in_interface

+ )

+

+ def connectDataOutInterface(self, id_internal_bug: str):

+ """

+ Connects a data out pin of an internal bug to the data out pin of the external interface

+

+ :param id_internal_bug: id_internal_bug: the id of the internal bug as it is defined for the Java Bugplus Code

+ (e.g. "!_2")

+ """

+ self.challenge_implementation.connectDataOutInterface(id_internal_bug)

+

+ def connectControlInInterface(self, id_internal_bug: str):

+ """

+ Connects the control flow of the interface with the bit that will be activated first when starting the program.

+

+ :param id_internal_bug: the id of the bug that should be activated first when starting the program.

+ :return: None

+ """

+ self.write_to_file(

+ f'T2_Code_Implementation.connectControlInInterface("{id_internal_bug}");'

+ )

+ self.challenge_implementation.connectControlInInterface(id_internal_bug)

+

+ def addControlFlow(

+ self,

+ id_source_bug: str,

+ index_control_out: int,

+ id_target_bug: str

+ ):

+ """

+ Adds a control flow from a given source bug to a given target bug.

+

+ :param id_source_bug: the id of the source bug

+ :param index_control_out: the index of the control out pin that should be used to connect the bugs (0 or 1)

+ :param id_target_bug: the id of the target bug

+ :return:

+ """

+ self.write_to_file(

+ f'T2_Code_Implementation.addControlFlow("{id_source_bug}", 1, "{id_target_bug}");'

+ )

+

+ self.challenge_implementation.addControlFlow(

+ id_source_bug, index_control_out, id_target_bug

+ )

diff --git a/reinforcement_learning/translators/aux_matrix_to_image.py b/reinforcement_learning/translators/aux_matrix_to_image.py

new file mode 100644

index 0000000..16ad9f6

--- /dev/null

+++ b/reinforcement_learning/translators/aux_matrix_to_image.py

@@ -0,0 +1,144 @@

+"""

+Auxiliary function: uploads auxiliary TT matrix to Jesse Crossen simulator.

+"""

+

+# standard library imports

+import base64

+import webbrowser

+from io import BytesIO

+from typing import List

+

+# 3rd party imports

+from PIL import Image

+import numpy as np

+

+parts = {

+ "NotValid": -100,

+ "Valid": 0,

+ "GreenLeft": 1,

+ "GreenRight": 2,

+ "Orange": 3,

+ "Red": 4,

+ "BlueLeft": 5,

+ "Black": 6,

+ "BlueWheelLeft": 7,

+ "BlueWheelRight": 8,

+ "BlueRight": 9,

+ "LightGrey": -99,

+ "DarkGrey": -98

+}

+colors = {

+ "notValid": [32, 32, 32, 254],

+ "red": [255, 0, 0, 255],

+ "greenRight": [0, 255, 0, 255],

+ "greenLeft": [0, 189, 0, 255],

+ "orange": [255, 128, 0, 255],

+ "blueLeft": [0, 255, 255, 255],

+ "blueWheelLeft": [128, 0, 255, 255],

+ "black": [0, 0, 0, 255],

+ "white": [255, 255, 255, 255],

+ "blueWheelRight": [96, 0, 189, 255],

+ "blueRight": [0, 189, 189, 255],

+ "lightGrey": [128, 128, 128, 254],

+ "darkGrey": [96, 96, 96, 254]

+}

+

+

+def translate_value_to_color(val: int) -> List:

+ """

+ Auxiliary function to assign RGBA values to TT board pieces;

+ needed to generate picture for upload to Jesse Crossen TT simulator.

+

+ :param val: integer representing TT piece

+ :return: colors: list of RGBA values

+ """

+ if val == parts["NotValid"]:

+ return colors["notValid"]

+

+ if val == parts["GreenLeft"]:

+ return colors["greenLeft"]

+

+ if val == parts["Black"]:

+ return colors["black"]

+

+ if val == parts["BlueLeft"]:

+ return colors["blueLeft"]

+

+ if val == parts["BlueRight"]:

+ return colors["blueRight"]

+

+ if val == parts["BlueWheelLeft"]:

+ return colors["blueWheelLeft"]

+

+ if val == parts["BlueWheelRight"]:

+ return colors["blueWheelRight"]

+

+ if val == parts["DarkGrey"]:

+ return colors["darkGrey"]

+

+ if val == parts["GreenRight"]:

+ return colors["greenRight"]

+

+ if val == parts["LightGrey"]:

+ return colors["lightGrey"]

+

+ if val == parts["Orange"]:

+ return colors["orange"]

+

+ if val == parts["Red"]:

+ return colors["red"]

+

+ return colors["white"]

+

+

+def translate_matrix_to_board(mat: np.ndarray) -> str:

+ """

+ Takes matrix indicating placement of TT pieces and returns link for Jesse Crossen TT simulator.

+

+ :param mat: auxiliary matrix indicating position of TT pieces on board.

+ :return: formatted_str: image for upload to Jesse Crossen TT simulator in base 64.

+ """

+ mat = np.array(mat)

+ img = Image.open('assets/long_board.png')

+ pixel_map = img.load()

+

+ rows = len(mat)

+ cols = len(mat[0])

+ z = int((cols - 1) / 2)

+ for i in range(z):

+ for j in range(z - i):

+ mat[i, j] = parts["NotValid"]

+ mat[i, cols - j - 1] = parts["NotValid"]

+ z2 = int((cols - 3) / 2)

+ for j in range(z2):

+ mat[rows - 1, j] = parts["LightGrey"]

+ mat[rows - 1, cols - j - 1] = parts["DarkGrey"]

+ # testing:

+ mat[rows - 2, 0] = parts["LightGrey"]

+ mat[rows - 2, cols - 1] = parts["DarkGrey"]

+ print(mat)

+

+ for i in range(1, rows + 1):

+ for j in range(2, cols + 2):

+ # translates the value from the matrix into an rgba value (r, g, b, a)

+ color = tuple(translate_value_to_color(mat[i - 1][j - 2]))

+ pixel_map[j - 1, i + 1] = color

+

+ buffered = BytesIO()

+ img.save(buffered, format="PNG")

+ img_str = base64.b64encode(buffered.getvalue())

+ print(img_str)

+ formatted_str = f"data:image/png;base64,{str(img_str)[2:-1]}"

+ return formatted_str

+

+

+def open_new_board(mat: np.ndarray):

+ """

+ Opens base-64 representation of TT board in browser.

+

+ :param mat: numpy array indicating positioning of TT pieces on board

+ :return: (nothing): displays TT board in browser.

+ """

+ ttsim_link = "https://jessecrossen.github.io/ttsim/#s=17,33&z=32&cc=8&cr=16&t=2&sp=1&sc=0&b="

+ board = translate_matrix_to_board(mat)

+ webbrowser.open(ttsim_link + board, new=2)

diff --git a/reinforcement_learning/translators/aux_partial_orderer.py b/reinforcement_learning/translators/aux_partial_orderer.py

new file mode 100644

index 0000000..0e147fb

--- /dev/null

+++ b/reinforcement_learning/translators/aux_partial_orderer.py

@@ -0,0 +1,72 @@

+"""

+Generate page rank scores and a list of connected bits from CF matrix.

+"""

+# standard library imports

+from typing import List

+

+# 3rd party imports

+import numpy as np

+from igraph import *

+

+

+class PartialOrderer:

+ @classmethod

+ def order(cls, cf_matrix: np.ndarray) -> List[List]:

+ """

+ For n (bug)bits in the CF matrix, return IDs of node connected to control-out pins.

+

+ :param cf_matrix: a matrix of dimensions n x 2n

+ :return: fathers: a list of n sub-lists.

+ """

+ num_bugs = len(cf_matrix)

+ fathers = []

+ for column in range(num_bugs):

+ following_nodes = []

+ for row in range(num_bugs):

+ if cf_matrix[row][2 * column] == 1:

+ following_nodes.append(row)

+ for row in range(num_bugs):

+ if cf_matrix[row][2 * column + 1] == 1:

+ following_nodes.append(row)

+ fathers.append(following_nodes)

+

+ return fathers

+

+ @classmethod

+ def calc_rank(cls, fathers: list) -> List:

+ """

+ Calculate the page rank of each bit

+

+ :param fathers: List of n sub-lists

+ :return: ranking: List of page ranks of length n

+ """

+ print(f"Children: {fathers}")

+ all_nodes = [i for i in range(len(fathers))]

+ ranking = []

+

+ # create Graph

+ g = Graph(directed=True)

+ g.add_vertices(len(all_nodes), attributes={"label": range(len(all_nodes))})

+ for parent in range(len(fathers)):

+ for child in fathers[parent]:

+ if child == 0:

+ continue

+ g.add_edges([(parent, child)])

+ plot(g)

+

+ ranks = g.pagerank(directed=True)

+

+ added_to_rank_count = 0

+ while added_to_rank_count != len(all_nodes):

+ max_rank = min(ranks)

+ current_rank_bits = []

+ for i in range(len(ranks)):

+ if ranks[i] == max_rank:

+ current_rank_bits.append(i)

+ added_to_rank_count += 1

+ ranks[i] = np.inf

+ # ranks.remove(maxrank)

+ ranking.append(current_rank_bits)

+

+ print(ranking)

+ return ranking

diff --git a/reinforcement_learning/translators/startup_translatorT2_server.py b/reinforcement_learning/translators/startup_translatorT2_server.py

new file mode 100644

index 0000000..e5a7915

--- /dev/null

+++ b/reinforcement_learning/translators/startup_translatorT2_server.py

@@ -0,0 +1,85 @@

+"""

+Opens a Jesse Crossen GUI (TT board) on a local server.

+Board can then be set up in GUI and downloaded as image via specific button.

+This image is then used translator_image_to_code.py

+

+"""

+

+# start script with the following arguments in command line

+# ray start --head

+# serve start --http-host=127.0.0.1

+

+# standard library imports

+import os

+

+# 3rd party imports

+import ray

+from ray import serve

+from fastapi import FastAPI, Request

+from fastapi.middleware.cors import CORSMiddleware

+

+# local imports (i.e. our own code)

+from aux_image_to_code import Translator

+# noinspection PyUnresolvedReferences

+from utilities import utilities

+

+# initialise FastAPI API instance

+app = FastAPI()

+

+

+@serve.deployment

+@serve.ingress(app)

+class TranslationLayer:

+ translator: Translator

+

+ def __int__(self):

+ app.add_middleware(

+ CORSMiddleware,

+ allow_origins=['*', "http://localhost", "http://localhost:8080"],

+ allow_credentials=True,

+ allow_methods=["*"],

+ allow_headers=["*"],

+ )

+ pass

+

+ @app.get("/t2/{matrix}")

+ def translation2(self, matrix: str):

+ """

+ Route that accepts a matrix encoded as a string and calls the appropriate Translator function to convert it

+ to bugbit code.

+

+ :param matrix: matrix coded as string

+ :return: None

+ """

+ self.translator = Translator()

+ self.translator.matrix_to_bugbit_code(matrix)

+ return None

+

+ @app.post("/t2")

+ async def get_body(self, request: Request):

+ """

+ Alternative route to translate a matrix coded as string to bugbit code.

+

+ :param request: request object

+ :return: None

+ """

+

+ self.translator = Translator()

+ var = await request.json()

+ self.translator.matrix_to_bugbit_code(var)

+

+

+if __name__ == "__main__":

+ # start ray server

+ os.system("ray stop")

+ os.system("ray start --head")

+ os.system("serve start --http-host=127.0.0.1 --http-port=8000")

+

+ ray.init(address="auto", ignore_reinit_error=True, namespace="serve")

+ serve.start(detached=True) # or False

+

+ TranslationLayer.deploy()

+ # start TTSIM GUI

+ os.chdir(os.environ['TTSIM_PATH'])

+ os.system("make server")

+ os.chdir(os.getcwd())

diff --git a/reinforcement_learning/translators/translatorT1_cf_matrix_to_image.py b/reinforcement_learning/translators/translatorT1_cf_matrix_to_image.py

new file mode 100644

index 0000000..840a67c

--- /dev/null

+++ b/reinforcement_learning/translators/translatorT1_cf_matrix_to_image.py

@@ -0,0 +1,315 @@

+"""

+Translator (T1) from CF matrix to graphical TT board

+with help of functions defined in aux_partial_orderer.py

+and aux_matrix_to_image.py

+"""

+

+# standard library imports

+from typing import Optional, Tuple

+

+# 3rd party imports

+from igraph import *

+

+# local imports (i.e. our own code)

+from aux_partial_orderer import PartialOrderer

+from aux_matrix_to_image import *

+

+

+# noinspection PyShadowingNames

+class RankBasedTranslator:

+ num_bits = None

+ rows = None

+ columns = None

+ matrix = None

+ g = None

+ colors = None

+

+ def __init__(

+ self,

+ rows: Optional[int] = 20,

+ columns: Optional[int] = 20

+ ):

+ """

+ Builds empty TT board (of dimension rows x columns) as graph.

+ Graph is diamond grid-shaped: each node is only connected to its direct diagonal neighbours.

+

+ :param rows: number of rows in the TT grid

+ :param columns: number of columns in the TT grid

+ """

+ self.rows = rows

+ self.columns = columns

+ self.matrix = np.zeros(shape=(rows, columns), dtype=np.int64)

+

+ coordinates = []

+ self.edges = []

+ count = 0

+ for i in range(self.rows):

+ for j in range(self.columns):

+ coordinates.append((i, j))

+ if i % 2 != j % 2:

+ if i < self.rows - 1:

+

+ if j != 0:

+ self.edges.append((count, count + self.columns - 1))

+ if j != self.columns - 1:

+ self.edges.append((count, count + self.columns + 1))

+

+ if j != 0 and j != self.columns - 1:

+ pass

+ else:

+ pass

+ else:

+ self.edges.append((count, self.rows * self.columns))

+

+ count += 1

+ coordinates.append((self.rows, self.columns))

+

+ self.g = Graph(directed=True)

+

+ self.g.add_vertices(self.rows * self.columns + 1, attributes={"coordinates": coordinates})

+ self.g.vs["name"] = coordinates

+ self.g.vs["label"] = self.g.vs["name"]

+ self.colors = ["lightgrey"] * (self.rows * self.columns + 1)

+

+ # print(f"Edges: {edges}")

+ self.g.add_edges(self.edges)

+

+ def place_bits(self, rankings: list) -> List[Tuple]:

+ """

+ Generates coordinates to place pieces on from page ranks.

+

+ :param rankings: list of page ranks

+ :return: coordinates: list of position of TT bits on board.

+ """

+ coordinates = []

+ print(f"Ranks: {rankings}")

+ levels = len(rankings)

+ # we have to leave the last rows open to possibly add intercept tokens

+ placing_rows = np.ceil(np.linspace(start=0, stop=self.rows - 3, num=levels))

+ print(placing_rows)

+ for i in range(levels):

+ length = 1

+ try:

+ length = len(rankings[i])

+ except:

+ length = 1

+ if length != 1:

+ print(f"Linspace {(np.linspace(0, self.columns, num=length))}")

+ columns = np.ceil(np.linspace(0, self.columns, num=length + 2))[1:-1]

+ else:

+ columns = [np.ceil(np.linspace(0, self.columns, num=length + 2))[1]]

+ print(f"f Columns: {columns}")

+ for j in range(length):

+ if placing_rows[i] % 2 == columns[j] % 2:

+ columns[j] -= 1

+ coordinates.append((placing_rows[i], columns[j]))

+

+ # place Bits in Matrix

+ for (x, y) in coordinates:

+ self.matrix[int(x)][int(y)] = parts["BlueLeft"]

+ return coordinates

+

+ def find_shortest_paths(

+ self,

+ bit_coordinates: List[Tuple],

+ matrix: np.ndarray

+ ) -> List[List]:

+ """

+ Identifies placement of connecting pieces between bits.

+

+ :param bit_coordinates: placement of bits on TT board.

+ :param matrix: adjacency matrix of graph acting as empty TT board.

+ :return: shortest_paths: IDs of nodes traversed on shortest paths between bits.

+ """

+ bit_ids = []

+ for (i, j) in bit_coordinates:

+ bit_ids.append(i * self.columns + j)

+ print(f"Bit IDS: {bit_ids}")

+

+ for bid in bit_ids:

+ self.colors[int(bid)] = "blue"

+ self.g.vs["color"] = self.colors

+

+ print(f"Bit Coordinates {bit_coordinates}")

+ children = PartialOrderer.order(matrix)

+

+ for i in range(len(bit_ids)):

+ for j in children[i]:

+ shortest_paths = []

+ all_ids_without_ij = []

+ for b in bit_ids:

+ if b != bit_ids[i] and b != bit_ids[j]:

+ all_ids_without_ij.append(int(b))

+

+ delete_edges = []

+ for edge in self.edges:

+ for id in all_ids_without_ij:

+ if edge[0] == id or edge[1] == id:

+ delete_edges.append(edge)

+

+ self.g.delete_edges(delete_edges)

+

+ j = int(j)

+ i = int(i)

+ print(f"I {i}, {j}")

+ left_child_id = int(bit_ids[i] + self.columns - 1)

+ right_child_id = int(bit_ids[i] + self.columns + 1)

+ left_child_coord = self.get_coordinate(left_child_id)

+ right_child_coord = self.get_coordinate(right_child_id)

+ print(f"COORDS: {left_child_coord}")

+ if j == 0:

+ print("Link to Sink Node")

+ print(f"Path from {i}({bit_ids[i]}) to Sink Node)")

+