-

-

Notifications

You must be signed in to change notification settings - Fork 711

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Show column metadata plus links for foreign keys on arbitrary query results #1293

Comments

|

I've done various pieces of research into this over the past few years. Capturing what I've discovered in this ticket. The SQLite C API has functions that can help with this: https://www.sqlite.org/c3ref/column_database_name.html details those. But they're not exposed in the Python SQLite library. Maybe it would be possible to use them via |

|

A more promising route I found involved the >>> def print_args(*args, **kwargs):

... print("args", args, "kwargs", kwargs)

... return sqlite3.SQLITE_OK

>>> db = sqlite3.connect("fixtures.db")

>>> db.execute('select * from compound_primary_key join facetable on rowid').fetchall()

args (21, None, None, None, None) kwargs {}

args (20, 'compound_primary_key', 'pk1', 'main', None) kwargs {}

args (20, 'compound_primary_key', 'pk2', 'main', None) kwargs {}

args (20, 'compound_primary_key', 'content', 'main', None) kwargs {}

args (20, 'facetable', 'pk', 'main', None) kwargs {}

args (20, 'facetable', 'created', 'main', None) kwargs {}

args (20, 'facetable', 'planet_int', 'main', None) kwargs {}

args (20, 'facetable', 'on_earth', 'main', None) kwargs {}

args (20, 'facetable', 'state', 'main', None) kwargs {}

args (20, 'facetable', 'city_id', 'main', None) kwargs {}

args (20, 'facetable', 'neighborhood', 'main', None) kwargs {}

args (20, 'facetable', 'tags', 'main', None) kwargs {}

args (20, 'facetable', 'complex_array', 'main', None) kwargs {}

args (20, 'facetable', 'distinct_some_null', 'main', None) kwargs {}Those Then I found a snag: In [18]: db.execute('select 1 + 1 + (select max(rowid) from facetable)')

args (21, None, None, None, None) kwargs {}

args (31, None, 'max', None, None) kwargs {}

args (20, 'facetable', 'pk', 'main', None) kwargs {}

args (21, None, None, None, None) kwargs {}

args (20, 'facetable', '', None, None) kwargs {}Once a subselect is involved the order of the |

|

Here are all of the available constants: In [3]: for k in dir(sqlite3):

...: if k.startswith("SQLITE_"):

...: print(k, getattr(sqlite3, k))

...:

SQLITE_ALTER_TABLE 26

SQLITE_ANALYZE 28

SQLITE_ATTACH 24

SQLITE_CREATE_INDEX 1

SQLITE_CREATE_TABLE 2

SQLITE_CREATE_TEMP_INDEX 3

SQLITE_CREATE_TEMP_TABLE 4

SQLITE_CREATE_TEMP_TRIGGER 5

SQLITE_CREATE_TEMP_VIEW 6

SQLITE_CREATE_TRIGGER 7

SQLITE_CREATE_VIEW 8

SQLITE_CREATE_VTABLE 29

SQLITE_DELETE 9

SQLITE_DENY 1

SQLITE_DETACH 25

SQLITE_DONE 101

SQLITE_DROP_INDEX 10

SQLITE_DROP_TABLE 11

SQLITE_DROP_TEMP_INDEX 12

SQLITE_DROP_TEMP_TABLE 13

SQLITE_DROP_TEMP_TRIGGER 14

SQLITE_DROP_TEMP_VIEW 15

SQLITE_DROP_TRIGGER 16

SQLITE_DROP_VIEW 17

SQLITE_DROP_VTABLE 30

SQLITE_FUNCTION 31

SQLITE_IGNORE 2

SQLITE_INSERT 18

SQLITE_OK 0

SQLITE_PRAGMA 19

SQLITE_READ 20

SQLITE_RECURSIVE 33

SQLITE_REINDEX 27

SQLITE_SAVEPOINT 32

SQLITE_SELECT 21

SQLITE_TRANSACTION 22

SQLITE_UPDATE 23 |

|

Worth noting that adding In [20]: db.execute('select * from compound_primary_key join facetable on facetable.rowid = compound_primary_key.rowid limit 0').fetchall()

...:

args (21, None, None, None, None) kwargs {}

args (20, 'compound_primary_key', 'pk1', 'main', None) kwargs {}

args (20, 'compound_primary_key', 'pk2', 'main', None) kwargs {}

args (20, 'compound_primary_key', 'content', 'main', None) kwargs {}

args (20, 'facetable', 'pk', 'main', None) kwargs {}

args (20, 'facetable', 'created', 'main', None) kwargs {}

args (20, 'facetable', 'planet_int', 'main', None) kwargs {}

args (20, 'facetable', 'on_earth', 'main', None) kwargs {}

args (20, 'facetable', 'state', 'main', None) kwargs {}

args (20, 'facetable', 'city_id', 'main', None) kwargs {}

args (20, 'facetable', 'neighborhood', 'main', None) kwargs {}

args (20, 'facetable', 'tags', 'main', None) kwargs {}

args (20, 'facetable', 'complex_array', 'main', None) kwargs {}

args (20, 'facetable', 'distinct_some_null', 'main', None) kwargs {}

args (20, 'facetable', 'pk', 'main', None) kwargs {}

args (20, 'compound_primary_key', 'ROWID', 'main', None) kwargs {}

Out[20]: [] |

|

One option I've not fully explored yet: could I write my own custom SQLite C extension which exposes this functionality as a callable function? Then I could load that extension and run a SQL query something like this: Where |

|

I asked about this on the SQLite forum: https://sqlite.org/forum/forumpost/0180277fb7 |

|

The other approach I considered for this was to have my own SQL query parser running in Python, which could pick apart a complex query and figure out which column was sourced from which table. I dropped this idea because it felt that the moment A Python parser approach might be good enough to handle a subset of queries - those that don't use |

|

Oh wow, I just spotted https://github.com/macbre/sql-metadata

It's for MySQL, PostgreSQL and Hive right now but maybe getting it working with SQLite wouldn't be too hard? |

|

Had a fantastic suggestion on the SQLite forum: it might be possible to get what I want by interpreting the opcodes output by Copying the reply I posted to this thread: That's really useful, thanks! It looks like it might be possible for me to reconstruct where each column came from using the It looks like the opcodes I need to inspect are

The The I think this might work! |

Essential documentation for understanding that output: https://www.sqlite.org/opcode.html |

|

... that output might also provide a better way to extract variables than the current mechanism using a regular expression, by looking for the [UPDATE: it did indeed do that, see #1421] |

|

http://www.sqlite.org/draft/lang_explain.html says:

I'm going to keep exploring this though. |

|

This almost works, but throws errors with some queries (anything with a def columns_for_query(conn, sql):

rows = conn.execute('explain ' + sql).fetchall()

table_rootpage_by_register = {r['p1']: r['p2'] for r in rows if r['opcode'] == 'OpenRead'}

names_by_rootpage = dict(

conn.execute(

'select rootpage, name from sqlite_master where rootpage in ({})'.format(

', '.join(map(str, table_rootpage_by_register.values()))

)

)

)

columns_by_column_register = {}

for row in rows:

if row['opcode'] == 'Column':

addr, opcode, table_id, cid, column_register, p4, p5, comment = row

table = names_by_rootpage[table_rootpage_by_register[table_id]]

columns_by_column_register[column_register] = (table, cid)

result_row = [dict(r) for r in rows if r['opcode'] == 'ResultRow'][0]

registers = list(range(result_row["p1"], result_row["p1"] + result_row["p2"] - 1))

all_column_names = {}

for table in names_by_rootpage.values():

table_xinfo = conn.execute('pragma table_xinfo({})'.format(table)).fetchall()

for row in table_xinfo:

all_column_names[(table, row["cid"])] = row["name"]

final_output = []

for r in registers:

try:

table, cid = columns_by_column_register[r]

final_output.append((table, all_column_names[table, cid]))

except KeyError:

final_output.append((None, None))

return final_output |

|

Extracting variables with this trick appears to work OK, but you have to pass the correct variables to the >>> rows = conn.execute('explain select * from repos where id = :id', defaultdict(int))

>>> [dict(r) for r in rows if r['opcode'] == 'Variable']

[{'addr': 2,

'opcode': 'Variable',

'p1': 1,

'p2': 1,

'p3': 0,

'p4': ':id',

'p5': 0,

'comment': None}] |

|

I may need to do something special for rowid columns - there is a |

|

Here's some older example code that works with opcodes from Python, in this case to output indexes used by a query: https://github.com/plasticityai/supersqlite/blob/master/supersqlite/idxchk.py |

|

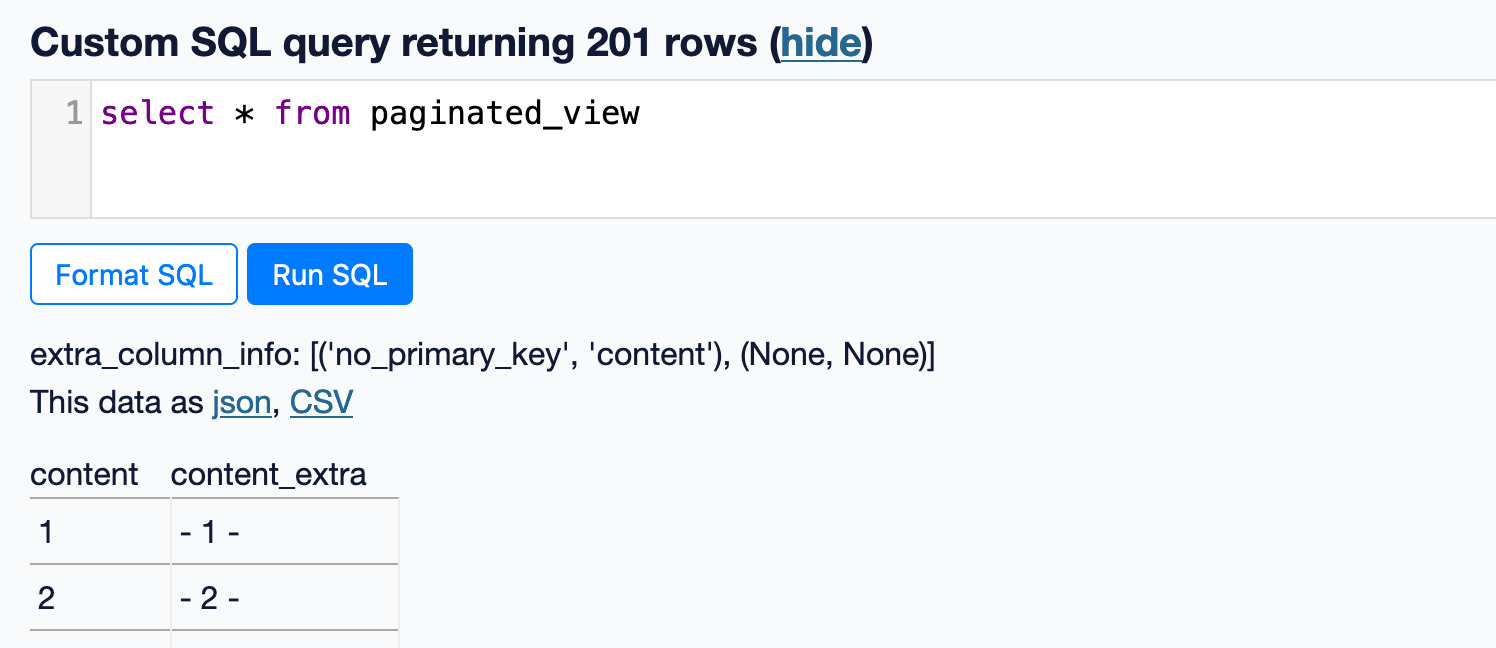

https://latest.datasette.io/fixtures?sql=explain+select+*+from+paginated_view will be an interesting test query - because CREATE VIEW paginated_view AS

SELECT

content,

'- ' || content || ' -' AS content_extra

FROM no_primary_key;So this will help test that the mechanism isn't confused by output columns that are created through a concatenation expression. |

|

Improved version of that function: def columns_for_query(conn, sql):

"""

Given a SQLite connection ``conn`` and a SQL query ``sql``,

returns a list of ``(table_name, column_name)`` pairs, one

per returned column. ``(None, None)`` if no table and column

could be derived.

"""

rows = conn.execute('explain ' + sql).fetchall()

table_rootpage_by_register = {r['p1']: r['p2'] for r in rows if r['opcode'] == 'OpenRead'}

names_by_rootpage = dict(

conn.execute(

'select rootpage, name from sqlite_master where rootpage in ({})'.format(

', '.join(map(str, table_rootpage_by_register.values()))

)

)

)

columns_by_column_register = {}

for row in rows:

if row['opcode'] in ('Rowid', 'Column'):

addr, opcode, table_id, cid, column_register, p4, p5, comment = row

table = names_by_rootpage[table_rootpage_by_register[table_id]]

columns_by_column_register[column_register] = (table, cid)

result_row = [dict(r) for r in rows if r['opcode'] == 'ResultRow'][0]

registers = list(range(result_row["p1"], result_row["p1"] + result_row["p2"]))

all_column_names = {}

for table in names_by_rootpage.values():

table_xinfo = conn.execute('pragma table_xinfo({})'.format(table)).fetchall()

for row in table_xinfo:

all_column_names[(table, row["cid"])] = row["name"]

final_output = []

for r in registers:

try:

table, cid = columns_by_column_register[r]

final_output.append((table, all_column_names[table, cid]))

except KeyError:

final_output.append((None, None))

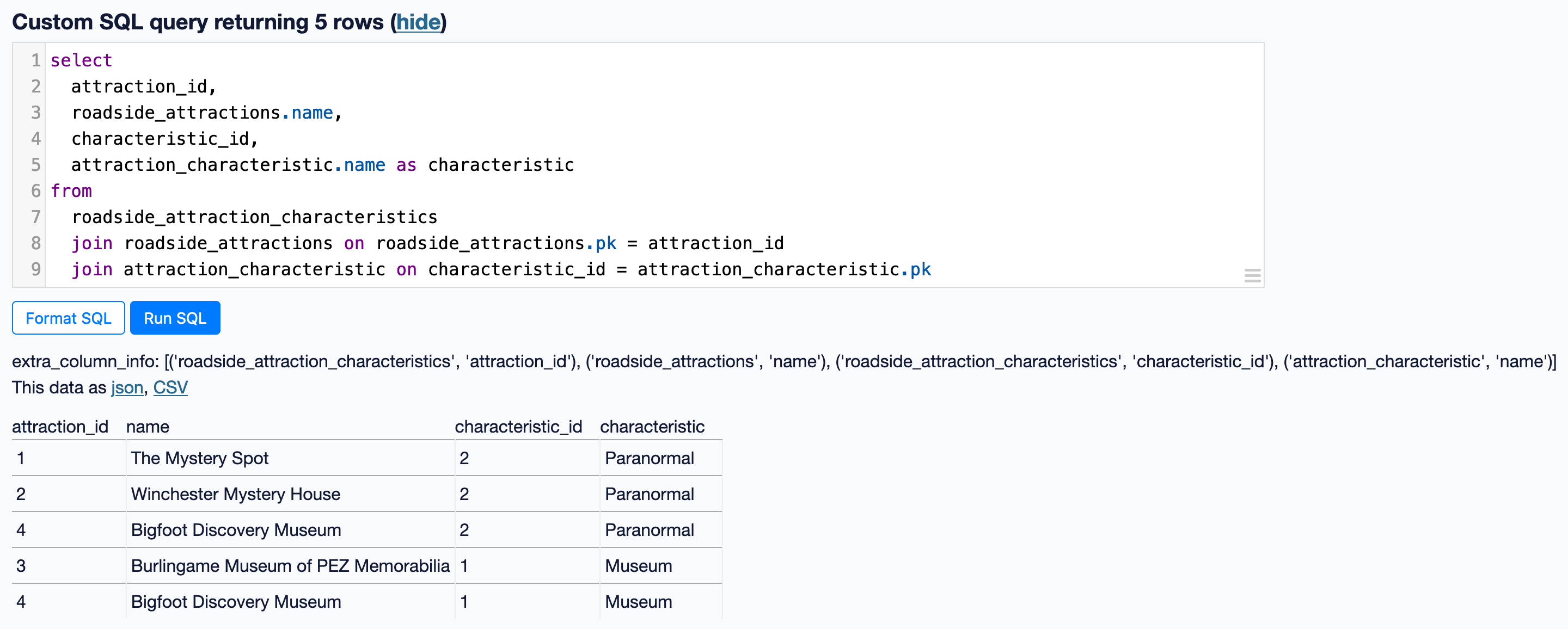

return final_outputIt works! diff --git a/datasette/templates/query.html b/datasette/templates/query.html

index 75f7f1b..9fe1d4f 100644

--- a/datasette/templates/query.html

+++ b/datasette/templates/query.html

@@ -67,6 +67,8 @@

</p>

</form>

+extra_column_info: {{ extra_column_info }}

+

{% if display_rows %}

<p class="export-links">This data as {% for name, url in renderers.items() %}<a href="{{ url }}">{{ name }}</a>{{ ", " if not loop.last }}{% endfor %}, <a href="{{ url_csv }}">CSV</a></p>

<div class="table-wrapper"><table class="rows-and-columns">

diff --git a/datasette/views/database.py b/datasette/views/database.py

index 7c36034..02f8039 100644

--- a/datasette/views/database.py

+++ b/datasette/views/database.py

@@ -10,6 +10,7 @@ import markupsafe

from datasette.utils import (

await_me_maybe,

check_visibility,

+ columns_for_query,

derive_named_parameters,

to_css_class,

validate_sql_select,

@@ -248,6 +249,8 @@ class QueryView(DataView):

query_error = None

+ extra_column_info = None

+

# Execute query - as write or as read

if write:

if request.method == "POST":

@@ -334,6 +337,10 @@ class QueryView(DataView):

database, sql, params_for_query, truncate=True, **extra_args

)

columns = [r[0] for r in results.description]

+

+ # Try to figure out extra column information

+ db = self.ds.get_database(database)

+ extra_column_info = await db.execute_fn(lambda conn: columns_for_query(conn, sql))

except sqlite3.DatabaseError as e:

query_error = e

results = None

@@ -462,6 +469,7 @@ class QueryView(DataView):

"show_hide_text": show_hide_text,

"show_hide_hidden": markupsafe.Markup(show_hide_hidden),

"hide_sql": hide_sql,

+ "extra_column_info": extra_column_info,

}

return ( |

Here's what it does for that: |

|

Trying to run |

|

Work will continue in PR #1434. |

|

The primary key column (or

|

|

Am I going to need to look at the |

|

Documentation for Running Compared with: So actually it looks like the |

|

SorterInsert:

SorterData:

OpenPseudo:

|

|

But the debug output here seems to be saying what we want it to say: We want to get back Why then are we seeing |

|

ResultRow:

Column:

|

|

My hunch here is that registers or columns are being reused in a way that makes my code break - my code is pretty dumb, there are places in it where maybe the first mention of a register wins instead of the last one? |

|

Some useful debug output: The Python code: def columns_for_query(conn, sql, params=None):

"""

Given a SQLite connection ``conn`` and a SQL query ``sql``, returns a list of

``(table_name, column_name)`` pairs corresponding to the columns that would be

returned by that SQL query.

Each pair indicates the source table and column for the returned column, or

``(None, None)`` if no table and column could be derived (e.g. for "select 1")

"""

if sql.lower().strip().startswith("explain"):

return []

opcodes = conn.execute("explain " + sql, params).fetchall()

table_rootpage_by_register = {

r["p1"]: r["p2"] for r in opcodes if r["opcode"] == "OpenRead"

}

print(f"{table_rootpage_by_register=}")

names_and_types_by_rootpage = dict(

[(r[0], (r[1], r[2])) for r in conn.execute(

"select rootpage, name, type from sqlite_master where rootpage in ({})".format(

", ".join(map(str, table_rootpage_by_register.values()))

)

)]

)

print(f"{names_and_types_by_rootpage=}")

columns_by_column_register = {}

for opcode in opcodes:

if opcode["opcode"] in ("Rowid", "Column"):

addr, opcode, table_id, cid, column_register, p4, p5, comment = opcode

try:

table = names_and_types_by_rootpage[table_rootpage_by_register[table_id]][0]

columns_by_column_register[column_register] = (table, cid)

except KeyError:

pass

result_row = [dict(r) for r in opcodes if r["opcode"] == "ResultRow"][0]

result_registers = list(range(result_row["p1"], result_row["p1"] + result_row["p2"]))

print(f"{result_registers=}")

print(f"{columns_by_column_register=}")

all_column_names = {}

for (table, _) in names_and_types_by_rootpage.values():

table_xinfo = conn.execute("pragma table_xinfo({})".format(table)).fetchall()

for column_info in table_xinfo:

all_column_names[(table, column_info["cid"])] = column_info["name"]

print(f"{all_column_names=}")

final_output = []

for register in result_registers:

try:

table, cid = columns_by_column_register[register]

final_output.append((table, all_column_names[table, cid]))

except KeyError:

final_output.append((None, None))

return final_output |

|

So it looks like the bug is in the code that populates |

|

More debug output: Those It looks like that Python code: def columns_for_query(conn, sql, params=None):

"""

Given a SQLite connection ``conn`` and a SQL query ``sql``, returns a list of

``(table_name, column_name)`` pairs corresponding to the columns that would be

returned by that SQL query.

Each pair indicates the source table and column for the returned column, or

``(None, None)`` if no table and column could be derived (e.g. for "select 1")

"""

if sql.lower().strip().startswith("explain"):

return []

opcodes = conn.execute("explain " + sql, params).fetchall()

table_rootpage_by_register = {

r["p1"]: r["p2"] for r in opcodes if r["opcode"] == "OpenRead"

}

print(f"{table_rootpage_by_register=}")

names_and_types_by_rootpage = dict(

[(r[0], (r[1], r[2])) for r in conn.execute(

"select rootpage, name, type from sqlite_master where rootpage in ({})".format(

", ".join(map(str, table_rootpage_by_register.values()))

)

)]

)

print(f"{names_and_types_by_rootpage=}")

columns_by_column_register = {}

for opcode_row in opcodes:

if opcode_row["opcode"] in ("Rowid", "Column"):

addr, opcode, table_id, cid, column_register, p4, p5, comment = opcode_row

print(f"{table_id=} {cid=} {column_register=}")

try:

table = names_and_types_by_rootpage[table_rootpage_by_register[table_id]][0]

columns_by_column_register[column_register] = (table, cid)

except KeyError as e:

print(" KeyError")

print(" ", e)

print(" table = names_and_types_by_rootpage[table_rootpage_by_register[table_id]][0]")

print(f" {names_and_types_by_rootpage=} {table_rootpage_by_register=} {table_id=}")

print(" columns_by_column_register[column_register] = (table, cid)")

print(f" {column_register=} = ({table=}, {cid=})")

pass

result_row = [dict(r) for r in opcodes if r["opcode"] == "ResultRow"][0]

result_registers = list(range(result_row["p1"], result_row["p1"] + result_row["p2"]))

print(f"{result_registers=}")

print(f"{columns_by_column_register=}")

all_column_names = {}

for (table, _) in names_and_types_by_rootpage.values():

table_xinfo = conn.execute("pragma table_xinfo({})".format(table)).fetchall()

for column_info in table_xinfo:

all_column_names[(table, column_info["cid"])] = column_info["name"]

print(f"{all_column_names=}")

final_output = []

for register in result_registers:

try:

table, cid = columns_by_column_register[register]

final_output.append((table, all_column_names[table, cid]))

except KeyError:

final_output.append((None, None))

return final_output |

|

So this line: Means "Take column 2 of table 3 (the pseudo-table) and store it in register 8" |

|

Need to figure out what column 2 of that pseudo-table is. I think the answer is here: I think the In which case column 2 would be But the debug code said "r[8]=state". |

|

Aha! That So if the This is really convoluted. I'm no longer confident I can get this to work in a sensible way, especially since I've not started exploring what complex nested tables with CTEs and sub-selects do yet. |

|

I think I need to look out for After all of that I'll be able to resolve that "table 3" reference. |

|

New theory: this is all about |

|

Another idea: strip out any |

|

Tried a more complicated query: explain select pk, text1, text2, [name with . and spaces] from searchable where rowid in (select rowid from searchable_fts where searchable_fts match escape_fts(:search)) order by text1 desc limit 101Here's the explain: Here the Frustratingly SQLite seems to be able to figure that out just fine, see the column of comments on the right hand side - but I only get those in the Maybe the key to that is this section: MakeRecord:

IdxInsert:

IdxLE:

|

|

I think I need to care about the following:

That might be enough. |

|

I would feel a lot more comfortable about all of this if I had a robust mechanism for running the Datasette test suite against multiple versions of SQLite itself. |

|

Maybe I split this out into a separate Python library that gets tested against every SQLite release I can possibly try it against, and then bakes out the supported release versions into the library code itself? Datasette could depend on that library. The library could be released independently of Datasette any time a new SQLite version comes out. I could even run a separate git scraper repo that checks for new SQLite releases and submits PRs against the library when a new release comes out. |

|

Another interesting query to consider: https://latest.datasette.io/fixtures?sql=explain+select+*+from++pragma_table_info%28+%27123_starts_with_digits%27%29 That one shows |

|

Did some more research into building SQLite custom versions via |

|

New approach: this time I'm building a simplified executor for the bytecode operations themselves. def execute_operations(operations, max_iterations = 100, trace=None):

trace = trace or (lambda *args: None)

registers: Dict[int, Any] = {}

cursors: Dict[int, Tuple[str, Dict]] = {}

instruction_pointer = 0

iterations = 0

result_row = None

while True:

iterations += 1

if iterations > max_iterations:

break

operation = operations[instruction_pointer]

trace(instruction_pointer, dict(operation))

opcode = operation["opcode"]

if opcode == "Init":

if operation["p2"] != 0:

instruction_pointer = operation["p2"]

continue

else:

instruction_pointer += 1

continue

elif opcode == "Goto":

instruction_pointer = operation["p2"]

continue

elif opcode == "Halt":

break

elif opcode == "OpenRead":

cursors[operation["p1"]] = ("database_table", {

"rootpage": operation["p2"],

"connection": operation["p3"],

})

elif opcode == "OpenEphemeral":

cursors[operation["p1"]] = ("ephemeral", {

"num_columns": operation["p2"],

"index_keys": [],

})

elif opcode == "MakeRecord":

registers[operation["p3"]] = ("MakeRecord", {

"registers": list(range(operation["p1"] + operation["p2"]))

})

elif opcode == "IdxInsert":

record = registers[operation["p2"]]

cursors[operation["p1"]][1]["index_keys"].append(record)

elif opcode == "Rowid":

registers[operation["p2"]] = ("rowid", {

"table": operation["p1"]

})

elif opcode == "Sequence":

registers[operation["p2"]] = ("sequence", {

"next_from_cursor": operation["p1"]

})

elif opcode == "Column":

registers[operation["p3"]] = ("column", {

"cursor": operation["p1"],

"column_offset": operation["p2"]

})

elif opcode == "ResultRow":

p1 = operation["p1"]

p2 = operation["p2"]

trace("ResultRow: ", list(range(p1, p1 + p2)), registers)

result_row = [registers.get(i) for i in range(p1, p1 + p2)]

elif opcode == "Integer":

registers[operation["p2"]] = ("Integer", operation["p1"])

elif opcode == "String8":

registers[operation["p2"]] = ("String", operation["p4"])

instruction_pointer += 1

return {"registers": registers, "cursors": cursors, "result_row": result_row}Results are promising! Here's what happens with a union across three tables: Note how the result_row refers to cursor 3, which is an ephemeral table which had three different sets of |

I'm going to do this, and call the Python library |

|

Accidentally closed. |

Related to #620. It would be really cool if Datasette could magically detect the source of the data displayed in an arbitrary query and, if that data represents a foreign key, display it as a hyperlink.

Compare https://latest.datasette.io/fixtures/facetable

To https://latest.datasette.io/fixtures?sql=select+pk%2C+created%2C+planet_int%2C+on_earth%2C+state%2C+city_id%2C+neighborhood%2C+tags%2C+complex_array%2C+distinct_some_null+from+facetable+order+by+pk+limit+101

The text was updated successfully, but these errors were encountered: