diff --git a/.editorconfig b/.editorconfig

index c3a07968f6bd..7b96363f113e 100644

--- a/.editorconfig

+++ b/.editorconfig

@@ -158,13 +158,18 @@ dotnet_diagnostic.CA1032.severity = none # We're using RCS1194 which seems to co

dotnet_diagnostic.CA1034.severity = none # Do not nest type. Alternatively, change its accessibility so that it is not externally visible

dotnet_diagnostic.CA1062.severity = none # Disable null check, C# already does it for us

dotnet_diagnostic.CA1303.severity = none # Do not pass literals as localized parameters

+dotnet_diagnostic.CA1305.severity = none # Operation could vary based on current user's locale settings

+dotnet_diagnostic.CA1307.severity = none # Operation has an overload that takes a StringComparison

dotnet_diagnostic.CA1508.severity = none # Avoid dead conditional code. Too many false positives.

-dotnet_diagnostic.CA1510.severity = none

+dotnet_diagnostic.CA1510.severity = none # ArgumentNullException.Throw

+dotnet_diagnostic.CA1512.severity = none # ArgumentOutOfRangeException.Throw

dotnet_diagnostic.CA1515.severity = none # Making public types from exes internal

dotnet_diagnostic.CA1805.severity = none # Member is explicitly initialized to its default value

dotnet_diagnostic.CA1822.severity = none # Member does not access instance data and can be marked as static

dotnet_diagnostic.CA1848.severity = none # For improved performance, use the LoggerMessage delegates

dotnet_diagnostic.CA1849.severity = none # Use async equivalent; analyzer is currently noisy

+dotnet_diagnostic.CA1865.severity = none # StartsWith(char)

+dotnet_diagnostic.CA1867.severity = none # EndsWith(char)

dotnet_diagnostic.CA2007.severity = none # Do not directly await a Task

dotnet_diagnostic.CA2225.severity = none # Operator overloads have named alternates

dotnet_diagnostic.CA2227.severity = none # Change to be read-only by removing the property setter

diff --git a/.github/_typos.toml b/.github/_typos.toml

index 81e68cf0fcf5..a56c70770c47 100644

--- a/.github/_typos.toml

+++ b/.github/_typos.toml

@@ -14,6 +14,7 @@ extend-exclude = [

"vocab.bpe",

"CodeTokenizerTests.cs",

"test_code_tokenizer.py",

+ "*response.json",

]

[default.extend-words]

@@ -25,6 +26,8 @@ HD = "HD" # Test header value

EOF = "EOF" # End of File

ans = "ans" # Short for answers

arange = "arange" # Method in Python numpy package

+prompty = "prompty" # prompty is a format name.

+ist = "ist" # German language

[default.extend-identifiers]

ags = "ags" # Azure Graph Service

diff --git a/.github/workflows/dotnet-build-and-test.yml b/.github/workflows/dotnet-build-and-test.yml

index 43c51fe5dcb0..876a75048090 100644

--- a/.github/workflows/dotnet-build-and-test.yml

+++ b/.github/workflows/dotnet-build-and-test.yml

@@ -52,40 +52,40 @@ jobs:

fail-fast: false

matrix:

include:

- - { dotnet: "8.0-jammy", os: "ubuntu", configuration: Release }

- {

dotnet: "8.0",

- os: "windows",

- configuration: Debug,

+ os: "ubuntu-latest",

+ configuration: Release,

integration-tests: true,

}

- - { dotnet: "8.0", os: "windows", configuration: Release }

-

- runs-on: ubuntu-latest

- container:

- image: mcr.microsoft.com/dotnet/sdk:${{ matrix.dotnet }}

- env:

- NUGET_CERT_REVOCATION_MODE: offline

- GITHUB_ACTIONS: "true"

+ - { dotnet: "8.0", os: "windows-latest", configuration: Debug }

+ - { dotnet: "8.0", os: "windows-latest", configuration: Release }

+ runs-on: ${{ matrix.os }}

steps:

- uses: actions/checkout@v4

-

+ - name: Setup dotnet ${{ matrix.dotnet }}

+ uses: actions/setup-dotnet@v3

+ with:

+ dotnet-version: ${{ matrix.dotnet }}

- name: Build dotnet solutions

+ shell: bash

run: |

export SOLUTIONS=$(find ./dotnet/ -type f -name "*.sln" | tr '\n' ' ')

for solution in $SOLUTIONS; do

- dotnet build -c ${{ matrix.configuration }} /warnaserror $solution

+ dotnet build $solution -c ${{ matrix.configuration }} --warnaserror

done

- name: Run Unit Tests

+ shell: bash

run: |

export UT_PROJECTS=$(find ./dotnet -type f -name "*.UnitTests.csproj" | grep -v -E "(Experimental.Orchestration.Flow.UnitTests.csproj|Experimental.Assistants.UnitTests.csproj)" | tr '\n' ' ')

for project in $UT_PROJECTS; do

- dotnet test -c ${{ matrix.configuration }} $project --no-build -v Normal --logger trx --collect:"XPlat Code Coverage" --results-directory:"TestResults/Coverage/"

+ dotnet test -c ${{ matrix.configuration }} $project --no-build -v Normal --logger trx --collect:"XPlat Code Coverage" --results-directory:"TestResults/Coverage/" -- DataCollectionRunSettings.DataCollectors.DataCollector.Configuration.ExcludeByAttribute=ObsoleteAttribute,GeneratedCodeAttribute,CompilerGeneratedAttribute,ExcludeFromCodeCoverageAttribute

done

- name: Run Integration Tests

+ shell: bash

if: github.event_name != 'pull_request' && matrix.integration-tests

run: |

export INTEGRATION_TEST_PROJECTS=$(find ./dotnet -type f -name "*IntegrationTests.csproj" | grep -v "Experimental.Orchestration.Flow.IntegrationTests.csproj" | tr '\n' ' ')

@@ -98,9 +98,9 @@ jobs:

AzureOpenAI__DeploymentName: ${{ vars.AZUREOPENAI__DEPLOYMENTNAME }}

AzureOpenAIEmbeddings__DeploymentName: ${{ vars.AZUREOPENAIEMBEDDING__DEPLOYMENTNAME }}

AzureOpenAI__Endpoint: ${{ secrets.AZUREOPENAI__ENDPOINT }}

- AzureOpenAIEmbeddings__Endpoint: ${{ secrets.AZUREOPENAI__ENDPOINT }}

+ AzureOpenAIEmbeddings__Endpoint: ${{ secrets.AZUREOPENAI_EASTUS__ENDPOINT }}

AzureOpenAI__ApiKey: ${{ secrets.AZUREOPENAI__APIKEY }}

- AzureOpenAIEmbeddings__ApiKey: ${{ secrets.AZUREOPENAI__APIKEY }}

+ AzureOpenAIEmbeddings__ApiKey: ${{ secrets.AZUREOPENAI_EASTUS__APIKEY }}

Planners__AzureOpenAI__ApiKey: ${{ secrets.PLANNERS__AZUREOPENAI__APIKEY }}

Planners__AzureOpenAI__Endpoint: ${{ secrets.PLANNERS__AZUREOPENAI__ENDPOINT }}

Planners__AzureOpenAI__DeploymentName: ${{ vars.PLANNERS__AZUREOPENAI__DEPLOYMENTNAME }}

diff --git a/.github/workflows/python-integration-tests.yml b/.github/workflows/python-integration-tests.yml

index 475fe4ca02b1..b02fc8eae1ed 100644

--- a/.github/workflows/python-integration-tests.yml

+++ b/.github/workflows/python-integration-tests.yml

@@ -76,26 +76,21 @@ jobs:

env: # Set Azure credentials secret as an input

HNSWLIB_NO_NATIVE: 1

Python_Integration_Tests: Python_Integration_Tests

- AzureOpenAI__Label: azure-text-davinci-003

- AzureOpenAIEmbedding__Label: azure-text-embedding-ada-002

- AzureOpenAI__DeploymentName: ${{ vars.AZUREOPENAI__DEPLOYMENTNAME }}

- AzureOpenAI__Text__DeploymentName: ${{ vars.AZUREOPENAI__TEXT__DEPLOYMENTNAME }}

- AzureOpenAIChat__DeploymentName: ${{ vars.AZUREOPENAI__CHAT__DEPLOYMENTNAME }}

- AzureOpenAIEmbeddings__DeploymentName: ${{ vars.AZUREOPENAIEMBEDDINGS__DEPLOYMENTNAME2 }}

- AzureOpenAIEmbeddings_EastUS__DeploymentName: ${{ vars.AZUREOPENAIEMBEDDINGS_EASTUS__DEPLOYMENTNAME}}

- AzureOpenAI__Endpoint: ${{ secrets.AZUREOPENAI__ENDPOINT }}

- AzureOpenAI_EastUS__Endpoint: ${{ secrets.AZUREOPENAI_EASTUS__ENDPOINT }}

- AzureOpenAI_EastUS__ApiKey: ${{ secrets.AZUREOPENAI_EASTUS__APIKEY }}

- AzureOpenAIEmbeddings__Endpoint: ${{ secrets.AZUREOPENAI__ENDPOINT }}

- AzureOpenAI__ApiKey: ${{ secrets.AZUREOPENAI__APIKEY }}

- AzureOpenAIEmbeddings__ApiKey: ${{ secrets.AZUREOPENAI__APIKEY }}

- Bing__ApiKey: ${{ secrets.BING__APIKEY }}

- OpenAI__ApiKey: ${{ secrets.OPENAI__APIKEY }}

- Pinecone__ApiKey: ${{ secrets.PINECONE__APIKEY }}

- Pinecone__Environment: ${{ secrets.PINECONE__ENVIRONMENT }}

- Postgres__Connectionstr: ${{secrets.POSTGRES__CONNECTIONSTR}}

- AZURE_COGNITIVE_SEARCH_ADMIN_KEY: ${{secrets.AZURE_COGNITIVE_SEARCH_ADMIN_KEY}}

- AZURE_COGNITIVE_SEARCH_ENDPOINT: ${{secrets.AZURE_COGNITIVE_SEARCH_ENDPOINT}}

+ AZURE_OPENAI_EMBEDDING_DEPLOYMENT_NAME: ${{ vars.AZURE_OPENAI_EMBEDDING_DEPLOYMENT_NAME }} # azure-text-embedding-ada-002

+ AZURE_OPENAI_CHAT_DEPLOYMENT_NAME: ${{ vars.AZURE_OPENAI_CHAT_DEPLOYMENT_NAME }}

+ AZURE_OPENAI_TEXT_DEPLOYMENT_NAME: ${{ vars.AZURE_OPENAI_TEXT_DEPLOYMENT_NAME }}

+ AZURE_OPENAI_API_VERSION: ${{ vars.AZURE_OPENAI_API_VERSION }}

+ AZURE_OPENAI_ENDPOINT: ${{ secrets.AZURE_OPENAI_ENDPOINT }}

+ AZURE_OPENAI_API_KEY: ${{ secrets.AZURE_OPENAI_API_KEY }}

+ BING_API_KEY: ${{ secrets.BING_API_KEY }}

+ OPENAI_CHAT_MODEL_ID: ${{ vars.OPENAI_CHAT_MODEL_ID }}

+ OPENAI_TEXT_MODEL_ID: ${{ vars.OPENAI_TEXT_MODEL_ID }}

+ OPENAI_EMBEDDING_MODEL_ID: ${{ vars.OPENAI_EMBEDDING_MODEL_ID }}

+ OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}

+ PINECONE_API_KEY: ${{ secrets.PINECONE__APIKEY }}

+ POSTGRES_CONNECTION_STRING: ${{secrets.POSTGRES__CONNECTIONSTR}}

+ AZURE_AI_SEARCH_API_KEY: ${{secrets.AZURE_AI_SEARCH_API_KEY}}

+ AZURE_AI_SEARCH_ENDPOINT: ${{secrets.AZURE_AI_SEARCH_ENDPOINT}}

MONGODB_ATLAS_CONNECTION_STRING: ${{secrets.MONGODB_ATLAS_CONNECTION_STRING}}

run: |

if ${{ matrix.os == 'ubuntu-latest' }}; then

@@ -143,26 +138,21 @@ jobs:

env: # Set Azure credentials secret as an input

HNSWLIB_NO_NATIVE: 1

Python_Integration_Tests: Python_Integration_Tests

- AzureOpenAI__Label: azure-text-davinci-003

- AzureOpenAIEmbedding__Label: azure-text-embedding-ada-002

- AzureOpenAI__DeploymentName: ${{ vars.AZUREOPENAI__DEPLOYMENTNAME }}

- AzureOpenAI__Text__DeploymentName: ${{ vars.AZUREOPENAI__TEXT__DEPLOYMENTNAME }}

- AzureOpenAIChat__DeploymentName: ${{ vars.AZUREOPENAI__CHAT__DEPLOYMENTNAME }}

- AzureOpenAIEmbeddings__DeploymentName: ${{ vars.AZUREOPENAIEMBEDDINGS__DEPLOYMENTNAME2 }}

- AzureOpenAIEmbeddings_EastUS__DeploymentName: ${{ vars.AZUREOPENAIEMBEDDINGS_EASTUS__DEPLOYMENTNAME}}

- AzureOpenAI__Endpoint: ${{ secrets.AZUREOPENAI__ENDPOINT }}

- AzureOpenAIEmbeddings__Endpoint: ${{ secrets.AZUREOPENAI__ENDPOINT }}

- AzureOpenAI__ApiKey: ${{ secrets.AZUREOPENAI__APIKEY }}

- AzureOpenAI_EastUS__Endpoint: ${{ secrets.AZUREOPENAI_EASTUS__ENDPOINT }}

- AzureOpenAI_EastUS__ApiKey: ${{ secrets.AZUREOPENAI_EASTUS__APIKEY }}

- AzureOpenAIEmbeddings__ApiKey: ${{ secrets.AZUREOPENAI__APIKEY }}

- Bing__ApiKey: ${{ secrets.BING__APIKEY }}

- OpenAI__ApiKey: ${{ secrets.OPENAI__APIKEY }}

- Pinecone__ApiKey: ${{ secrets.PINECONE__APIKEY }}

- Pinecone__Environment: ${{ secrets.PINECONE__ENVIRONMENT }}

- Postgres__Connectionstr: ${{secrets.POSTGRES__CONNECTIONSTR}}

- AZURE_COGNITIVE_SEARCH_ADMIN_KEY: ${{secrets.AZURE_COGNITIVE_SEARCH_ADMIN_KEY}}

- AZURE_COGNITIVE_SEARCH_ENDPOINT: ${{secrets.AZURE_COGNITIVE_SEARCH_ENDPOINT}}

+ AZURE_OPENAI_EMBEDDING_DEPLOYMENT_NAME: ${{ vars.AZURE_OPENAI_EMBEDDING_DEPLOYMENT_NAME }} # azure-text-embedding-ada-002

+ AZURE_OPENAI_CHAT_DEPLOYMENT_NAME: ${{ vars.AZURE_OPENAI_CHAT_DEPLOYMENT_NAME }}

+ AZURE_OPENAI_TEXT_DEPLOYMENT_NAME: ${{ vars.AZURE_OPENAI_TEXT_DEPLOYMENT_NAME }}

+ AZURE_OPENAI_API_VERSION: ${{ vars.AZURE_OPENAI_API_VERSION }}

+ AZURE_OPENAI_ENDPOINT: ${{ secrets.AZURE_OPENAI_ENDPOINT }}

+ AZURE_OPENAI_API_KEY: ${{ secrets.AZURE_OPENAI_API_KEY }}

+ BING_API_KEY: ${{ secrets.BING_API_KEY }}

+ OPENAI_CHAT_MODEL_ID: ${{ vars.OPENAI_CHAT_MODEL_ID }}

+ OPENAI_TEXT_MODEL_ID: ${{ vars.OPENAI_TEXT_MODEL_ID }}

+ OPENAI_EMBEDDING_MODEL_ID: ${{ vars.OPENAI_EMBEDDING_MODEL_ID }}

+ OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}

+ PINECONE_API_KEY: ${{ secrets.PINECONE__APIKEY }}

+ POSTGRES_CONNECTION_STRING: ${{secrets.POSTGRES__CONNECTIONSTR}}

+ AZURE_AI_SEARCH_API_KEY: ${{secrets.AZURE_AI_SEARCH_API_KEY}}

+ AZURE_AI_SEARCH_ENDPOINT: ${{secrets.AZURE_AI_SEARCH_ENDPOINT}}

MONGODB_ATLAS_CONNECTION_STRING: ${{secrets.MONGODB_ATLAS_CONNECTION_STRING}}

run: |

if ${{ matrix.os == 'ubuntu-latest' }}; then

diff --git a/.github/workflows/python-lint.yml b/.github/workflows/python-lint.yml

index 2864db70442b..3f20ae2f0d02 100644

--- a/.github/workflows/python-lint.yml

+++ b/.github/workflows/python-lint.yml

@@ -7,16 +7,15 @@ on:

- 'python/**'

jobs:

- ruff:

+ pre-commit:

if: '!cancelled()'

strategy:

fail-fast: false

matrix:

python-version: ["3.10"]

runs-on: ubuntu-latest

- timeout-minutes: 5

+ continue-on-error: true

steps:

- - run: echo "/root/.local/bin" >> $GITHUB_PATH

- uses: actions/checkout@v4

- name: Install poetry

run: pipx install poetry

@@ -24,50 +23,6 @@ jobs:

with:

python-version: ${{ matrix.python-version }}

cache: "poetry"

- - name: Install Semantic Kernel

- run: cd python && poetry install --no-ansi

- - name: Run ruff

- run: cd python && poetry run ruff check .

- black:

- if: '!cancelled()'

- strategy:

- fail-fast: false

- matrix:

- python-version: ["3.10"]

- runs-on: ubuntu-latest

- timeout-minutes: 5

- steps:

- - run: echo "/root/.local/bin" >> $GITHUB_PATH

- - uses: actions/checkout@v4

- - name: Install poetry

- run: pipx install poetry

- - uses: actions/setup-python@v5

- with:

- python-version: ${{ matrix.python-version }}

- cache: "poetry"

- - name: Install Semantic Kernel

- run: cd python && poetry install --no-ansi

- - name: Run black

- run: cd python && poetry run black --check .

- mypy:

- if: '!cancelled()'

- strategy:

- fail-fast: false

- matrix:

- python-version: ["3.10"]

- runs-on: ubuntu-latest

- timeout-minutes: 5

- steps:

- - run: echo "/root/.local/bin" >> $GITHUB_PATH

- - uses: actions/checkout@v4

- - name: Install poetry

- run: pipx install poetry

- - uses: actions/setup-python@v5

- with:

- python-version: ${{ matrix.python-version }}

- cache: "poetry"

- - name: Install Semantic Kernel

- run: cd python && poetry install --no-ansi

- - name: Run mypy

- run: cd python && poetry run mypy -p semantic_kernel --config-file=mypy.ini

-

+ - name: Install dependencies

+ run: cd python && poetry install

+ - uses: pre-commit/action@v3.0.1

diff --git a/.github/workflows/python-test-coverage.yml b/.github/workflows/python-test-coverage.yml

index 7eaea6ac1f56..617dddf63c72 100644

--- a/.github/workflows/python-test-coverage.yml

+++ b/.github/workflows/python-test-coverage.yml

@@ -10,7 +10,6 @@ jobs:

python-tests-coverage:

name: Create Test Coverage Messages

runs-on: ${{ matrix.os }}

- continue-on-error: true

permissions:

pull-requests: write

contents: read

@@ -21,14 +20,17 @@ jobs:

os: [ubuntu-latest]

steps:

- name: Wait for unit tests to succeed

+ continue-on-error: true

uses: lewagon/wait-on-check-action@v1.3.4

with:

ref: ${{ github.event.pull_request.head.sha }}

check-name: 'Python Unit Tests (${{ matrix.python-version}}, ${{ matrix.os }})'

repo-token: ${{ secrets.GH_ACTIONS_PR_WRITE }}

wait-interval: 10

+ allowed-conclusions: success

- uses: actions/checkout@v4

- name: Download coverage

+ continue-on-error: true

uses: dawidd6/action-download-artifact@v3

with:

name: python-coverage-${{ matrix.os }}-${{ matrix.python-version }}.txt

@@ -37,6 +39,7 @@ jobs:

search_artifacts: true

if_no_artifact_found: warn

- name: Download pytest

+ continue-on-error: true

uses: dawidd6/action-download-artifact@v3

with:

name: pytest-${{ matrix.os }}-${{ matrix.python-version }}.xml

@@ -45,6 +48,7 @@ jobs:

search_artifacts: true

if_no_artifact_found: warn

- name: Pytest coverage comment

+ continue-on-error: true

id: coverageComment

uses: MishaKav/pytest-coverage-comment@main

with:

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index 580c7fd67815..f7d2de87b67f 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -7,23 +7,37 @@ repos:

- id: sync_with_poetry

args: [--config=.pre-commit-config.yaml, --db=python/.conf/packages_list.json, python/poetry.lock]

- repo: https://github.com/pre-commit/pre-commit-hooks

- rev: v4.0.1

+ rev: v4.6.0

hooks:

- id: check-toml

files: \.toml$

- id: check-yaml

files: \.yaml$

+ - id: check-json

+ files: \.json$

+ exclude: ^python\/\.vscode\/.*

- id: end-of-file-fixer

files: \.py$

- id: mixed-line-ending

files: \.py$

- - repo: https://github.com/psf/black

- rev: 24.4.0

+ - id: debug-statements

+ files: ^python\/semantic_kernel\/.*\.py$

+ - id: check-ast

+ name: Check Valid Python Samples

+ types: ["python"]

+ - repo: https://github.com/nbQA-dev/nbQA

+ rev: 1.8.5

hooks:

- - id: black

- files: \.py$

+ - id: nbqa-check-ast

+ name: Check Valid Python Notebooks

+ types: ["jupyter"]

+ - repo: https://github.com/asottile/pyupgrade

+ rev: v3.15.2

+ hooks:

+ - id: pyupgrade

+ args: [--py310-plus]

- repo: https://github.com/astral-sh/ruff-pre-commit

- rev: v0.4.1

+ rev: v0.4.5

hooks:

- id: ruff

args: [ --fix, --exit-non-zero-on-fix ]

@@ -36,3 +50,9 @@ repos:

language: system

types: [python]

pass_filenames: false

+ - repo: https://github.com/PyCQA/bandit

+ rev: 1.7.8

+ hooks:

+ - id: bandit

+ args: ["-c", "python/pyproject.toml"]

+ additional_dependencies: [ "bandit[toml]" ]

\ No newline at end of file

diff --git a/.vscode/settings.json b/.vscode/settings.json

index dece652ca33a..3dc48d0f6e75 100644

--- a/.vscode/settings.json

+++ b/.vscode/settings.json

@@ -72,6 +72,7 @@

},

"cSpell.words": [

"Partitioner",

+ "Prompty",

"SKEXP"

],

"[java]": {

diff --git a/README.md b/README.md

index 9a0f0f37413b..c400ede21d35 100644

--- a/README.md

+++ b/README.md

@@ -90,7 +90,7 @@ The fastest way to learn how to use Semantic Kernel is with our C# and Python Ju

demonstrate how to use Semantic Kernel with code snippets that you can run with a push of a button.

- [Getting Started with C# notebook](dotnet/notebooks/00-getting-started.ipynb)

-- [Getting Started with Python notebook](python/notebooks/00-getting-started.ipynb)

+- [Getting Started with Python notebook](python/samples/getting_started/00-getting-started.ipynb)

Once you've finished the getting started notebooks, you can then check out the main walkthroughs

on our Learn site. Each sample comes with a completed C# and Python project that you can run locally.

@@ -108,45 +108,6 @@ Finally, refer to our API references for more details on the C# and Python APIs:

- [C# API reference](https://learn.microsoft.com/en-us/dotnet/api/microsoft.semantickernel?view=semantic-kernel-dotnet)

- Python API reference (coming soon)

-## Chat Copilot: see what's possible with Semantic Kernel

-

-If you're interested in seeing a full end-to-end example of how to use Semantic Kernel, check out

-our [Chat Copilot](https://github.com/microsoft/chat-copilot) reference application. Chat Copilot

-is a chatbot that demonstrates the power of Semantic Kernel. By combining plugins, planners, and personas,

-we demonstrate how you can build a chatbot that can maintain long-running conversations with users while

-also leveraging plugins to integrate with other services.

-

-

-

-You can run the app yourself by downloading it from its [GitHub repo](https://github.com/microsoft/chat-copilot).

-

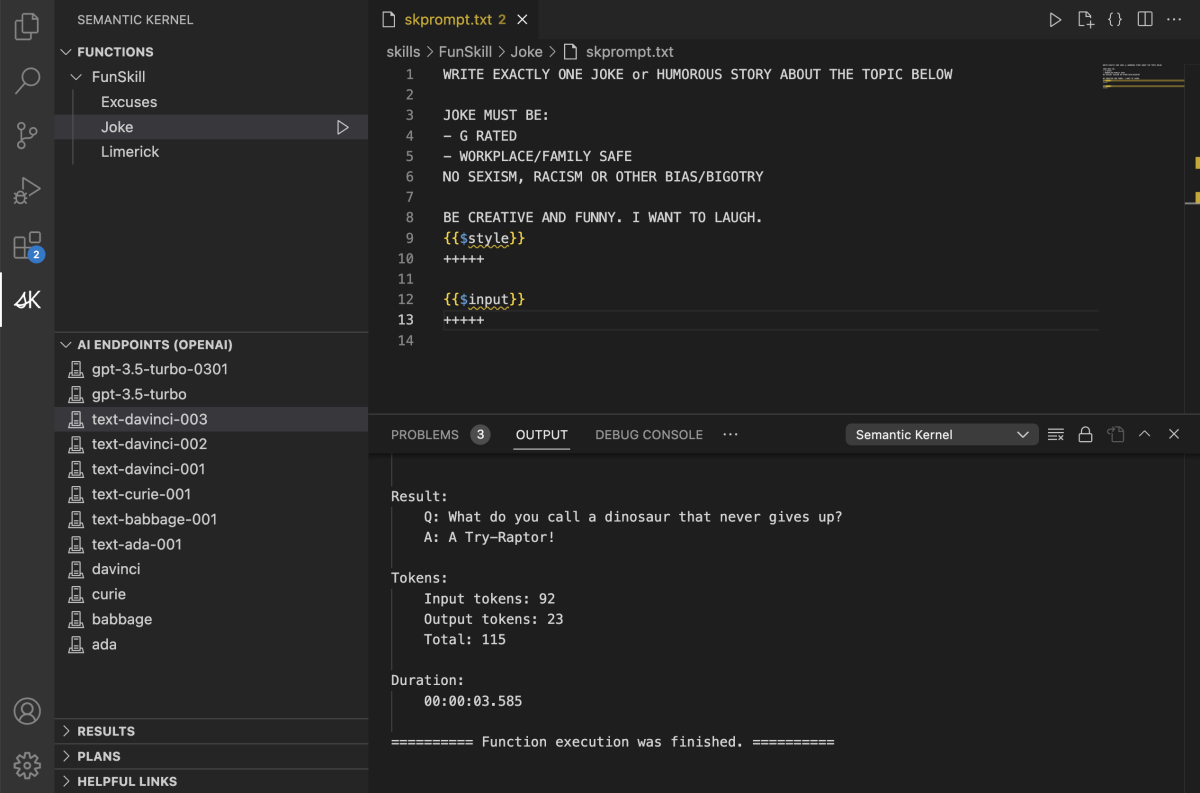

-## Visual Studio Code extension: design semantic functions with ease

-

-The [Semantic Kernel extension for Visual Studio Code](https://learn.microsoft.com/en-us/semantic-kernel/vs-code-tools/)

-makes it easy to design and test semantic functions. The extension provides an interface for

-designing semantic functions and allows you to test them with a push of a button with your

-existing models and data.

-

-

-

-In the above screenshot, you can see the extension in action:

-

-- Syntax highlighting for semantic functions

-- Code completion for semantic functions

-- LLM model picker

-- Run button to test the semantic function with your input data

-

-## Check out our other repos!

-

-If you like Semantic Kernel, you may also be interested in other repos the Semantic Kernel team supports:

-

-| Repo | Description |

-| --------------------------------------------------------------------------------- | --------------------------------------------------------------------------------------------- |

-| [Chat Copilot](https://github.com/microsoft/chat-copilot) | A reference application that demonstrates how to build a chatbot with Semantic Kernel. |

-| [Semantic Kernel Docs](https://github.com/MicrosoftDocs/semantic-kernel-docs) | The home for Semantic Kernel documentation that appears on the Microsoft learn site. |

-| [Semantic Kernel Starters](https://github.com/microsoft/semantic-kernel-starters) | Starter projects for Semantic Kernel to make it easier to get started. |

-| [Kernel Memory](https://github.com/microsoft/kernel-memory) | A scalable Memory service to store information and ask questions using the RAG pattern. |

-

## Join the community

We welcome your contributions and suggestions to SK community! One of the easiest

diff --git a/docs/decisions/0040-chat-prompt-xml-support.md b/docs/decisions/0040-chat-prompt-xml-support.md

index 42e77becc572..1a1bf19db7a2 100644

--- a/docs/decisions/0040-chat-prompt-xml-support.md

+++ b/docs/decisions/0040-chat-prompt-xml-support.md

@@ -109,13 +109,13 @@ Chosen option: "HTML encode all inserted content by default.", because it meets

This solution work as follows:

1. By default inserted content is treated as unsafe and will be encoded.

- 1. By default `HttpUtility.HtmlEncode` is used to encode all inserted content.

+ 1. By default `HttpUtility.HtmlEncode` in dotnet and `html.escape` in Python are used to encode all inserted content.

1. When the prompt is parsed into Chat History the text content will be automatically decoded.

- 1. By default `HttpUtility.HtmlDecode` is used to decode all Chat History content.

+ 1. By default `HttpUtility.HtmlDecode` in dotnet and `html.unescape` in Python are used to decode all Chat History content.

1. Developers can opt out as follows:

1. Set `AllowUnsafeContent = true` for the `PromptTemplateConfig` to allow function call return values to be trusted.

1. Set `AllowUnsafeContent = true` for the `InputVariable` to allow a specific input variable to be trusted.

- 1. Set `AllowUnsafeContent = true` for the `KernelPromptTemplateFactory` or `HandlebarsPromptTemplateFactory` to trust all inserted content i.e. revert to behavior before these changes were implemented.

+ 1. Set `AllowUnsafeContent = true` for the `KernelPromptTemplateFactory` or `HandlebarsPromptTemplateFactory` to trust all inserted content i.e. revert to behavior before these changes were implemented. In Python, this is done on each of the `PromptTemplate` classes, through the `PromptTemplateBase` class.

- Good, because values inserted into a prompt are not trusted by default.

- Bad, because there isn't a reliable way to decode message tags that were encoded.

diff --git a/docs/decisions/0044-OTel-semantic-convention.md b/docs/decisions/0044-OTel-semantic-convention.md

new file mode 100644

index 000000000000..b62b7c0afc24

--- /dev/null

+++ b/docs/decisions/0044-OTel-semantic-convention.md

@@ -0,0 +1,332 @@

+---

+# These are optional elements. Feel free to remove any of them.

+status: { accepted }

+contact: { Tao Chen }

+date: { 2024-05-02 }

+deciders: { Stephen Toub, Ben Thomas }

+consulted: { Stephen Toub, Liudmila Molkova, Ben Thomas }

+informed: { Dmytro Struk, Mark Wallace }

+---

+

+# Use standardized vocabulary and specification for observability in Semantic Kernel

+

+## Context and Problem Statement

+

+Observing LLM applications has been a huge ask from customers and the community. This work aims to ensure that SK provides the best developer experience while complying with the industry standards for observability in generative-AI-based applications.

+

+For more information, please refer to this issue: https://github.com/open-telemetry/semantic-conventions/issues/327

+

+### Semantic conventions

+

+The semantic conventions for generative AI are currently in their nascent stage, and as a result, many of the requirements outlined here may undergo changes in the future. Consequently, several features derived from this Architectural Decision Record (ADR) may be considered experimental. It is essential to remain adaptable and responsive to evolving industry standards to ensure the continuous improvement of our system's performance and reliability.

+

+- [Semantic conventions for generative AI](https://github.com/open-telemetry/semantic-conventions/tree/main/docs/gen-ai)

+- [Generic LLM attributes](https://github.com/open-telemetry/semantic-conventions/blob/main/docs/attributes-registry/gen-ai.md)

+

+### Telemetry requirements (Experimental)

+

+Based on the [initial version](https://github.com/open-telemetry/semantic-conventions/blob/651d779183ecc7c2f8cfa90bf94e105f7b9d3f5a/docs/attributes-registry/gen-ai.md), Semantic Kernel should provide the following attributes in activities that represent individual LLM requests:

+

+> `Activity` is a .Net concept and existed before OpenTelemetry. A `span` is an OpenTelemetry concept that is equivalent to an `Activity`.

+

+- (Required)`gen_ai.system`

+- (Required)`gen_ai.request.model`

+- (Recommended)`gen_ai.request.max_token`

+- (Recommended)`gen_ai.request.temperature`

+- (Recommended)`gen_ai.request.top_p`

+- (Recommended)`gen_ai.response.id`

+- (Recommended)`gen_ai.response.model`

+- (Recommended)`gen_ai.response.finish_reasons`

+- (Recommended)`gen_ai.response.prompt_tokens`

+- (Recommended)`gen_ai.response.completion_tokens`

+

+The following events will be optionally attached to an activity:

+| Event name| Attribute(s)|

+|---|---|

+|`gen_ai.content.prompt`|`gen_ai.prompt`|

+|`gen_ai.content.completion`|`gen_ai.completion`|

+

+> The kernel must provide configuration options to disable these events because they may contain PII.

+> See the [Semantic conventions for generative AI](https://github.com/open-telemetry/semantic-conventions/tree/main/docs/gen-ai) for requirement level for these attributes.

+

+## Where do we create the activities

+

+It is crucial to establish a clear line of responsibilities, particularly since certain service providers, such as the Azure OpenAI SDK, have pre-existing instrumentation. Our objective is to position our activities as close to the model level as possible to promote a more cohesive and consistent developer experience.

+

+```mermaid

+block-beta

+columns 1

+ Models

+ blockArrowId1<[" "]>(y)

+ block:Clients

+ columns 3

+ ConnectorTypeClientA["Instrumented client SDK (i.e. Azure OpenAI client)"]

+ ConnectorTypeClientB["Un-instrumented Client SDK"]

+ ConnectorTypeClientC["Custom client on REST API (i.e. HuggingFaceClient)"]

+ end

+ Connectors["AI Connectors"]

+ blockArrowId2<[" "]>(y)

+ SemanticKernel["Semantic Kernel"]

+ block:Kernel

+ Function

+ Planner

+ Agent

+ end

+```

+

+> Semantic Kernel also supports other types of connectors for memories/vector databases. We will discuss instrumentations for those connectors in a separate ADR.

+

+> Note that this will not change our approaches to [instrumentation for planners and kernel functions](./0025-planner-telemetry-enhancement.md). We may modify or remove some of the meters we created previously, which will introduce breaking changes.

+

+In order to keep the activities as close to the model level as possible, we should keep them at the connector level.

+

+### Out of scope

+

+These services will be discuss in the future:

+

+- Memory/vector database services

+- Audio to text services (`IAudioToTextService`)

+- Embedding services (`IEmbeddingGenerationService`)

+- Image to text services (`IImageToTextService`)

+- Text to audio services (`ITextToAudioService`)

+- Text to image services (`ITextToImageService`)

+

+## Considered Options

+

+- Scope of Activities

+ - All connectors, irrespective of the client SDKs used.

+ - Connectors that either lack instrumentation in their client SDKs or use custom clients.

+ - All connectors, noting that the attributes of activities derived from connectors and those from instrumented client SDKs do not overlap.

+- Implementations of Instrumentation

+ - Static class

+- Switches for experimental features and the collection of sensitive data

+ - App context switch

+

+### Scope of Activities

+

+#### All connectors, irrespective of the client SDKs utilized

+

+All AI connectors will generate activities for the purpose of tracing individual requests to models. Each activity will maintain a **consistent set of attributes**. This uniformity guarantees that users can monitor their LLM requests consistently, irrespective of the connectors used within their applications. However, it introduces the potential drawback of data duplication which **leads to greater costs**, as the attributes contained within these activities will encompass a broader set (i.e. additional SK-specific attributes) than those generated by the client SDKs, assuming that the client SDKs are likewise instrumented in alignment with the semantic conventions.

+

+> In an ideal world, it is anticipated that all client SDKs will eventually align with the semantic conventions.

+

+#### Connectors that either lack instrumentation in their client SDKs or utilize custom clients

+

+AI connectors paired with client SDKs that lack the capability to generate activities for LLM requests will take on the responsibility of creating such activities. In contrast, connectors associated with client SDKs that do already generate request activities will not be subject to further instrumentation. It is required that users subscribe to the activity sources offered by the client SDKs to ensure consistent tracking of LLM requests. This approach helps in **mitigating the costs** associated with unnecessary data duplication. However, it may introduce **inconsistencies in tracing**, as not all LLM requests will be accompanied by connector-generated activities.

+

+#### All connectors, noting that the attributes of activities derived from connectors and those from instrumented client SDKs do not overlap

+

+All connectors will generate activities for the purpose of tracing individual requests to models. The composition of these connector activities, specifically the attributes included, will be determined based on the instrumentation status of the associated client SDK. The aim is to include only the necessary attributes to prevent data duplication. Initially, a connector linked to a client SDK that lacks instrumentation will generate activities encompassing all potential attributes as outlined by the LLM semantic conventions, alongside some SK-specific attributes. However, once the client SDK becomes instrumented in alignment with these conventions, the connector will cease to include those previously added attributes in its activities, avoiding redundancy. This approach facilitates a **relatively consistent** development experience for user building with SK while **optimizing costs** associated with observability.

+

+### Instrumentation implementations

+

+#### Static class `ModelDiagnostics`

+

+This class will live under `dotnet\src\InternalUtilities\src\Diagnostics`.

+

+```C#

+// Example

+namespace Microsoft.SemanticKernel;

+

+internal static class ModelDiagnostics

+{

+ public static Activity? StartCompletionActivity(

+ string name,

+ string modelName,

+ string modelProvider,

+ string prompt,

+ PromptExecutionSettings? executionSettings)

+ {

+ ...

+ }

+

+ // Can be used for both non-streaming endpoints and streaming endpoints.

+ // For streaming, collect a list of `StreamingTextContent` and concatenate them into a single `TextContent` at the end of the streaming.

+ public static void SetCompletionResponses(

+ Activity? activity,

+ IEnumerable completions,

+ int promptTokens,

+ int completionTokens,

+ IEnumerable? finishReasons)

+ {

+ ...

+ }

+

+ // Contains more methods for chat completion and other services

+ ...

+}

+```

+

+Example usage

+

+```C#

+public async Task> GenerateTextAsync(

+ string prompt,

+ PromptExecutionSettings? executionSettings,

+ CancellationToken cancellationToken)

+{

+ using var activity = ModelDiagnostics.StartCompletionActivity(

+ $"text.generation {this._modelId}",

+ this._modelId,

+ "HuggingFace",

+ prompt,

+ executionSettings);

+

+ var completions = ...;

+ var finishReasons = ...;

+ // Usage can be estimated.

+ var promptTokens = ...;

+ var completionTokens = ...;

+

+ ModelDiagnostics.SetCompletionResponses(

+ activity,

+ completions,

+ promptTokens,

+ completionTokens,

+ finishReasons);

+

+ return completions;

+}

+```

+

+### Switches for experimental features and the collection of sensitive data

+

+#### App context switch

+

+We will introduce two flags to facilitate the explicit activation of tracing LLMs requests:

+

+1. `Microsoft.SemanticKernel.Experimental.EnableModelDiagnostics`

+ - Activating will enable the creation of activities that represent individual LLM requests.

+2. `Microsoft.SemanticKernel.Experimental.EnableModelDiagnosticsWithSensitiveData`

+ - Activating will enable the creation of activities that represent individual LLM requests, with events that may contain PII information.

+

+```C#

+// In application code

+if (builder.Environment.IsProduction())

+{

+ AppContext.SetSwitch("Microsoft.SemanticKernel.Experimental.EnableModelDiagnostics", true);

+}

+else

+{

+ AppContext.SetSwitch("Microsoft.SemanticKernel.Experimental.EnableModelDiagnosticsWithSensitiveData", true);

+}

+

+// Or in the project file

+

+

+

+

+

+

+

+```

+

+## Decision Outcome

+

+Chosen options:

+

+[x] Scope of Activities: **Option 3** - All connectors, noting that the attributes of activities derived from connectors and those from instrumented client SDKs do not overlap.

+

+[x] Instrumentation Implementation: **Option 1** - Static class

+

+[x] Experimental switch: **Option 1** - App context switch

+

+## Appendix

+

+### `AppContextSwitchHelper.cs`

+

+```C#

+internal static class AppContextSwitchHelper

+{

+ public static bool GetConfigValue(string appContextSwitchName)

+ {

+ if (AppContext.TryGetSwitch(appContextSwitchName, out bool value))

+ {

+ return value;

+ }

+

+ return false;

+ }

+}

+```

+

+### `ModelDiagnostics`

+

+```C#

+internal static class ModelDiagnostics

+{

+ // Consistent namespace for all connectors

+ private static readonly string s_namespace = typeof(ModelDiagnostics).Namespace;

+ private static readonly ActivitySource s_activitySource = new(s_namespace);

+

+ private const string EnableModelDiagnosticsSettingName = "Microsoft.SemanticKernel.Experimental.GenAI.EnableOTelDiagnostics";

+ private const string EnableSensitiveEventsSettingName = "Microsoft.SemanticKernel.Experimental.GenAI.EnableOTelDiagnosticsSensitive";

+

+ private static readonly bool s_enableSensitiveEvents = AppContextSwitchHelper.GetConfigValue(EnableSensitiveEventsSettingName);

+ private static readonly bool s_enableModelDiagnostics = AppContextSwitchHelper.GetConfigValue(EnableModelDiagnosticsSettingName) || s_enableSensitiveEvents;

+

+ public static Activity? StartCompletionActivity(string name, string modelName, string modelProvider, string prompt, PromptExecutionSettings? executionSettings)

+ {

+ if (!s_enableModelDiagnostics)

+ {

+ return null;

+ }

+

+ var activity = s_activitySource.StartActivityWithTags(

+ name,

+ new() {

+ new("gen_ai.request.model", modelName),

+ new("gen_ai.system", modelProvider),

+ ...

+ });

+

+ // Chat history is optional as it may contain sensitive data.

+ if (s_enableSensitiveEvents)

+ {

+ activity?.AttachSensitiveDataAsEvent("gen_ai.content.prompt", new() { new("gen_ai.prompt", prompt) });

+ }

+

+ return activity;

+ }

+ ...

+}

+```

+

+### Extensions

+

+```C#

+internal static class ActivityExtensions

+{

+ public static Activity? StartActivityWithTags(this ActivitySource source, string name, List> tags)

+ {

+ return source.StartActivity(

+ name,

+ ActivityKind.Internal,

+ Activity.Current?.Context ?? new ActivityContext(),

+ tags);

+ }

+

+ public static Activity EnrichAfterResponse(this Activity activity, List> tags)

+ {

+ tags.ForEach(tag =>

+ {

+ if (tag.Value is not null)

+ {

+ activity.SetTag(tag.Key, tag.Value);

+ }

+ });

+ }

+

+ public static Activity AttachSensitiveDataAsEvent(this Activity activity, string name, List> tags)

+ {

+ activity.AddEvent(new ActivityEvent(

+ name,

+ tags: new ActivityTagsCollection(tags)

+ ));

+

+ return activity;

+ }

+}

+```

+

+> Please be aware that the implementations provided above serve as illustrative examples, and the actual implementations within the codebase may undergo modifications.

diff --git a/dotnet/Directory.Packages.props b/dotnet/Directory.Packages.props

index 21b4b5bf5bd5..86beaba2698d 100644

--- a/dotnet/Directory.Packages.props

+++ b/dotnet/Directory.Packages.props

@@ -8,12 +8,13 @@

-

+

-

-

+

+

+

@@ -26,7 +27,7 @@

-

+

@@ -37,9 +38,9 @@

-

+

-

+

@@ -52,10 +53,10 @@

-

+

-

+

@@ -71,10 +72,9 @@

-

-

+

+

-

@@ -83,12 +83,15 @@

-

+

+

+

+

@@ -97,12 +100,12 @@

allruntime; build; native; contentfiles; analyzers; buildtransitive

-

+ allruntime; build; native; contentfiles; analyzers; buildtransitive

-

+ allruntime; build; native; contentfiles; analyzers; buildtransitive

diff --git a/dotnet/SK-dotnet.sln b/dotnet/SK-dotnet.sln

index d6eabd49cc4b..6320eeb19832 100644

--- a/dotnet/SK-dotnet.sln

+++ b/dotnet/SK-dotnet.sln

@@ -230,6 +230,9 @@ Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Connectors.AzureAISearch.Un

EndProject

Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Connectors.HuggingFace.UnitTests", "src\Connectors\Connectors.HuggingFace.UnitTests\Connectors.HuggingFace.UnitTests.csproj", "{1F96837A-61EC-4C8F-904A-07BEBD05FDEE}"

EndProject

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Connectors.MistralAI", "src\Connectors\Connectors.MistralAI\Connectors.MistralAI.csproj", "{14461919-E88D-49A9-BE8C-DF704CB79122}"

+EndProject

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Connectors.MistralAI.UnitTests", "src\Connectors\Connectors.MistralAI.UnitTests\Connectors.MistralAI.UnitTests.csproj", "{47DB70C3-A659-49EE-BD0F-BF5F0E0ECE05}"

Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Connectors.Google", "src\Connectors\Connectors.Google\Connectors.Google.csproj", "{6578D31B-2CF3-4FF4-A845-7A0412FEB42E}"

EndProject

Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Connectors.Google.UnitTests", "src\Connectors\Connectors.Google.UnitTests\Connectors.Google.UnitTests.csproj", "{648CF4FE-4AFC-4EB0-87DB-9C2FE935CA24}"

@@ -252,6 +255,9 @@ EndProject

Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Agents.OpenAI", "src\Agents\OpenAI\Agents.OpenAI.csproj", "{644A2F10-324D-429E-A1A3-887EAE64207F}"

EndProject

Project("{2150E333-8FDC-42A3-9474-1A3956D46DE8}") = "Demos", "Demos", "{5D4C0700-BBB5-418F-A7B2-F392B9A18263}"

+ ProjectSection(SolutionItems) = preProject

+ samples\Demos\README.md = samples\Demos\README.md

+ EndProjectSection

EndProject

Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "LearnResources", "samples\LearnResources\LearnResources.csproj", "{B04C26BC-A933-4A53-BE17-7875EB12E012}"

EndProject

@@ -283,10 +289,29 @@ Project("{2150E333-8FDC-42A3-9474-1A3956D46DE8}") = "samples", "samples", "{77E1

src\InternalUtilities\samples\YourAppException.cs = src\InternalUtilities\samples\YourAppException.cs

EndProjectSection

EndProject

-Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "ContentSafety", "samples\Demos\ContentSafety\ContentSafety.csproj", "{6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}"

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Functions.Prompty", "src\Functions\Functions.Prompty\Functions.Prompty.csproj", "{12B06019-740B-466D-A9E0-F05BC123A47D}"

+EndProject

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "PromptTemplates.Liquid", "src\Extensions\PromptTemplates.Liquid\PromptTemplates.Liquid.csproj", "{66D94E25-9B63-4C29-B7A1-3DFA17A90745}"

+EndProject

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "PromptTemplates.Liquid.UnitTests", "src\Extensions\PromptTemplates.Liquid.UnitTests\PromptTemplates.Liquid.UnitTests.csproj", "{CC6DEE89-57AA-494D-B40D-B09E1CCC6FAD}"

+EndProject

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Functions.Prompty.UnitTests", "src\Functions\Functions.Prompty.UnitTests\Functions.Prompty.UnitTests.csproj", "{AD787471-5E43-44DF-BF3E-5CD26C765B4E}"

+EndProject

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "ContentSafety", "samples\Demos\ContentSafety\ContentSafety.csproj", "{6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}"

EndProject

Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Concepts", "samples\Concepts\Concepts.csproj", "{925B1185-8B58-4E2D-95C9-4CA0BA9364E5}"

EndProject

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "FunctionInvocationApproval", "samples\Demos\FunctionInvocationApproval\FunctionInvocationApproval.csproj", "{6B56D8EE-9991-43E3-90B2-B8F5C5CE77C2}"

+EndProject

+Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "Connectors.Memory.SqlServer", "src\Connectors\Connectors.Memory.SqlServer\Connectors.Memory.SqlServer.csproj", "{24B8041B-92C6-4BB3-A699-C593AF5A870F}"

+EndProject

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "CodeInterpreterPlugin", "samples\Demos\CodeInterpreterPlugin\CodeInterpreterPlugin.csproj", "{3ED53702-0E53-473A-A0F4-645DB33541C2}"

+EndProject

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "QualityCheckWithFilters", "samples\Demos\QualityCheck\QualityCheckWithFilters\QualityCheckWithFilters.csproj", "{1D3EEB5B-0E06-4700-80D5-164956E43D0A}"

+EndProject

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "TimePlugin", "samples\Demos\TimePlugin\TimePlugin.csproj", "{F312FCE1-12D7-4DEF-BC29-2FF6618509F3}"

+Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Connectors.Memory.AzureCosmosDBNoSQL", "src\Connectors\Connectors.Memory.AzureCosmosDBNoSQL\Connectors.Memory.AzureCosmosDBNoSQL.csproj", "{B0B3901E-AF56-432B-8FAA-858468E5D0DF}"

+EndProject

Global

GlobalSection(SolutionConfigurationPlatforms) = preSolution

Debug|Any CPU = Debug|Any CPU

@@ -570,6 +595,18 @@ Global

{1F96837A-61EC-4C8F-904A-07BEBD05FDEE}.Publish|Any CPU.Build.0 = Debug|Any CPU

{1F96837A-61EC-4C8F-904A-07BEBD05FDEE}.Release|Any CPU.ActiveCfg = Release|Any CPU

{1F96837A-61EC-4C8F-904A-07BEBD05FDEE}.Release|Any CPU.Build.0 = Release|Any CPU

+ {14461919-E88D-49A9-BE8C-DF704CB79122}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {14461919-E88D-49A9-BE8C-DF704CB79122}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {14461919-E88D-49A9-BE8C-DF704CB79122}.Publish|Any CPU.ActiveCfg = Publish|Any CPU

+ {14461919-E88D-49A9-BE8C-DF704CB79122}.Publish|Any CPU.Build.0 = Publish|Any CPU

+ {14461919-E88D-49A9-BE8C-DF704CB79122}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {14461919-E88D-49A9-BE8C-DF704CB79122}.Release|Any CPU.Build.0 = Release|Any CPU

+ {47DB70C3-A659-49EE-BD0F-BF5F0E0ECE05}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {47DB70C3-A659-49EE-BD0F-BF5F0E0ECE05}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {47DB70C3-A659-49EE-BD0F-BF5F0E0ECE05}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

+ {47DB70C3-A659-49EE-BD0F-BF5F0E0ECE05}.Publish|Any CPU.Build.0 = Debug|Any CPU

+ {47DB70C3-A659-49EE-BD0F-BF5F0E0ECE05}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {47DB70C3-A659-49EE-BD0F-BF5F0E0ECE05}.Release|Any CPU.Build.0 = Release|Any CPU

{6578D31B-2CF3-4FF4-A845-7A0412FEB42E}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

{6578D31B-2CF3-4FF4-A845-7A0412FEB42E}.Debug|Any CPU.Build.0 = Debug|Any CPU

{6578D31B-2CF3-4FF4-A845-7A0412FEB42E}.Publish|Any CPU.ActiveCfg = Publish|Any CPU

@@ -654,24 +691,84 @@ Global

{1D98CF16-5156-40F0-91F0-76294B153DB3}.Publish|Any CPU.Build.0 = Debug|Any CPU

{1D98CF16-5156-40F0-91F0-76294B153DB3}.Release|Any CPU.ActiveCfg = Release|Any CPU

{1D98CF16-5156-40F0-91F0-76294B153DB3}.Release|Any CPU.Build.0 = Release|Any CPU

- {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

- {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Debug|Any CPU.Build.0 = Debug|Any CPU

- {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

- {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Publish|Any CPU.Build.0 = Debug|Any CPU

- {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Release|Any CPU.ActiveCfg = Release|Any CPU

- {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Release|Any CPU.Build.0 = Release|Any CPU

{87DA81FE-112E-4AF5-BEFB-0B91B993F749}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

{87DA81FE-112E-4AF5-BEFB-0B91B993F749}.Debug|Any CPU.Build.0 = Debug|Any CPU

{87DA81FE-112E-4AF5-BEFB-0B91B993F749}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

{87DA81FE-112E-4AF5-BEFB-0B91B993F749}.Publish|Any CPU.Build.0 = Debug|Any CPU

{87DA81FE-112E-4AF5-BEFB-0B91B993F749}.Release|Any CPU.ActiveCfg = Release|Any CPU

{87DA81FE-112E-4AF5-BEFB-0B91B993F749}.Release|Any CPU.Build.0 = Release|Any CPU

+ {12B06019-740B-466D-A9E0-F05BC123A47D}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {12B06019-740B-466D-A9E0-F05BC123A47D}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {12B06019-740B-466D-A9E0-F05BC123A47D}.Publish|Any CPU.ActiveCfg = Publish|Any CPU

+ {12B06019-740B-466D-A9E0-F05BC123A47D}.Publish|Any CPU.Build.0 = Publish|Any CPU

+ {12B06019-740B-466D-A9E0-F05BC123A47D}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {12B06019-740B-466D-A9E0-F05BC123A47D}.Release|Any CPU.Build.0 = Release|Any CPU

+ {66D94E25-9B63-4C29-B7A1-3DFA17A90745}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {66D94E25-9B63-4C29-B7A1-3DFA17A90745}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {66D94E25-9B63-4C29-B7A1-3DFA17A90745}.Publish|Any CPU.ActiveCfg = Publish|Any CPU

+ {66D94E25-9B63-4C29-B7A1-3DFA17A90745}.Publish|Any CPU.Build.0 = Publish|Any CPU

+ {66D94E25-9B63-4C29-B7A1-3DFA17A90745}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {66D94E25-9B63-4C29-B7A1-3DFA17A90745}.Release|Any CPU.Build.0 = Release|Any CPU

+ {CC6DEE89-57AA-494D-B40D-B09E1CCC6FAD}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {CC6DEE89-57AA-494D-B40D-B09E1CCC6FAD}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {CC6DEE89-57AA-494D-B40D-B09E1CCC6FAD}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

+ {CC6DEE89-57AA-494D-B40D-B09E1CCC6FAD}.Publish|Any CPU.Build.0 = Debug|Any CPU

+ {CC6DEE89-57AA-494D-B40D-B09E1CCC6FAD}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {CC6DEE89-57AA-494D-B40D-B09E1CCC6FAD}.Release|Any CPU.Build.0 = Release|Any CPU

+ {AD787471-5E43-44DF-BF3E-5CD26C765B4E}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {AD787471-5E43-44DF-BF3E-5CD26C765B4E}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {AD787471-5E43-44DF-BF3E-5CD26C765B4E}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

+ {AD787471-5E43-44DF-BF3E-5CD26C765B4E}.Publish|Any CPU.Build.0 = Debug|Any CPU

+ {AD787471-5E43-44DF-BF3E-5CD26C765B4E}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {AD787471-5E43-44DF-BF3E-5CD26C765B4E}.Release|Any CPU.Build.0 = Release|Any CPU

+ {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

+ {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Publish|Any CPU.Build.0 = Debug|Any CPU

+ {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2}.Release|Any CPU.Build.0 = Release|Any CPU

{925B1185-8B58-4E2D-95C9-4CA0BA9364E5}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

{925B1185-8B58-4E2D-95C9-4CA0BA9364E5}.Debug|Any CPU.Build.0 = Debug|Any CPU

{925B1185-8B58-4E2D-95C9-4CA0BA9364E5}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

{925B1185-8B58-4E2D-95C9-4CA0BA9364E5}.Publish|Any CPU.Build.0 = Debug|Any CPU

{925B1185-8B58-4E2D-95C9-4CA0BA9364E5}.Release|Any CPU.ActiveCfg = Release|Any CPU

{925B1185-8B58-4E2D-95C9-4CA0BA9364E5}.Release|Any CPU.Build.0 = Release|Any CPU

+ {6B56D8EE-9991-43E3-90B2-B8F5C5CE77C2}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {6B56D8EE-9991-43E3-90B2-B8F5C5CE77C2}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {6B56D8EE-9991-43E3-90B2-B8F5C5CE77C2}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

+ {6B56D8EE-9991-43E3-90B2-B8F5C5CE77C2}.Publish|Any CPU.Build.0 = Debug|Any CPU

+ {6B56D8EE-9991-43E3-90B2-B8F5C5CE77C2}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {6B56D8EE-9991-43E3-90B2-B8F5C5CE77C2}.Release|Any CPU.Build.0 = Release|Any CPU

+ {24B8041B-92C6-4BB3-A699-C593AF5A870F}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {24B8041B-92C6-4BB3-A699-C593AF5A870F}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {24B8041B-92C6-4BB3-A699-C593AF5A870F}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

+ {24B8041B-92C6-4BB3-A699-C593AF5A870F}.Publish|Any CPU.Build.0 = Debug|Any CPU

+ {24B8041B-92C6-4BB3-A699-C593AF5A870F}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {24B8041B-92C6-4BB3-A699-C593AF5A870F}.Release|Any CPU.Build.0 = Release|Any CPU

+ {3ED53702-0E53-473A-A0F4-645DB33541C2}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {3ED53702-0E53-473A-A0F4-645DB33541C2}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {3ED53702-0E53-473A-A0F4-645DB33541C2}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

+ {3ED53702-0E53-473A-A0F4-645DB33541C2}.Publish|Any CPU.Build.0 = Debug|Any CPU

+ {3ED53702-0E53-473A-A0F4-645DB33541C2}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {3ED53702-0E53-473A-A0F4-645DB33541C2}.Release|Any CPU.Build.0 = Release|Any CPU

+ {1D3EEB5B-0E06-4700-80D5-164956E43D0A}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {1D3EEB5B-0E06-4700-80D5-164956E43D0A}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {1D3EEB5B-0E06-4700-80D5-164956E43D0A}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

+ {1D3EEB5B-0E06-4700-80D5-164956E43D0A}.Publish|Any CPU.Build.0 = Debug|Any CPU

+ {1D3EEB5B-0E06-4700-80D5-164956E43D0A}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {1D3EEB5B-0E06-4700-80D5-164956E43D0A}.Release|Any CPU.Build.0 = Release|Any CPU

+ {F312FCE1-12D7-4DEF-BC29-2FF6618509F3}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {F312FCE1-12D7-4DEF-BC29-2FF6618509F3}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {F312FCE1-12D7-4DEF-BC29-2FF6618509F3}.Publish|Any CPU.ActiveCfg = Debug|Any CPU

+ {F312FCE1-12D7-4DEF-BC29-2FF6618509F3}.Publish|Any CPU.Build.0 = Debug|Any CPU

+ {F312FCE1-12D7-4DEF-BC29-2FF6618509F3}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {F312FCE1-12D7-4DEF-BC29-2FF6618509F3}.Release|Any CPU.Build.0 = Release|Any CPU

+ {B0B3901E-AF56-432B-8FAA-858468E5D0DF}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {B0B3901E-AF56-432B-8FAA-858468E5D0DF}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {B0B3901E-AF56-432B-8FAA-858468E5D0DF}.Publish|Any CPU.ActiveCfg = Publish|Any CPU

+ {B0B3901E-AF56-432B-8FAA-858468E5D0DF}.Publish|Any CPU.Build.0 = Publish|Any CPU

+ {B0B3901E-AF56-432B-8FAA-858468E5D0DF}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {B0B3901E-AF56-432B-8FAA-858468E5D0DF}.Release|Any CPU.Build.0 = Release|Any CPU

EndGlobalSection

GlobalSection(SolutionProperties) = preSolution

HideSolutionNode = FALSE

@@ -745,6 +842,8 @@ Global

{607DD6FA-FA0D-45E6-80BA-22A373609E89} = {5C246969-D794-4EC3-8E8F-F90D4D166420}

{BCDD5B96-CCC3-46B9-8217-89CD5885F6A2} = {0247C2C9-86C3-45BA-8873-28B0948EDC0C}

{1F96837A-61EC-4C8F-904A-07BEBD05FDEE} = {1B4CBDE0-10C2-4E7D-9CD0-FE7586C96ED1}

+ {14461919-E88D-49A9-BE8C-DF704CB79122} = {1B4CBDE0-10C2-4E7D-9CD0-FE7586C96ED1}

+ {47DB70C3-A659-49EE-BD0F-BF5F0E0ECE05} = {1B4CBDE0-10C2-4E7D-9CD0-FE7586C96ED1}

{6578D31B-2CF3-4FF4-A845-7A0412FEB42E} = {1B4CBDE0-10C2-4E7D-9CD0-FE7586C96ED1}

{648CF4FE-4AFC-4EB0-87DB-9C2FE935CA24} = {1B4CBDE0-10C2-4E7D-9CD0-FE7586C96ED1}

{D06465FA-0308-494C-920B-D502DA5690CB} = {1B4CBDE0-10C2-4E7D-9CD0-FE7586C96ED1}

@@ -762,10 +861,22 @@ Global

{5C813F83-9FD8-462A-9B38-865CA01C384C} = {5D4C0700-BBB5-418F-A7B2-F392B9A18263}

{D5E4C960-53B3-4C35-99C1-1BA97AECC489} = {5D4C0700-BBB5-418F-A7B2-F392B9A18263}

{1D98CF16-5156-40F0-91F0-76294B153DB3} = {FA3720F1-C99A-49B2-9577-A940257098BF}

- {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2} = {5D4C0700-BBB5-418F-A7B2-F392B9A18263}

{87DA81FE-112E-4AF5-BEFB-0B91B993F749} = {FA3720F1-C99A-49B2-9577-A940257098BF}

{77E141BA-AF5E-4C01-A970-6C07AC3CD55A} = {4D3DAE63-41C6-4E1C-A35A-E77BDFC40675}

+ {12B06019-740B-466D-A9E0-F05BC123A47D} = {9ECD1AA0-75B3-4E25-B0B5-9F0945B64974}

+ {66D94E25-9B63-4C29-B7A1-3DFA17A90745} = {078F96B4-09E1-4E0E-B214-F71A4F4BF633}

+ {CC6DEE89-57AA-494D-B40D-B09E1CCC6FAD} = {078F96B4-09E1-4E0E-B214-F71A4F4BF633}

+ {AD787471-5E43-44DF-BF3E-5CD26C765B4E} = {9ECD1AA0-75B3-4E25-B0B5-9F0945B64974}

+ {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2} = {5D4C0700-BBB5-418F-A7B2-F392B9A18263}

+ {925B1185-8B58-4E2D-95C9-4CA0BA9364E5} = {FA3720F1-C99A-49B2-9577-A940257098BF}

+ {6B56D8EE-9991-43E3-90B2-B8F5C5CE77C2} = {5D4C0700-BBB5-418F-A7B2-F392B9A18263}

+ {24B8041B-92C6-4BB3-A699-C593AF5A870F} = {24503383-A8C4-4255-9998-28D70FE8E99A}

+ {3ED53702-0E53-473A-A0F4-645DB33541C2} = {5D4C0700-BBB5-418F-A7B2-F392B9A18263}

+ {1D3EEB5B-0E06-4700-80D5-164956E43D0A} = {5D4C0700-BBB5-418F-A7B2-F392B9A18263}

+ {F312FCE1-12D7-4DEF-BC29-2FF6618509F3} = {5D4C0700-BBB5-418F-A7B2-F392B9A18263}

+ {6EF9663D-976C-4A27-B8D3-8B1E63BA3BF2} = {5D4C0700-BBB5-418F-A7B2-F392B9A18263}

{925B1185-8B58-4E2D-95C9-4CA0BA9364E5} = {FA3720F1-C99A-49B2-9577-A940257098BF}

+ {B0B3901E-AF56-432B-8FAA-858468E5D0DF} = {24503383-A8C4-4255-9998-28D70FE8E99A}

EndGlobalSection

GlobalSection(ExtensibilityGlobals) = postSolution

SolutionGuid = {FBDC56A3-86AD-4323-AA0F-201E59123B83}

diff --git a/dotnet/code-coverage.ps1 b/dotnet/code-coverage.ps1

index 108dbdffa776..f2c662d9212d 100644

--- a/dotnet/code-coverage.ps1

+++ b/dotnet/code-coverage.ps1

@@ -27,6 +27,7 @@ foreach ($project in $testProjects) {

dotnet test $testProjectPath `

--collect:"XPlat Code Coverage" `

--results-directory:$coverageOutputPath `

+ -- DataCollectionRunSettings.DataCollectors.DataCollector.Configuration.ExcludeByAttribute=ObsoleteAttribute,GeneratedCodeAttribute,CompilerGeneratedAttribute,ExcludeFromCodeCoverageAttribute `

}

diff --git a/dotnet/docs/EXPERIMENTS.md b/dotnet/docs/EXPERIMENTS.md

index 374991da97b0..2be4606e5596 100644

--- a/dotnet/docs/EXPERIMENTS.md

+++ b/dotnet/docs/EXPERIMENTS.md

@@ -6,7 +6,7 @@ You can use the following diagnostic IDs to ignore warnings or errors for a part

```xml

- SKEXP0001,SKEXP0010

+ $(NoWarn);SKEXP0001,SKEXP0010

```

@@ -58,6 +58,7 @@ You can use the following diagnostic IDs to ignore warnings or errors for a part

| SKEXP0040 | Markdown functions | | | | | |

| SKEXP0040 | OpenAPI functions | | | | | |

| SKEXP0040 | OpenAPI function extensions | | | | | |

+| SKEXP0040 | Prompty Format support | | | | | |

| | | | | | | |

| SKEXP0050 | Core plugins | | | | | |

| SKEXP0050 | Document plugins | | | | | |

@@ -78,4 +79,4 @@ You can use the following diagnostic IDs to ignore warnings or errors for a part

| SKEXP0101 | Experiment with Assistants | | | | | |

| SKEXP0101 | Experiment with Flow Orchestration | | | | | |

| | | | | | | |

-| SKEXP0110 | Agent Framework | | | | | |

+| SKEXP0110 | Agent Framework | | | | | |

\ No newline at end of file

diff --git a/dotnet/docs/TELEMETRY.md b/dotnet/docs/TELEMETRY.md

index 50eb520e484d..3bcef7e63fc1 100644

--- a/dotnet/docs/TELEMETRY.md

+++ b/dotnet/docs/TELEMETRY.md

@@ -1,9 +1,9 @@

# Telemetry

Telemetry in Semantic Kernel (SK) .NET implementation includes _logging_, _metering_ and _tracing_.

-The code is instrumented using native .NET instrumentation tools, which means that it's possible to use different monitoring platforms (e.g. Application Insights, Prometheus, Grafana etc.).

+The code is instrumented using native .NET instrumentation tools, which means that it's possible to use different monitoring platforms (e.g. Application Insights, Aspire dashboard, Prometheus, Grafana etc.).

-Code example using Application Insights can be found [here](https://github.com/microsoft/semantic-kernel/blob/main/dotnet/samples/TelemetryExample).

+Code example using Application Insights can be found [here](../samples/Demos/TelemetryWithAppInsights/).

## Logging

@@ -108,7 +108,7 @@ Tracing is implemented with `Activity` class from `System.Diagnostics` namespace

Available activity sources:

- _Microsoft.SemanticKernel.Planning_ - creates activities for all planners.

-- _Microsoft.SemanticKernel_ - creates activities for `KernelFunction`.

+- _Microsoft.SemanticKernel_ - creates activities for `KernelFunction` as well as requests to models.

### Examples

diff --git a/dotnet/notebooks/00-getting-started.ipynb b/dotnet/notebooks/00-getting-started.ipynb

index f850d4d20190..1977879b9b79 100644

--- a/dotnet/notebooks/00-getting-started.ipynb

+++ b/dotnet/notebooks/00-getting-started.ipynb

@@ -61,7 +61,7 @@

"outputs": [],

"source": [

"// Import Semantic Kernel\n",

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\""

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\""

]

},

{

@@ -138,7 +138,7 @@

"outputs": [],

"source": [

"// FunPlugin directory path\n",

- "var funPluginDirectoryPath = Path.Combine(System.IO.Directory.GetCurrentDirectory(), \"..\", \"..\", \"samples\", \"plugins\", \"FunPlugin\");\n",

+ "var funPluginDirectoryPath = Path.Combine(System.IO.Directory.GetCurrentDirectory(), \"..\", \"..\", \"prompt_template_samples\", \"FunPlugin\");\n",

"\n",

"// Load the FunPlugin from the Plugins Directory\n",

"var funPluginFunctions = kernel.ImportPluginFromPromptDirectory(funPluginDirectoryPath);\n",

diff --git a/dotnet/notebooks/01-basic-loading-the-kernel.ipynb b/dotnet/notebooks/01-basic-loading-the-kernel.ipynb

index a5f6d01dc289..f9d7e5b8abe4 100644

--- a/dotnet/notebooks/01-basic-loading-the-kernel.ipynb

+++ b/dotnet/notebooks/01-basic-loading-the-kernel.ipynb

@@ -32,7 +32,7 @@

},

"outputs": [],

"source": [

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\""

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\""

]

},

{

diff --git a/dotnet/notebooks/02-running-prompts-from-file.ipynb b/dotnet/notebooks/02-running-prompts-from-file.ipynb

index 0a23abb9e88a..2475712372c8 100644

--- a/dotnet/notebooks/02-running-prompts-from-file.ipynb

+++ b/dotnet/notebooks/02-running-prompts-from-file.ipynb

@@ -93,7 +93,7 @@

},

"outputs": [],

"source": [

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\"\n",

"\n",

"#!import config/Settings.cs\n",

"\n",

@@ -135,7 +135,7 @@

"outputs": [],

"source": [

"// FunPlugin directory path\n",

- "var funPluginDirectoryPath = Path.Combine(System.IO.Directory.GetCurrentDirectory(), \"..\", \"..\", \"samples\", \"plugins\", \"FunPlugin\");\n",

+ "var funPluginDirectoryPath = Path.Combine(System.IO.Directory.GetCurrentDirectory(), \"..\", \"..\", \"prompt_template_samples\", \"FunPlugin\");\n",

"\n",

"// Load the FunPlugin from the Plugins Directory\n",

"var funPluginFunctions = kernel.ImportPluginFromPromptDirectory(funPluginDirectoryPath);"

diff --git a/dotnet/notebooks/03-semantic-function-inline.ipynb b/dotnet/notebooks/03-semantic-function-inline.ipynb

index 133bcf8ee21c..3ea79d955c37 100644

--- a/dotnet/notebooks/03-semantic-function-inline.ipynb

+++ b/dotnet/notebooks/03-semantic-function-inline.ipynb

@@ -51,7 +51,7 @@

},

"outputs": [],

"source": [

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\"\n",

"\n",

"#!import config/Settings.cs\n",

"\n",

diff --git a/dotnet/notebooks/04-kernel-arguments-chat.ipynb b/dotnet/notebooks/04-kernel-arguments-chat.ipynb

index bcd9748763d7..9af04e818fae 100644

--- a/dotnet/notebooks/04-kernel-arguments-chat.ipynb

+++ b/dotnet/notebooks/04-kernel-arguments-chat.ipynb

@@ -30,7 +30,7 @@

},

"outputs": [],

"source": [

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\"\n",

"#!import config/Settings.cs\n",

"\n",

"using Microsoft.SemanticKernel;\n",

diff --git a/dotnet/notebooks/05-using-the-planner.ipynb b/dotnet/notebooks/05-using-the-planner.ipynb

index 51e3b057ae71..e58f351ae721 100644

--- a/dotnet/notebooks/05-using-the-planner.ipynb

+++ b/dotnet/notebooks/05-using-the-planner.ipynb

@@ -25,8 +25,8 @@

},

"outputs": [],

"source": [

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\"\n",

- "#r \"nuget: Microsoft.SemanticKernel.Planners.Handlebars, 1.0.1-preview\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel.Planners.Handlebars, 1.11.1-preview\"\n",

"\n",

"#!import config/Settings.cs\n",

"#!import config/Utils.cs\n",

@@ -99,7 +99,7 @@

},

"outputs": [],

"source": [

- "var pluginsDirectory = Path.Combine(System.IO.Directory.GetCurrentDirectory(), \"..\", \"..\", \"samples\", \"plugins\");\n",

+ "var pluginsDirectory = Path.Combine(System.IO.Directory.GetCurrentDirectory(), \"..\", \"..\", \"prompt_template_samples\");\n",

"\n",

"kernel.ImportPluginFromPromptDirectory(Path.Combine(pluginsDirectory, \"SummarizePlugin\"));\n",

"kernel.ImportPluginFromPromptDirectory(Path.Combine(pluginsDirectory, \"WriterPlugin\"));"

diff --git a/dotnet/notebooks/06-memory-and-embeddings.ipynb b/dotnet/notebooks/06-memory-and-embeddings.ipynb

index 5b8e902cd179..a1656d450edc 100644

--- a/dotnet/notebooks/06-memory-and-embeddings.ipynb

+++ b/dotnet/notebooks/06-memory-and-embeddings.ipynb

@@ -33,8 +33,8 @@

},

"outputs": [],

"source": [

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\"\n",

- "#r \"nuget: Microsoft.SemanticKernel.Plugins.Memory, 1.0.1-alpha\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel.Plugins.Memory, 1.11.1-alpha\"\n",

"#r \"nuget: System.Linq.Async, 6.0.1\"\n",

"\n",

"#!import config/Settings.cs\n",

@@ -234,7 +234,7 @@

"source": [

"using Microsoft.SemanticKernel.Plugins.Memory;\n",

"\n",

- "#pragma warning disable SKEXP0050\n",

+ "#pragma warning disable SKEXP0001, SKEXP0050\n",

"\n",

"// TextMemoryPlugin provides the \"recall\" function\n",

"kernel.ImportPluginFromObject(new TextMemoryPlugin(memory));"

@@ -293,7 +293,7 @@

},

"outputs": [],

"source": [

- "#pragma warning disable SKEXP0050\n",

+ "#pragma warning disable SKEXP0001, SKEXP0050\n",

"\n",

"var arguments = new KernelArguments();\n",

"\n",

diff --git a/dotnet/notebooks/07-DALL-E-3.ipynb b/dotnet/notebooks/07-DALL-E-3.ipynb

index 1db64c8f2fd8..4c0ef213e87b 100644

--- a/dotnet/notebooks/07-DALL-E-3.ipynb

+++ b/dotnet/notebooks/07-DALL-E-3.ipynb

@@ -33,7 +33,7 @@

"source": [

"// Usual setup: importing Semantic Kernel SDK and SkiaSharp, used to display images inline.\n",

"\n",

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\"\n",

"#r \"nuget: System.Numerics.Tensors, 8.0.0\"\n",

"#r \"nuget: SkiaSharp, 2.88.3\"\n",

"\n",

diff --git a/dotnet/notebooks/08-chatGPT-with-DALL-E-3.ipynb b/dotnet/notebooks/08-chatGPT-with-DALL-E-3.ipynb

index c8fbef36f087..c573f57cf2fc 100644

--- a/dotnet/notebooks/08-chatGPT-with-DALL-E-3.ipynb

+++ b/dotnet/notebooks/08-chatGPT-with-DALL-E-3.ipynb

@@ -56,7 +56,7 @@

"source": [

"// Usual setup: importing Semantic Kernel SDK and SkiaSharp, used to display images inline.\n",

"\n",

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\"\n",

"#r \"nuget: SkiaSharp, 2.88.3\"\n",

"\n",

"#!import config/Settings.cs\n",

diff --git a/dotnet/notebooks/09-memory-with-chroma.ipynb b/dotnet/notebooks/09-memory-with-chroma.ipynb

index 8cfd51637546..66a93ec523b6 100644

--- a/dotnet/notebooks/09-memory-with-chroma.ipynb

+++ b/dotnet/notebooks/09-memory-with-chroma.ipynb

@@ -38,9 +38,9 @@

},

"outputs": [],

"source": [

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\"\n",

- "#r \"nuget: Microsoft.SemanticKernel.Connectors.Chroma, 1.0.1-alpha\"\n",

- "#r \"nuget: Microsoft.SemanticKernel.Plugins.Memory, 1.0.1-alpha\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel.Connectors.Chroma, 1.11.1-alpha\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel.Plugins.Memory, 1.11.1-alpha\"\n",

"#r \"nuget: System.Linq.Async, 6.0.1\"\n",

"\n",

"#!import config/Settings.cs\n",

@@ -244,7 +244,7 @@

},

"outputs": [],

"source": [

- "#pragma warning disable SKEXP0050\n",

+ "#pragma warning disable SKEXP0001, SKEXP0050\n",

"\n",

"// TextMemoryPlugin provides the \"recall\" function\n",

"kernel.ImportPluginFromObject(new TextMemoryPlugin(memory));"

@@ -303,7 +303,7 @@

},

"outputs": [],

"source": [

- "#pragma warning disable SKEXP0050\n",

+ "#pragma warning disable SKEXP0001, SKEXP0050\n",

"\n",

"var arguments = new KernelArguments();\n",

"\n",

@@ -442,7 +442,7 @@

" = \"Jupyter notebook describing how to pass prompts from a file to a semantic plugin or function\",\n",

" [\"https://github.com/microsoft/semantic-kernel/blob/main/dotnet/notebooks/00-getting-started.ipynb\"]\n",

" = \"Jupyter notebook describing how to get started with the Semantic Kernel\",\n",

- " [\"https://github.com/microsoft/semantic-kernel/tree/main/samples/plugins/ChatPlugin/ChatGPT\"]\n",

+ " [\"https://github.com/microsoft/semantic-kernel/tree/main/prompt_template_samples/ChatPlugin/ChatGPT\"]\n",

" = \"Sample demonstrating how to create a chat plugin interfacing with ChatGPT\",\n",

" [\"https://github.com/microsoft/semantic-kernel/blob/main/dotnet/src/Plugins/Plugins.Memory/VolatileMemoryStore.cs\"]\n",

" = \"C# class that defines a volatile embedding store\",\n",

diff --git a/dotnet/notebooks/10-BingSearch-using-kernel.ipynb b/dotnet/notebooks/10-BingSearch-using-kernel.ipynb

index 47ba404b1b73..2f5534b79cbb 100644

--- a/dotnet/notebooks/10-BingSearch-using-kernel.ipynb

+++ b/dotnet/notebooks/10-BingSearch-using-kernel.ipynb

@@ -35,9 +35,9 @@

},

"outputs": [],

"source": [

- "#r \"nuget: Microsoft.SemanticKernel, 1.0.1\"\n",

- "#r \"nuget: Microsoft.SemanticKernel.Plugins.Web, 1.0.1-alpha\"\n",

- "#r \"nuget: Microsoft.SemanticKernel.Plugins.Core, 1.0.1-alpha\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel, 1.11.1\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel.Plugins.Web, 1.11.1-alpha\"\n",

+ "#r \"nuget: Microsoft.SemanticKernel.Plugins.Core, 1.11.1-alpha\"\n",

"\n",

"#!import config/Settings.cs\n",

"#!import config/Utils.cs\n",

diff --git a/dotnet/nuget/nuget-package.props b/dotnet/nuget/nuget-package.props

index 4ce4b56ec772..8473f163e15d 100644

--- a/dotnet/nuget/nuget-package.props

+++ b/dotnet/nuget/nuget-package.props

@@ -1,7 +1,7 @@

- 1.10.0

+ 1.13.0$(VersionPrefix)-$(VersionSuffix)$(VersionPrefix)

diff --git a/dotnet/samples/Concepts/Agents/Legacy_AgentCollaboration.cs b/dotnet/samples/Concepts/Agents/Legacy_AgentCollaboration.cs

index afe4e14bd4d5..53ae0c07662a 100644

--- a/dotnet/samples/Concepts/Agents/Legacy_AgentCollaboration.cs

+++ b/dotnet/samples/Concepts/Agents/Legacy_AgentCollaboration.cs

@@ -157,7 +157,7 @@ private void DisplayMessages(IEnumerable messages, IAgent? agent =

private void DisplayMessage(IChatMessage message, IAgent? agent = null)

{

Console.WriteLine($"[{message.Id}]");

- if (agent != null)

+ if (agent is not null)

{

Console.WriteLine($"# {message.Role}: ({agent.Name}) {message.Content}");

}

diff --git a/dotnet/samples/Concepts/Agents/Legacy_AgentDelegation.cs b/dotnet/samples/Concepts/Agents/Legacy_AgentDelegation.cs

index a8570cbe5189..86dacb9c256d 100644

--- a/dotnet/samples/Concepts/Agents/Legacy_AgentDelegation.cs

+++ b/dotnet/samples/Concepts/Agents/Legacy_AgentDelegation.cs

@@ -29,7 +29,7 @@ public async Task RunAsync()

{

Console.WriteLine("======== Example71_AgentDelegation ========");

- if (TestConfiguration.OpenAI.ApiKey == null)

+ if (TestConfiguration.OpenAI.ApiKey is null)

{

Console.WriteLine("OpenAI apiKey not found. Skipping example.");

return;

diff --git a/dotnet/samples/Concepts/Agents/Legacy_AgentTools.cs b/dotnet/samples/Concepts/Agents/Legacy_AgentTools.cs

index f2eff8977e66..acacc1ecc2fd 100644

--- a/dotnet/samples/Concepts/Agents/Legacy_AgentTools.cs

+++ b/dotnet/samples/Concepts/Agents/Legacy_AgentTools.cs

@@ -73,7 +73,7 @@ public async Task RunRetrievalToolAsync()

Console.WriteLine("======== Using Retrieval tool ========");

- if (TestConfiguration.OpenAI.ApiKey == null)

+ if (TestConfiguration.OpenAI.ApiKey is null)

{

Console.WriteLine("OpenAI apiKey not found. Skipping example.");

return;

@@ -125,7 +125,7 @@ private async Task ChatAsync(

params string[] questions)

{

string[]? fileIds = null;

- if (fileId != null)

+ if (fileId is not null)

{

fileIds = [fileId];

}

diff --git a/dotnet/samples/Concepts/Caching/SemanticCachingWithFilters.cs b/dotnet/samples/Concepts/Caching/SemanticCachingWithFilters.cs

new file mode 100644

index 000000000000..cd90de3964b4

--- /dev/null

+++ b/dotnet/samples/Concepts/Caching/SemanticCachingWithFilters.cs

@@ -0,0 +1,248 @@

+// Copyright (c) Microsoft. All rights reserved.

+

+using System.Diagnostics;

+using Microsoft.Extensions.DependencyInjection;

+using Microsoft.SemanticKernel;

+using Microsoft.SemanticKernel.Connectors.AzureCosmosDBMongoDB;

+using Microsoft.SemanticKernel.Connectors.Redis;

+using Microsoft.SemanticKernel.Memory;

+

+namespace Caching;

+

+///

+/// This example shows how to achieve Semantic Caching with Filters.

+/// is used to get rendered prompt and check in cache if similar prompt was already answered.

+/// If there is a record in cache, then previously cached answer will be returned to the user instead of making a call to LLM.

+/// If there is no record in cache, a call to LLM will be performed, and result will be cached together with rendered prompt.

+/// is used to update cache with rendered prompt and related LLM result.

+///

+public class SemanticCachingWithFilters(ITestOutputHelper output) : BaseTest(output)

+{

+ ///

+ /// Similarity/relevance score, from 0 to 1, where 1 means exact match.

+ /// It's possible to change this value during testing to see how caching logic will behave.

+ ///

+ private const double SimilarityScore = 0.9;

+

+ ///

+ /// Executing similar requests two times using in-memory caching store to compare execution time and results.

+ /// Second execution is faster, because the result is returned from cache.

+ ///

+ [Fact]

+ public async Task InMemoryCacheAsync()

+ {

+ var kernel = GetKernelWithCache(_ => new VolatileMemoryStore());

+

+ var result1 = await ExecuteAsync(kernel, "First run", "What's the tallest building in New York?");

+ var result2 = await ExecuteAsync(kernel, "Second run", "What is the highest building in New York City?");

+

+ Console.WriteLine($"Result 1: {result1}");

+ Console.WriteLine($"Result 2: {result2}");

+

+ /*

+ Output:

+ First run: What's the tallest building in New York?

+ Elapsed Time: 00:00:03.828

+ Second run: What is the highest building in New York City?

+ Elapsed Time: 00:00:00.541

+ Result 1: The tallest building in New York is One World Trade Center, also known as Freedom Tower.It stands at 1,776 feet(541.3 meters) tall, including its spire.

+ Result 2: The tallest building in New York is One World Trade Center, also known as Freedom Tower.It stands at 1,776 feet(541.3 meters) tall, including its spire.

+ */

+ }

+

+ ///

+ /// Executing similar requests two times using Redis caching store to compare execution time and results.

+ /// Second execution is faster, because the result is returned from cache.

+ /// How to run Redis on Docker locally: https://redis.io/docs/latest/operate/oss_and_stack/install/install-stack/docker/

+ ///

+ [Fact]

+ public async Task RedisCacheAsync()

+ {

+ var kernel = GetKernelWithCache(_ => new RedisMemoryStore("localhost:6379", vectorSize: 1536));

+

+ var result1 = await ExecuteAsync(kernel, "First run", "What's the tallest building in New York?");

+ var result2 = await ExecuteAsync(kernel, "Second run", "What is the highest building in New York City?");

+

+ Console.WriteLine($"Result 1: {result1}");

+ Console.WriteLine($"Result 2: {result2}");

+

+ /*

+ First run: What's the tallest building in New York?

+ Elapsed Time: 00:00:03.674

+ Second run: What is the highest building in New York City?

+ Elapsed Time: 00:00:00.292

+ Result 1: The tallest building in New York is One World Trade Center, also known as Freedom Tower. It stands at 1,776 feet (541 meters) tall, including its spire.

+ Result 2: The tallest building in New York is One World Trade Center, also known as Freedom Tower. It stands at 1,776 feet (541 meters) tall, including its spire.

+ */

+ }

+

+ ///