
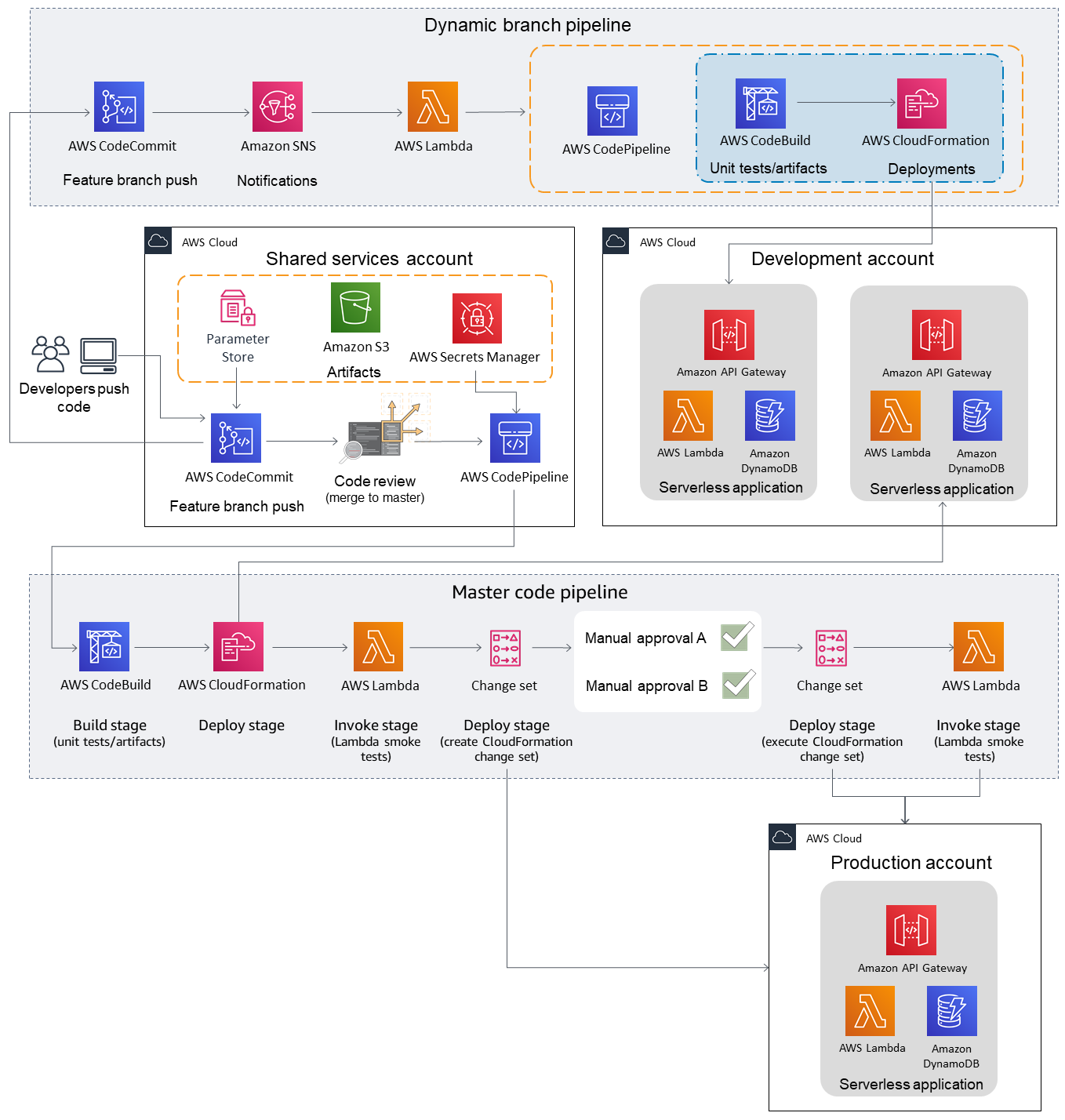

Hi everyone, we're trying to implement a Gitflow-like, pipeline-based strategy in our company. This project listens for new commits in CodeCommit and creates a new pipeline per branch. Each pipeline deploys a CloudFormation stack to an account: master and feature branches are deployed to the Development account, and, once approved, the master branch is also pushed to the Production account. This works for a simple stack like the one in the example, which only deploys an API Gateway, Lambda, and DynamoDB trio.

However, I'm having trouble understanding how to scale this approach to a reasonably large backend. We are dealing with 100+ Lambdas, API Gateway routes, 10+ databases, IoT routes, etc. I can't imagine having to deploy all of these just to test, say, a new feature that only touches a single API Gateway -> Lambda -> DynamoDB route. And what if the feature I am testing is a fairly central service that many other services need to talk to? In that case it seems I would need to redeploy almost the entire backend just to test the new feature, and then one feature per branch doesn't make much sense financially (CodeBuild costs add up quite fast).

So what is the most scalable environment strategy?
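To make the scaling concern concrete, here is a minimal Python sketch (not part of the example project) of why a central service drags in so much. It assumes a hypothetical call graph where each service lists the services it calls, and it collects the changed service plus every service that calls it, directly or indirectly, which is roughly the set a branch environment would need for an end-to-end test. The service names, `CALLS`, and `stacks_to_deploy` are made up for illustration.

```python
from collections import deque

# Hypothetical call graph: each service maps to the services it calls.
CALLS: dict[str, set[str]] = {
    "orders-api": {"auth", "inventory"},
    "inventory": {"auth"},
    "billing": {"auth", "orders-api"},
    "reports": {"billing"},
    "devices-iot": {"auth"},
    "catalog": set(),
}


def callers_of(graph: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert the call graph: service -> services that call it."""
    inverted: dict[str, set[str]] = {name: set() for name in graph}
    for caller, callees in graph.items():
        for callee in callees:
            inverted.setdefault(callee, set()).add(caller)
    return inverted


def stacks_to_deploy(changed: str, graph: dict[str, set[str]]) -> set[str]:
    """Breadth-first walk over callers: the changed service plus everything that reaches it."""
    inverted = callers_of(graph)
    impacted = {changed}
    queue = deque([changed])
    while queue:
        service = queue.popleft()
        for caller in inverted.get(service, set()):
            if caller not in impacted:
                impacted.add(caller)
                queue.append(caller)
    return impacted


# A leaf service only needs itself...
print(sorted(stacks_to_deploy("catalog", CALLS)))  # -> ['catalog']
# ...while a central one like "auth" pulls in almost everything.
print(sorted(stacks_to_deploy("auth", CALLS)))
# -> ['auth', 'billing', 'devices-iot', 'inventory', 'orders-api', 'reports']
```

With 100+ Lambdas the same walk from a shared service would touch most of the graph, which is exactly the cost problem described above.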


Replies: 1 comment


Here's my take on this. Do with it what you will 🙂.

Hope this helps! Thanks,
